...making Linux just a little more fun!

April 2011 (#185):

- News Bytes, by Deividson Luiz Okopnik and Howard Dyckoff

- Linux based SMB NAS System Performance Optimization, by Sagar Borikar and Rajesh Palani

- Activae: towards a free and community-based platform for digital asset management, by Manuel Domínguez-Dorado

- Away Mission for April 2011, by Howard Dyckoff

- HAL: Part 3 Functions, by Henry Grebler

- synCRONicity: A riff on perceived limitations of cron, by Henry Grebler

- Applying Yum Package Updates to Multiple Servers Using Fabric, by Ikuya Yamada and Yoshiyasu Takefuji

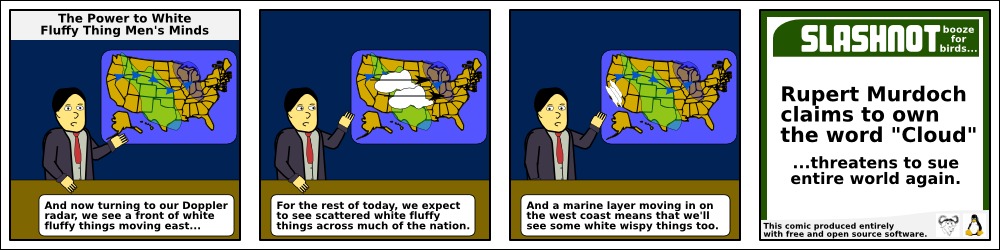

- Ubuntu, the cloud OS, by Neil Levine

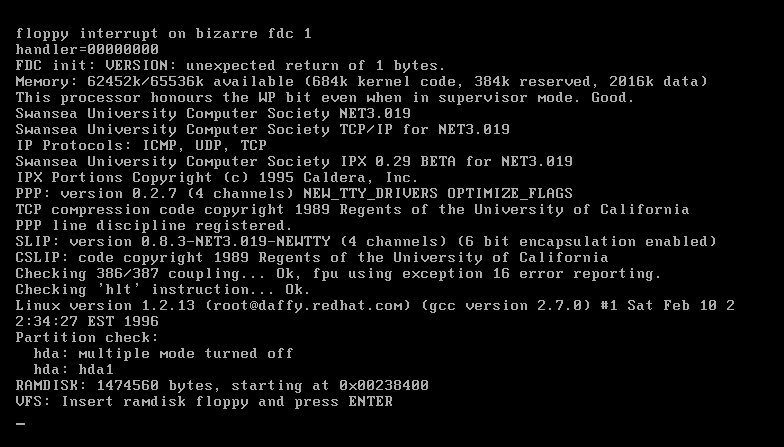

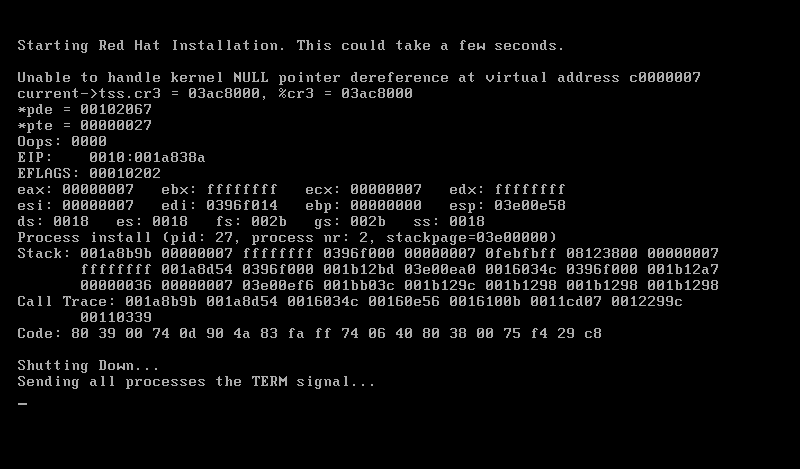

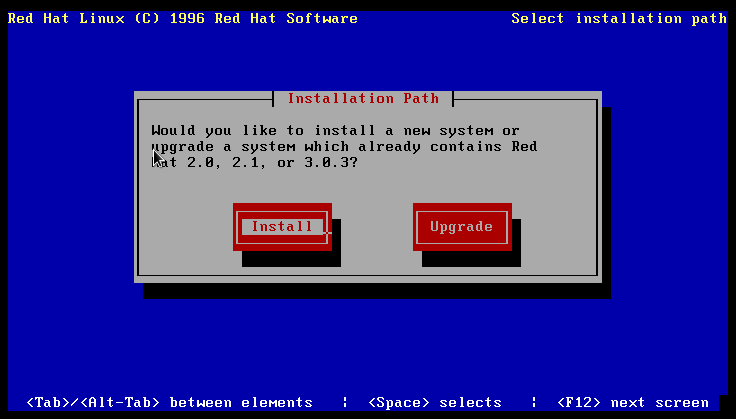

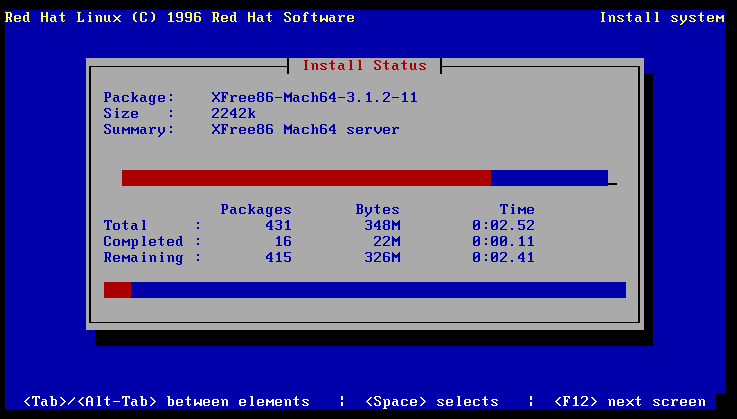

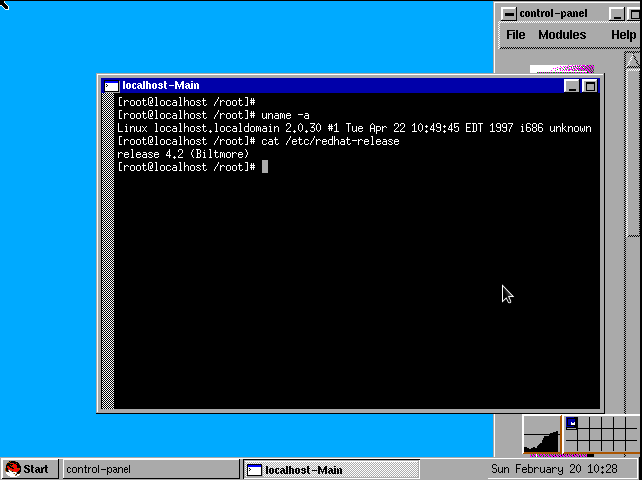

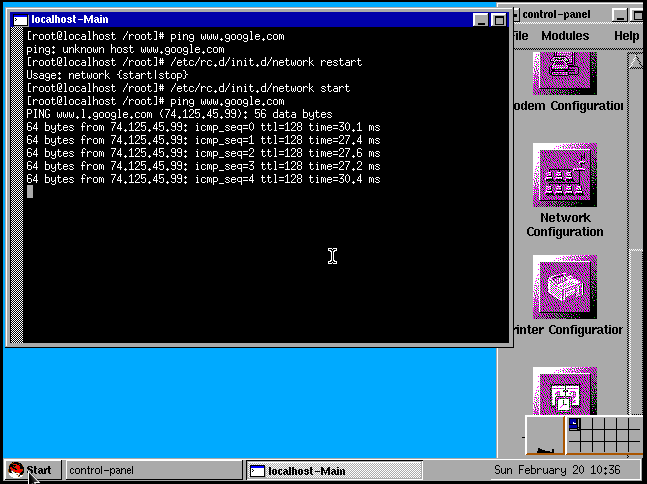

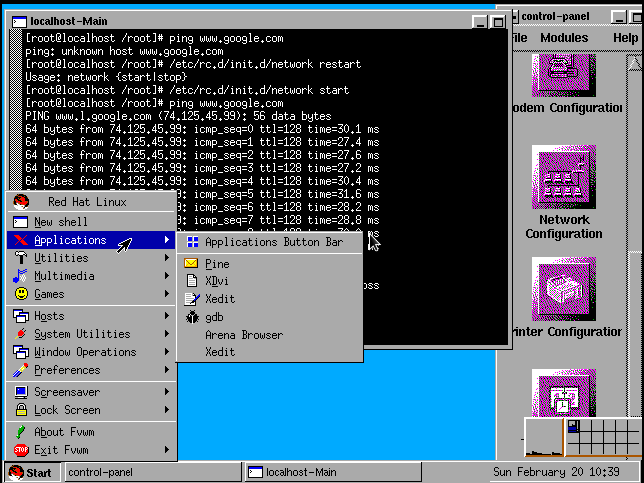

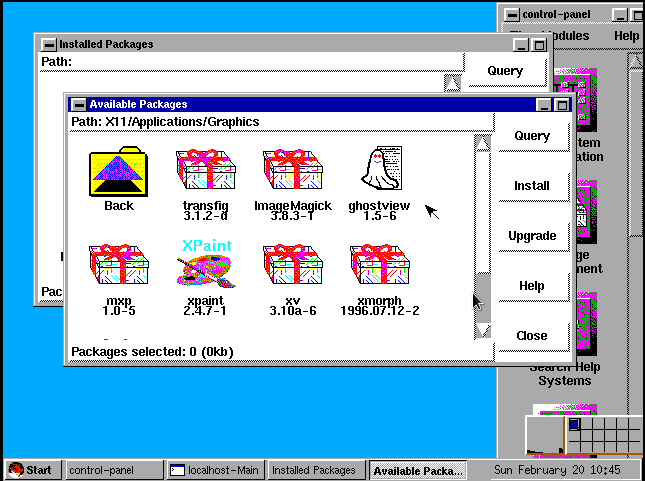

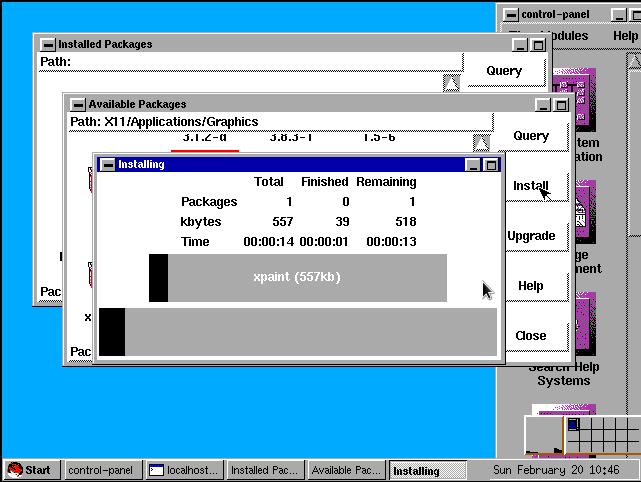

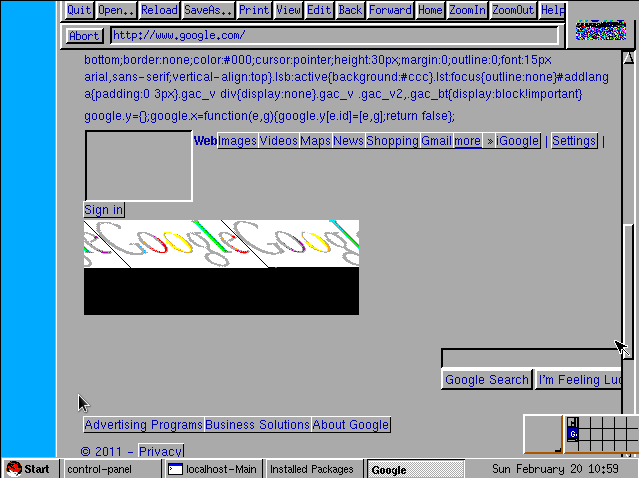

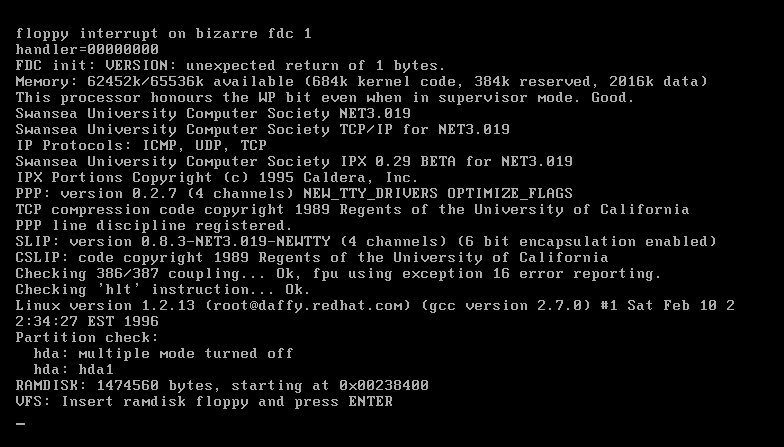

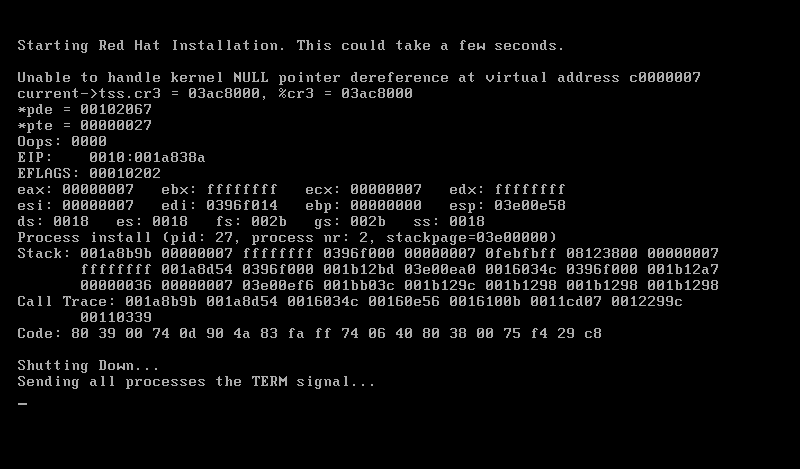

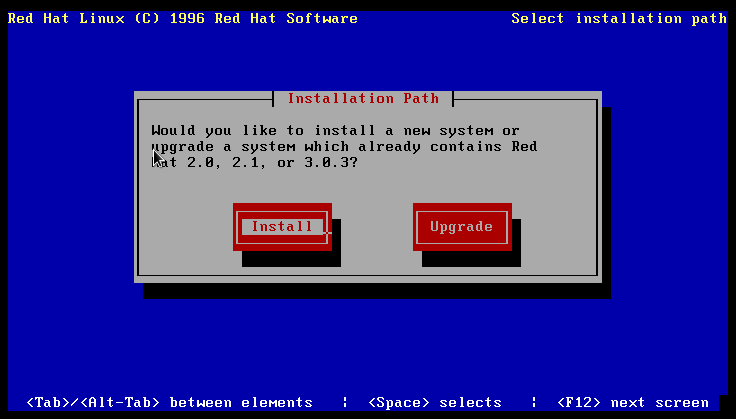

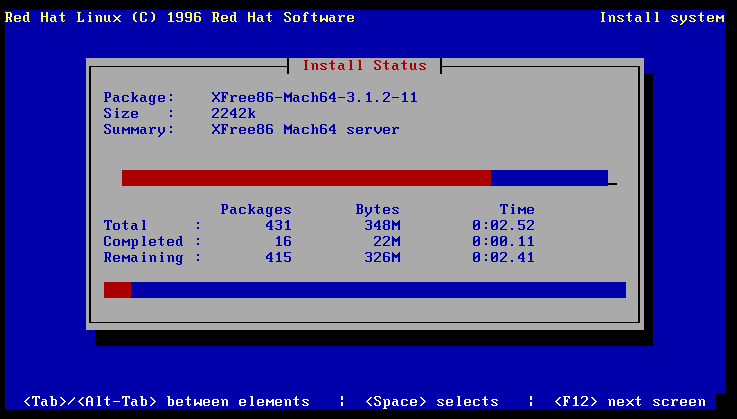

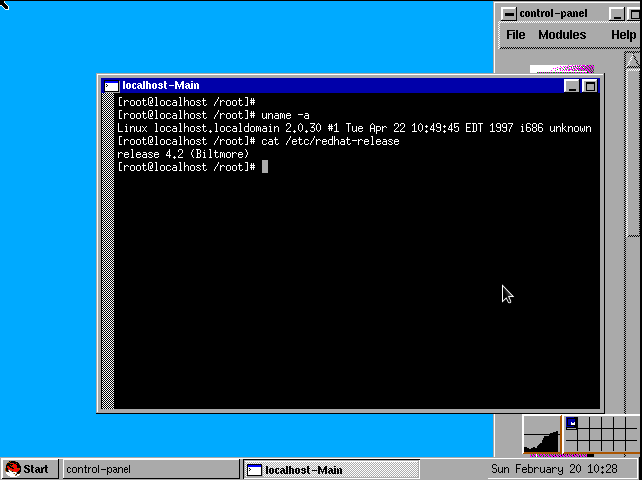

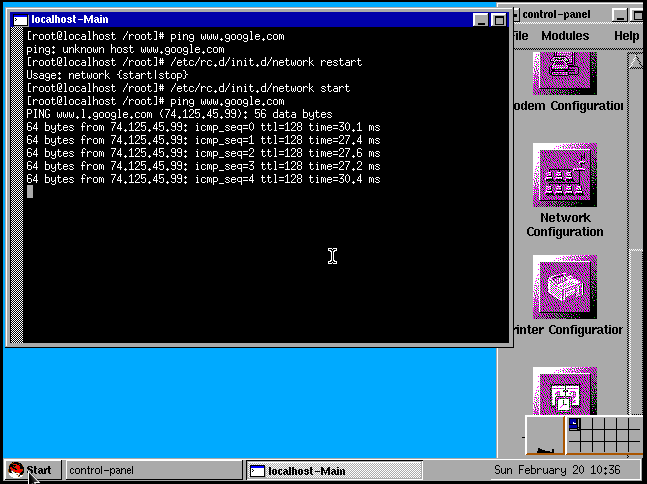

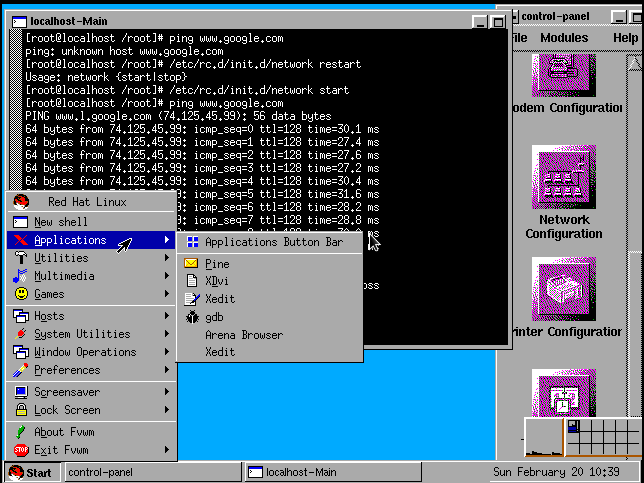

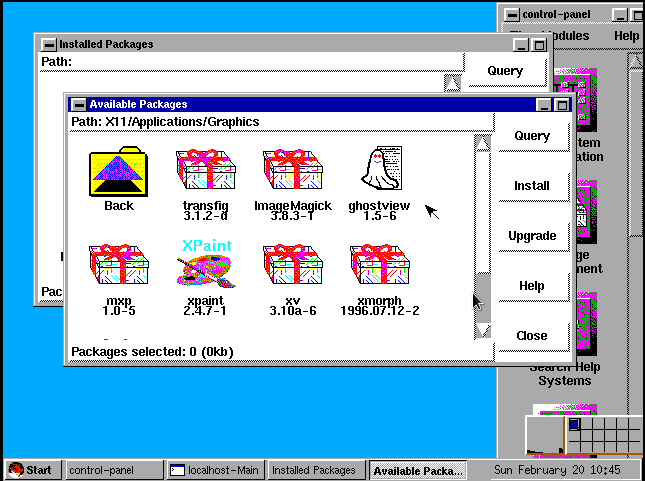

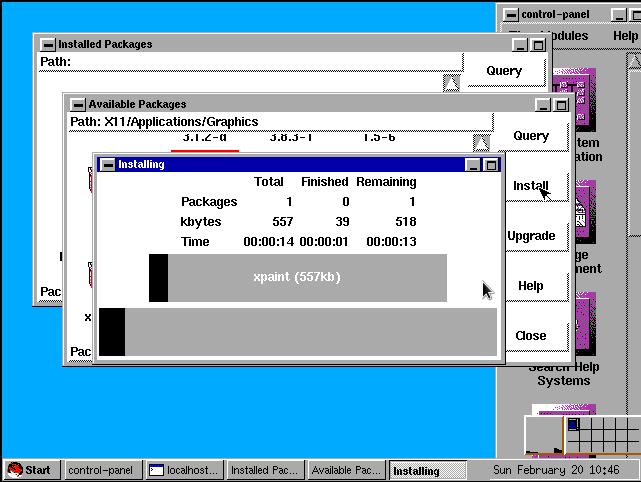

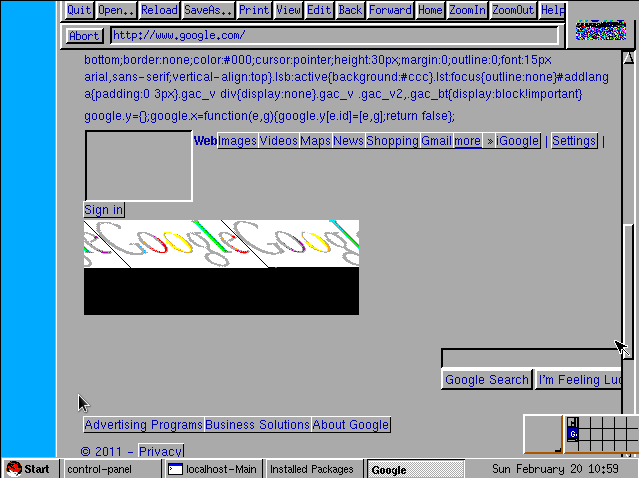

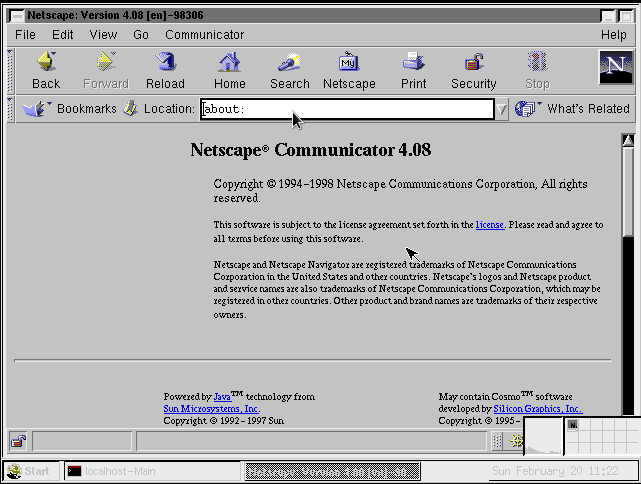

- Back in the Day: Red Hat Linux 3.0.3 - 4.2, 1996-1997, by Anderson Silva

- Taking the Pain Out of Authentication, by Yvo Van Doorn

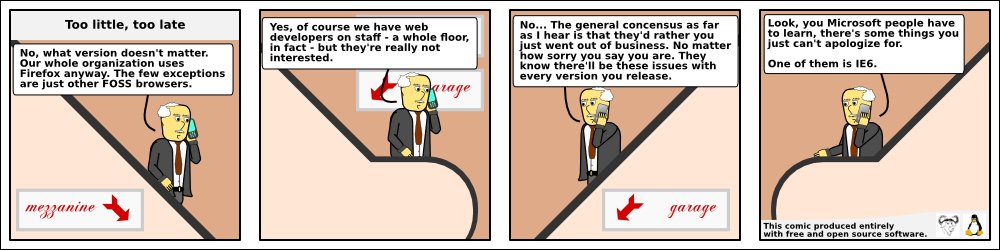

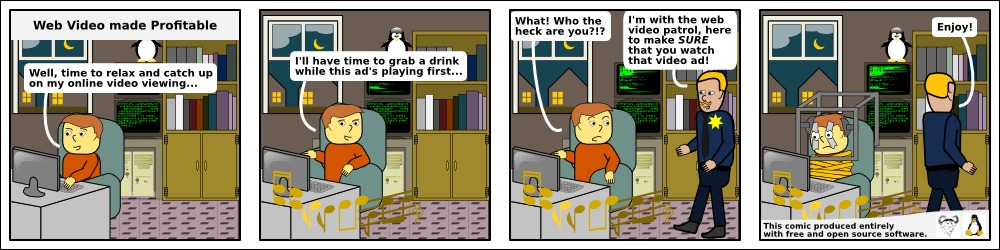

- HelpDex, by Shane Collinge

- XKCD, by Randall Munroe

- Doomed to Obscurity, by Pete Trbovich

News Bytes

By Deividson Luiz Okopnik and Howard Dyckoff

|

Contents:

|

Selected and Edited by Deividson Okopnik

Please submit your News Bytes items in

plain text; other formats may be rejected without reading.

[You have been warned!] A one- or two-paragraph summary plus a URL has a

much higher chance of being published than an entire press release. Submit

items to bytes@linuxgazette.net. Deividson can also be reached via twitter.

News in General

Next Ubuntu will shed 'Netbook' Edition

Next Ubuntu will shed 'Netbook' Edition

The next release of Ubuntu, code-named Natty Narwal, is expected in

the next few weeks, but there will be only 2 major platforms targeted.

The development of future Ubuntu distributions will merge into a

single version of Ubuntu for desktops and netbooks, known simply as

"Ubuntu" for Version 11.04. See more details in the Distros section,

below.

20th Anniversary of Linux Celebrations Kick Off at the LF Summit

20th Anniversary of Linux Celebrations Kick Off at the LF Summit

The Linux community will come together to celebrate 20 years of Linux.

In August of 1991, Linux creator Linus Torvalds posted what today is a

famous or infamous

message

to share with the world that he was building a new operating

system. The Linux Foundation will kick-off months of 20th Anniversary

celebrations starting at the Collaboration Summit in San Francisco,

April 5-8. Attendees will learn what else is in store for the months

leading up to the official celebration in August of 2011.

The Linux Foundation invites everyone in the Linux community to participate in

celebrating this important milestone. There will be a variety of ways

to get involved, including an opportunity to record a personal message

to the rest of the community about Linux' past, present and/or future

in the the "20th Anniversary Video Booth." The Booth will be located

in the lobby area of the Collaboration Summit, and the messages will

be compiled into a display to be shared online and at LinuxCon in

August.

"The Linux Foundation Collaboration Summit continues to be one of our

most important events of the year.," said Jim Zemlin, executive

director at The Linux Foundation. "The Summit will showcase how Linux

is maturing while kicking off an important year for the OS that will

include 20th anniversary celebrations where people from throughout the

community can participate online or at our events in a variety of

different ways."

Android Developers Confident about Oracle-Google Litigation

Android Developers Confident about Oracle-Google Litigation

Attendance at AnDevCon in March was almost a sellout and indicative of

the rapid growth of Android in the Open Source Community. Google IO

did sell out in less than an hour of open registration. At this

event, 36% of attendees came from organizations with more than 1000

developers and about 50% came from organizations with more than 100

developers.

Oracle sued Google in August of 2010 over its possible use of Java

source code in Android without licensing.

During the conference, representatives from Black Duck and competing

firm Protocode both told LG that most large firms involved in

developing Android apps seem relatively unconcerned about the legal

battle between Oracle and Google. Many of these issues have played

out before for Linux veterans and a relative calm pervades the Android

community.

Said Peter Vescuso of Black Duck software, "Google is the goat to

sacrifice at Oracle's altar", meaning that most developers do not

expect any serious legal threats to be directed at them.

He added that many of their customers were using Android, including 8

of 10 biggest hand set makers. "The most shocking thing about the law

suit is that there is almost no reaction from customers; it's not

their problem."

Black Duck made an analysis of 3800 mobile open source companies in

2010. This has been roughly doubling every year since 2008. When Black

Duck looked at development platform, 55% listed Android, 39% listed IOS,

which was not surprising as even iPhone apps use chunks of open source

code. Android has the advantage of being GPL-based, while IOS is not.

According to Protocode's Kamil Hassin, some of their smaller

Android customers have been hesitant about the potential outcome of

Oracle's litigation but many are now moving more into Andriod

development.

He noted that Black Duck focused on bigger companies with large code

bases, while Protocode's products were lighter weight and focused more

on where FOSSw occured internally, especially code from Apache Ant,

Derby, MySQL, and even more on PostgreSQL.

Google Delays Honeycomb Delivery to Open Source

Google Delays Honeycomb Delivery to Open Source

Google will delay releasing its Honeycomb source code for the new

series of Android-based tablet computers as it works on bugs and

hardware compatibility issues. Honeycomb is called Android 3.0, while

mobile phone developers are expecting the release of Android 2.4

shortly.

Google has indicated that some UI improvements will be back-ported to

Android phones in 2.4.

Speaking with Bloomberg News on March 25th, Andy Rubin, Google's

top honcho for Android development, said that they would not release the

source code for the next several months

because there was a lot more work to do to make the newer OS ready for

"other device types including phones". Instead, source code access

will remain only with major partners such as HTC and Motorola.

Developer blogs initially showed mixed opinions of the delay, with a

few developers recognizing that Google was trying to get a better and

more consistant user-experience for future Android tablets, especially

less expensive models that will come from small third world

manufacturers.

Oracle dropping future Itanium development for its Database

Oracle dropping future Itanium development for its Database

In March, Oracle announced that it would discontinue development work

for future versions of the Oracle database on Intel Itanium RISC

platform. In a press statement, Oracle said, "Intel management made

it clear that their strategic focus is on their x86 microprocessor and

that Itanium was nearing the end of its life."

Oracle added, "Both Microsoft and RedHat have already stopped

developing software for Itanium."

Oracle pointed to trends that show no real growth in Itanium

platforms, which are almost exclusively sold by its rival HP. Intel

publically defended its chip platform and said work would continue on

the next 2 generations of Itanium - Poulsen and Kittson, but did not

state clear support for the future of Itanium after that.

Oracle support would continue for current versions of its database

running on Itanium for the next several years, but would be stopping

development on new versions. This would have a negative effect on

future sales of HP SuperDome servers and force current customers to

reconsider strategic vendor relationships.

According to a blog at Forrester Research, "Oracle's database is a

major workload on HP's Itanium servers, with possibly up to 50% of

HP's flagship Superdome servers running Oracle. A public statement

from Oracle that it will no longer develop their database software

will be a major drag on sales for anyone considering a new Oracle

project on HP-UX and will make customers and prospects nervous about

other key ISV packages as well."

For more Forrester analysis of this development, go

here.

Oracle revenues and profits were up for the first quarter of 2011

and exceeded analysts' expectations. Total revenue for the quarter

ending Feb. 28 gained 37 percent to $8.76 billion.

Oracle CEO Larry Ellison said that the products and IP acquired from

Sun Microsystems get the lion's share of the credit. Ellison noted

that revenue from the Sun-built Exadata database server - which he

claimed was the fastest database server in the world - has doubled

to $2 billion.

APT Breach at RSA may undermine SecureID

APT Breach at RSA may undermine SecureID

Security stalwart and edifice RSA, the home of the ubiquitous RSA

algorithm and provider of 2-factor security devices, announced that it

had been breached by sophisticated attackers who were after the

Intellectual Property used in such devices. RSA has filed an 8-K

SecureCare document and the Federal Government is investigating the

incidents. In the first few days that followed, RSA did not offer

details about the exploit or the specifics of what was taken.

The announcement of the breach came on St. Patrick's Day and led to a

lot of reflection by network and systems security experts. In the

forefront of many on-line comments is concern for the very large

customer base around the world using RSA's SecureID products.

RSA said:

"Our investigation has led us to believe that the attack is in the

category of an Advanced Persistent Threat (APT). Our investigation

also revealed that the attack resulted in certain information being

extracted from RSA's systems. Some of that information is specifically

related to RSA's SecurID two-factor authentication products. While at

this time we are confident that the information extracted does not

enable a successful direct attack on any of our RSA SecurID customers,

this information could potentially be used to reduce the effectiveness

of a current two-factor authentication implementation as part of a

broader attack. We are very actively communicating this situation to

RSA customers and providing immediate steps for them to take to

strengthen their SecurID implementations."

LG spoke with Steve Shillingford, president and CEO, Solera Networks,

who pointed to the value of Network Forensics in this type of attack

and explained that, "RSA is able to say specifically what happened -

Advanced Persistent Threat (APT) - and what was and was not

breached - SecurID code, but no customer records. Compare RSA to the

many companies breached in the Aurora attacks, and the difference in

insight is striking. Intel had stated that though they knew they had

been breached they had no specific evidence of IP taken - very

different than the evidence that RSA cites."

Shillingford also told LG: "Many companies and organizations are using

NF for complete network visibility and situational awareness, to limit

the kind of exposure RSA is noting. NF enables superior security

practice, which is especially important when you're working with

private customer security records and systems, in this case. It is

difficult for customers to put trust in an organization that has

difficulty calculating the extent of an attack and its source,

especially today."

Shillingford recommended that RSA take the following steps:

- Take steps to further obfuscate the SecurID code that was breached,

to limit what can be done with the compromised information

- Take an 'open' approach, recognizing that the exposed code will

produce reaction/response that accomplishes tighter SecurID security.

Securosis, another security firm, drew similar lessons in a company

blog and asked questions such as "Are all customers [e]ffected or only

certain product versions and/or configurations?"

Securosis recommended waiting a few days if you use SecureID for

banking or at other financial institutions and then requesting updated

software and security tokens. RSA is already offering their customers

advice on hardening SecureID transactions, including the obvious

lengthening and obscuring of passwords used with 2-factor

authentication. See their blog posting at:

http://securosis.com/blog/rsa-breached-secureid-affected.

And NSS Labs, a security testing service, recommends that

organizations using SecureID to protect sensitive information should

consider eliminating remote access until all issues are resolved.

NSS Labs has prepared an Analytical Brief on the RSA situation and

points that need to be taken into consideration by enterprises needing

to protect critical information. That brief is available here:

http://www.nsslabs.com/research/analytical-brief-rsa-breach.html.

RSA Conference Offers Multiple Data Breach Reports

RSA Conference Offers Multiple Data Breach Reports

Multiple reports on the state of cyber-security were available at this

year's RSA security conference and the conclusions are that the trends

are getting worse. And this was before RSA itself was breached.

Every year, Verizon Business publishes the Data Breach Investigations

Report (DBIR), which presents a unique and detailed view into how

victims get breached, as well as how to prevent data breaches. It is

released early in the year, usually in time for the RSA security

conference or just preceeding it.

Much of this data is collected by the Veris website, an anonymous data

site maintained by ICSA Labs and Verizon, located

here.

In order to better to facilitate more information sharing on security

incidents, Verizon released VERIS earlier this year for free public

use.

Data breaches continue to plague organizations worldwide, and the DBIR

series, with additional info from the US Secret Service, now spans

six years, more than 900 breaches, and over 900 million compromised

records. The joint goal is to distinguish who the attackers are, how

they're getting in, and what assets are targeted.

Two shocking stats from the 2010 DBIR were:

- 86% of victims had evidence of the breach in their log files;

- 96% of breaches were avoidable through simple or intermediate

controls (+9%).

This leads to the conclusion that simple scans and basic data mining

from these logs could alert most organizations to anomalous activity.

Find the Executive Summary of the 2010 Data Breach Investigations

Report

here.

Organizations are encountering more cybersecurity events but the

events, on average, are costing significantly less than in the

previous year, according to the 2011 CyberSecurity Watch Survey

conducted by CSO magazine, and sponsored by Deloitte. Twenty-eight

percent of respondents have seen an increase in the number of events

in the 2011 study and 19% were not impacted by any attacks, compared

to 40% in the 2010 study.

More than 600 respondents, including business and government

executives, professionals and consultants, participated in the survey that is

a cooperative effort of CSO, the U.S. Secret Service,

the Software Engineering Institute CERT Program at Carnegie Mellon

University and Deloitte.

The 2011 CyberSecurity Watch Survey uncovered that more attacks (58%)

are caused by outsiders (those without authorized access to network

systems and data) versus 21% of attacks caused by insiders (employees

or contractors with authorized access) and 21% from an unknown source;

however 33% view the insider attacks to be more costly, compared to

25% in 2010. Insider attacks are becoming more sophisticated, with a

growing number of insiders (22%) using rootkits or hacker tools

compared to 9% in 2010, as these tools are increasingly automated and

readily available.

Another cybersecurity threat are increasing cyber-attacks from foreign

entities, which has doubled in the past year from 5% in 2010 to 10% in

2011.

By mid-2010, Facebook recorded half a billion active users, Not

surprisingly, this massive user base is heavily targeted by scammers

and cybercriminals, with the number and diversity of attacks growing

steadily throughout 2010 - malware, phishing and spam on social

networks have all continued to rise in the past year.

The survey found that:

- 40% of social networking users quizzed have been sent malware

such as worms via social networking sites, a 90% increase since

April 2009;

- Two thirds (67%) say they have been spammed via social

networking sites, more than double the proportion less than two

years ago;

- 43% have been on the receiving end of phishing attacks, more

than double the figure since April 2009.

The US continues to be the home of most infected web pages. However,

over the past six months alone, European countries have become a more

abundant source of malicious pages, with France in particular

displacing China from the second spot, increasing its contribution

from 3.82% to 10.00% percent of global malware-hosting websites.

The full Security Threat Report 2011 contains much more information

and statistics on cybercrime in 2010, as well as predictions for

emerging trends, and can be

downloaded free of charge.

Apple Revenue to Surpass IBM and HP

Apple Revenue to Surpass IBM and HP

According a Bloomberg News interview with Forrester founder and CEO

George Colony, Apple revenues, fueled by mobile 'apps', may grow about

50% this year. At that rate, Apple's revenues would surpass IBM's in

2011 and then HP's in 2012. In 2010, Apple's market valuation passed

that of Microsoft.

Several analysts surveyed by Bloomberg think that Apple's revenue will

exceed $100 billion, IBM's current revenue, with a growing share of

its revenue coming from internet apps. Colony also thought the trend

to such mini-applications may undermine revenues for other companies

from websites and web advertising. This could spell some trouble for

Google and Yahoo, among others, although the Android market place for

apps is catching up to Apple's.

But Colony also saw a downside to Apple's reliance on iconic Steve

Jobs to get the best designs and technology into future Apple

products. "Remember, every two years they have to fill that store

with new stuff. Without Steve Jobs as the CEO, I think it will be

much harder for them to do that. That would be a massive, massive hit

to the valuation."

See the Bloomberg article here.

Conferences and Events

- SugarCon '11 - SugarCRM Developer Conference

-

April 4-6, 2011, Palace Hotel, San Francisco, CA

http://www.sugarcrm.com/crm/events/sugarcon.

- Storage Networking World (SNW) USA

-

April 4-7 2011, Santa Clara, California

http://www.snwusa.com/.

- Linux Foundation Collaboration Summit 2011

-

April 6-8, Hotel Kabuki, San Francisco, CA

http://events.linuxfoundation.org/events/collaboration-summit.

- Impact 2011 Conference

-

April 10-15, Las Vegas, NV

http://www-01.ibm.com/software/websphere/events/impact/.

- Embedded Linux Conference 2011

-

April 11-13, Hotel Kabuki, San Francisco, Calif.

http://events.linuxfoundation.org/events/embedded-linux-conference.

- MySQL Conference & Expo

-

April 11-14, Santa Clara, CA

http://en.oreilly.com/mysql2011/.

- Splunk>Live! North America

-

April 12, 2011, Westin Buckhead, Atlanta, Georgia

http://live.splunk.com/forms/SL_Atlanta_041211.

- Splunk>Live! Australia

-

April 12, 2011, Park Hyatt, Melbourne, Victoria

http://www.splunk.com/goto/SplunkLive_Melbourne_Aug09.

- For other Splunk>Live! events in April and May, see:

-

http://www.splunk.com/page/events.

- Ethernet Europe 2011

-

April 12-13, London, UK

http://www.lightreading.com/live/event_information.asp?event_id=29395.

- Cloud Slam - Virtual Conference

-

April 18-22, 2011 , On-line

http://www.cloudslam.org.

- O'Reilly Where 2.0 Conference

-

April 19-21, 2011, Santa Clara, CA

http://where2conf.com/where2011.

- InfoSec World 2011

-

April 19-21, 2011 in Orlando, FL.

http://www.misti.com/infosecworld.

- Lean Software and Systems Conference 2011

-

May 3-6, 2011, Long Beach California

http://lssc11.leanssc.org/.

- Red Hat Summit and JBoss World

-

May 3-6, 2011, Boston, MA

http://www.redhat.com/promo/summit/2010/.

- USENIX/IEEE HotOS XIII - Hot Topics in Operating Systems

-

May 8-10, Napa, CA

http://www.usenix.org/events/hotos11/.

- Google I/O 2011

-

May 10-11, Moscone West, San Francisco, CA

[Sorry - this conference filled in about an hour - We missed it too!].

- OSBC 2011 - Open Source Business Conference

-

May 16-17, Hilton Union Square, San Francisco, CA

http://www.osbc.com.

- Scrum Gathering Seattle 2011

-

May 16-18, Grand Hyatt, Seattle, WA.

http://www.scrumalliance.org/events/285-seattle.

- RailsConf 2011

-

May 16-19, Baltimore, MD

http://en.oreilly.com/rails2011.

- FOSE 2011.

-

May 19-21, Washington, DC

http://fose.com/events/fose-2010/home.aspx.

- Citrix Synergy 2011.

-

May 25-27, Convention Center, San Francisco, CA

http://www.citrixsynergy.com/sanfrancisco/.

- USENIX HotPar '11- Hot Topics in Parallelism

-

May 26-27, Berkeley, CA

http://www.usenix.org/events/hotpar11/.

- LinuxCon Japan 2011

-

June 1-3, Pacifico Yokohama, Japan

http://events.linuxfoundation.org/events/linuxcon-japan.

- Semantic Technology Conference

-

June 5-9, 2011, San Francisco, CA

http://www.semanticweb.com.

- The Open Mobile Summit 2011

-

June 8-9 London, UK

https://www.openmobilesummit.com/.

- Ottawa Linux Symposium (OLS)

-

June 13 - 15 2011, Ottawa, Canada

http://www.linuxsymposium.org/2011/.

- Creative Storage Conference

-

June 28, 2011 in Culver City, CA.

http://www.creativestorage.org.

- Mobile Computing Summit 2011

-

June 28-30, Burlingame, California

http://www.netbooksummit.com/.

Distro News

Natty Narwhal Alpha 3 will become Ubuntu 11.04

Natty Narwhal Alpha 3 will become Ubuntu 11.04

Alpha 3 is the third in a series of milestone CD images that will be

released throughout the Natty development cycle. The 11.04 version of

Ubuntu is expected some time in April or early May.

In March, Cannonical announced that Ubuntu releases from 11.4 would no

longer have separate netbook editions. The desktops will be

standardized for all users and simply be called Ubuntu 11.04, and the

server edition will be called Ubuntu Server 11.04.

Pre-releases of Natty are not recommended for anyone needing a stable

system or anyone who is not comfortable with occasional or frequent

lockup or breakage. They are, however, recommended for Ubuntu

developers and those who want to help in testing and reporting and

fixing bugs in the 11.04 release.

New packages showing up in this release include:

- LibreOffice 3.3.1

- Unity 3.6.0

- Linux Kernel 2.6.38-rc6.

- Upstart 0.9

- Dpkg 1.16.0-pre + multi-arch snapshot

The Alpha images are claimed to be reasonably free of show-stopper CD

build or installer bugs, while representing a very recent snapshot of

Natty. You can download it here:

Ubuntu Desktop and

Server

Ubuntu Server

for UEC and EC2.

openSUSE 11.4 now out

openSUSE 11.4 now out

In March, the 11.4 version of the openSUSE brought significant

improvements along with the latest in Free Software applications.

Combined with new tools, projects and services around the release,

11.4 shows growth for the openSUSE Project.

11.4 is based around Kernel 2.6.37 which improves the scalability of

virtual memory management and separation of tasks executed by terminal

users, leading to better scalability and performance and less

interference between tasks. The new kernel also brings better hardware

support, with open Broadcom Wireless drivers, improved Wacom support

and many other new or updated drivers. It also supports the

improvements to graphic drivers in the latest Xorg and Mesa shipped,

so users will enjoy better 2D and 3D acceleration.

New tools for an enhanced boot process. The latest gfxboot 4.3.5

supports VirtualBox and qemu-kvm while Vixie Cron has been replaced

with Cronie 1.4.6 supporting the PAM and the SELinux security

frameworks. More experimental software options include GRUB2 and

systemd.

The KDE Plasma Desktop 4.6 introduces script-ability to window manager

KWin and easier Activity management as well as improvements to network

and bluetooth handling. Stable GNOME 2.32 improves usability and

accessibility. 11.4 also has GNOME Shell, part of the upcoming GNOME3,

available for testing.

Firefox 4.0, first to ship in 11.4, introduces a major redesign of

the user interface with tabs moved to the top of the toolbar, support

for pinning of tabs and more. Firefox Sync synchronizes bookmarks,

history, passwords and tabs between all your installations. Firefox 4

also supports newer web standards like HTML5, WebM and CSS3. Version

11.4 of openSUSE includes up to date Free Software applications as

it's the first major distribution to ship LibreOffice 3.3.1 with

features like import and edit SVG files in Draw, support for up to 1

million rows in Calc and easier slide layout handling in Impress. 11.4

also debuts the result of almost 4 years of work with the Scribus 1.4

release based on Qt 4 and Cairo technology. Improved text rendering,

undo-redo, image/color management and vector file import are

highlights of this release.

11.4 brings the latest virtualization stack with Xen 4.0.2 introducing

memory overcommit and a VMware Workstation/player driver, VirtualBox

4.0.4 supporting VMDK, VHD, and Parallels images, as well as resizing

for VHD and VDI and KVM 0.14 with support for the QEMU Enhanced Disk

format and the SPICE protocol. As guest, 11.4 includes open-vm-tools

and virtualbox-guest-tools, and seamlessly integrates clipboard

sharing, screen resizing and un-trapping your mouse.

openSUSE is now available for immediate

download.

Gentoo Linux LiveDVD 11.0

Gentoo Linux LiveDVD 11.0

Gentoo Linux announced the availability of a new LiveDVD to celebrate

the continued collaboration between Gentoo users and developers. The

LiveDVD features a superb list of packages, among which are Linux

Kernel 2.6.37 (with Gentoo patches), bash 4.1, glibc 2.12.2, gcc

4.5.2, python 2.7.1 and 3.1.3, perl 5.12.3, and more.

Desktop environments and window managers include KDE SC 4.6, GNOME

2.32, Xfce 4.8, Enlightenment 1.0.7, Openbox 3.4.11.2, Fluxbox 1.3.1,

XBMC 10.0 and more.

Office, graphics, and productivity applications include OpenOffice

3.2.1, XEmacs 21.5.29 gVim 7.3.102, Abiword 2.8.6, GnuCash 2.2.9,

Scribus 1.9.3, GIMP 2.6.11, Inkscape 0.48.1, Blender 2.49b, and much

more.

The LiveDVD is available in two flavors: a hybrid x86/x86_64 version,

and an x86_64 multilib version. The livedvd-x86-amd64-32ul-11.0

version will work on 32-bit x86 or 64-bit x86_64. If your CPU

architecture is x86, then boot with the default gentoo kernel. If your

arch is amd64 then boot with the gentoo64 kernel. This means you can

boot a 64bit kernel and install a customized 64bit userland while

using the provided 32bit userland. The livedvd-amd64-multilib-11.0

version is for x86_64 only.

Software and Product News

New Firefox 4 available for Linux and other platforms

New Firefox 4 available for Linux and other platforms

Mozilla has released Firefox 4, the newest version of the popular,

free and open source Web browser.

Firefox 4 is available to download for Windows, Mac OS X and Linux in

more than 80 languages. Firefox 4 will also be available on Android

and Maemo devices soon.

Firefox 4 is the fastest Firefox yet. With dramatic speed and

performance advancements across the board, Firefox is between two and

six times faster than previous releases.

The latest version of Firefox also includes features like App Tabs and

Panorama, industry-leading privacy and security features like Do Not

Track and Content Security Policy to give users control over their

personal data. Firefox Sync gives users access to their history,

bookmarks, open tabs and passwords across computers and mobile

devices.

Firefox supports modern Web technologies, including HTML5. These

technologies are the foundation for building amazing websites and Web

applications.

Novell Offers Free Version of Sentinel Log Manager

Novell Offers Free Version of Sentinel Log Manager

Novell is offering a free version of its Sentinel Log Manager. The new

version will allow customers to leverage key capabilities of Sentinel

Log Manager including log collection at 25 events per second, search

and reporting capabilities for compliance requirements and security

forensics. Customers can easily upgrade to a full license that

supports additional features such as distributed search and log

forwarding.

The release of the free version of Novell Sentinel Log Manager makes

it simpler for customers to start using log management as a tool to

improve security and ensure regulatory compliance. Novell Sentinel Log

Manager includes pre-configured templates to help customers quickly

and easily generate custom compliance reports. Novell Sentinel Log

Manager also uses non-proprietary storage technology to deliver

intelligent data management and greater storage flexibility.

"Strained IT departments are struggling to keep up with stringent

regulatory compliance mandates. With the release of the free version

of Sentinel Log Manager, Novell is showing a strong commitment to

reducing the burden of regulatory compliance," said Brian Singer,

senior solution manager, Security, Novell. "Log management also

provides the foundation for user activity monitoring, an important

capability that's becoming mandatory to combat emerging threats."

Sentinel Log Manager is an easy-to-deploy log management tool that

provides higher levels of visibility into IT infrastructure

activities. Built using SUSE Studio Novell's web-based appliance

building solution, Sentinel Log Manager helps customers significantly

reduce deployment cost and complexity in highly distributed IT

environments.

Novell Sentinel Log Manager is the one of the latest products to ship

from Novell's Security Management product roadmap for the intelligent

workload management market. Novell Sentinel Log Manager easily

integrates with Novell Sentinel and the Novell Identity Management

suite, providing organizations with an easy path to user activity

monitoring and event and information management.

The free download of Sentinel Log Manager is

here.

Oracle Enhances MySQL Enterprise Edition

Oracle Enhances MySQL Enterprise Edition

An update to MySQL 5.5 Enterprise Edition is now available from

Oracle.

New in this release is the addition of MySQL Enterprise Backup and

MySQL Workbench along with enhancements in MySQL Enterprise Monitor.

Recent integration with MyOracle Support allows MySQL customers to

access the same support infrastructure used for Oracle Database

customers. Joint MySQL and Oracle customers get faster problem

resolution by using a common technical support interface.

With support for multiple operating systems, including Linux,

Solaris, and Windows, MySQL Enterprise Edition can enable customers

to achieve significant TCO savings over Microsoft SQL Server.

MySQL Enterprise Edition is a subscription offering that includes

MySQL Database plus monitoring, backup and design tools, with 24x7,

worldwide customer support.

Rackspace Powers 500 Startups, Touches iPad

Rackspace Powers 500 Startups, Touches iPad

Kicking Off SXSW Interactive in March, Rackspace Announced its

Rackspace Startup Program and also the availability of Rackspace Cloud

2.0 for iPad, iPhone and iPod.

The Rackspace Startup Program provides cloud computing resources to

startups participating in programs such as 500 Startups,TechStars, Y

Combinator and General Assembly. Through the program Rackspace will

provide one-on-one guidance and cloud compute resources to help power

these startups to success.

In addition to the startup program, Rackspace also announced the

availability of its application, Rackspace Cloud 2.0, now available on

the iPhone, iPad and iPod Touch. It is free of charge and available

via iTunes.

The new application allows system administrators a life away from

their computer to manage the Rackspace Cloud and customize OpenStack

clouds while on the go. In addition, the application allows quick

access to Rackspace Cloud Servers, Cloud Files and supports multiple

Rackspace cloud accounts, including connecting to the UK and US

Clouds. The new application includes Chef integration helping automate

many processes and saving system administrators hours of time and is

one of the first to run on Apple's iOS 4.3, connecting audio and video

on Cloud Files from the application directly to Apple TV.

Rackspace Hosting is a specialist in the hosting and cloud computing

industry, and the founder of OpenStack, an open source cloud platform.

Linpus demos MeeGo-based tablet solution at CeBIT

Linpus demos MeeGo-based tablet solution at CeBIT

Linpus, a provider of open source operating system solutions, demoed

its MeeGo-based tablet solution at CeBIT in March. Linpus has created

a ready-to-go tablet solution, based around MeeGo and Intel Atom

processors, with a custom user interface and application suite.

Linpus originally announced its solution, Linpus Lite for MeeGo

Multi-Touch Edition, last November and has since been working to

improve the performance and stability of the product. The solution is

aimed at OEMs and ODMs looking to get a tablet product in the market

as quickly as possible.

Linpus created their own user interface, with an emphasis on

multi-touch interaction, on top of MeeGo. It includes an icon based

application launcher and an additional desktop for organising media

and social streams. Linpus also developed a number of custom

applications, which have all been written in Qt: E-reader, Media

Player, Webcam, Media Sharing, Photo Viewer, Browser, Personalization

and Virtual Keyboard that supports 17 languages and can be customized.

See screen shots here.

The solution is MeeGo compliant, which means users will be able to

take advantage of the Intel AppUp store for additional content. Linpus

is not delivering a device itself, rather it would provide its

software to a device manufacturer looking to create a tablet product.

The minimum specifications for such a device would be an Intel Atom

(Pinetrail or Moorestown), 512 MB RAM, at least 3GB of storage (hard

disk or SSD), a 1024 x 600 display, WiFi/Bluetooth and 3G

connectivity.

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/dokopnik.jpg)

Deividson was born in União da Vitória, PR, Brazil, on

14/04/1984. He became interested in computing when he was still a kid,

and started to code when he was 12 years old. He is a graduate in

Information Systems and is finishing his specialization in Networks and

Web Development. He codes in several languages, including C/C++/C#, PHP,

Visual Basic, Object Pascal and others.

Deividson works in Porto União's Town Hall as a Computer

Technician, and specializes in Web and Desktop system development, and

Database/Network Maintenance.

Howard Dyckoff is a long term IT professional with primary experience at

Fortune 100 and 200 firms. Before his IT career, he worked for Aviation

Week and Space Technology magazine and before that used to edit SkyCom, a

newsletter for astronomers and rocketeers. He hails from the Republic of

Brooklyn [and Polytechnic Institute] and now, after several trips to

Himalayan mountain tops, resides in the SF Bay Area with a large book

collection and several pet rocks.

Howard maintains the Technology-Events blog at

blogspot.com from which he contributes the Events listing for Linux

Gazette. Visit the blog to preview some of the next month's NewsBytes

Events.

Linux based SMB NAS System Performance Optimization

By Sagar Borikar and Rajesh Palani

Introduction

SMB NAS systems range from the low end, targeting small offices (SOHO) that

might have 2 to 10 servers, requiring 1 TB to 10 TB of storage, to the high

end, targeting the commercial SMB (Small to Medium Business) market.

Customers are demanding a rich feature set at a lesser cost, given the

relatively cheap solutions available in the form of disk storage, compared

to what was available 5 years ago. One of the key factors in deciding the

unique selling proposition of the NAS (Network Attached Storage) in the

SOHO market is the network and storage performance. Due to extremely

competitive pricing, many NAS vendors are focused on reducing the

manufacturing costs, so one of the challenges is dealing with the scarcity

of system hardware resources without compromising the performance. This

article describes the optimizations (code optimizations, parameter tuning,

etc.) required to support the performance requirements in a

resource-constrained Linux based SMB NAS system.

System Overview

A typical SMB NAS hardware platform contains the following blocks:

1. 500MHz RISC processor

2. 256 MB RAM

3. Integrated Gigabit Ethernet controller

4. PCI 2.0

5. PCI-to-Serial ATA Host Controller

We broadly target the network and storage path in the kernel to

improve the performance. While focusing on the network path

optimization, we look at device level, driver level and stack level

optimization sequentially and with decreasing order of priority.

Before starting the optimization, the first thing to measure is the

current performance for CIFS, NFS and FTP accesses. We can use tools

such as IOzone, NetBench or bonnie++ to characterize the NAS box for

network and storage performance. The system level bottlenecks can be

identified using tools like Sysstat, LTTng or SystemTap that make use

of less intrusive instrumentation techniques. OProfile is another

tool which can be helpful to understand the functional bottlenecks.

1. Networking Optimization

1.1. Device level optimization

Many hardware manufacturers typically add a lot of bells and whistles

in their hardware for accelerating specific functions. The key ones

that have an impact on the NAS system performance are described below.

1.1.1 Interrupt Coalescence

Most of the gigabit Ethernet devices support interrupt coalescence,

which when clubbed with NAPI will provide an improved performance.

(Please refer to driver level optimization later). Choosing the

coalescence value for the best performance is a purely iterative job

and highly dependent on the target application. For the systems which

are targeted for excessive network load of large packets, specifically

jumbo frames, and CPU intensive applications or CPUs with lesser

frequency, this value plays a significant role in reducing the

frequent interruptions of the CPU. Even though there is no rule of

thumb to decide the coalescence value, keeping the following points in

mind for reducing the number of interrupts will be helpful:

- For the systems with the memory size of 256-512 MB, it helps to

choose the smaller value of the coalescence, clubbed with a smaller

budget value (The budget parameter specifies how many packets the

driver is allowed to pass to the network stack on a poll() call).

Note that the coalescence value also depends upon the type of the

interface of the device, viz. Gigabit or Fast Ethernet. For a Gigabit

Ethernet device and 256 MB memory size, interrupt coalescence timeout

value of 64 will be helpful.

- Driver budget parameter will decide the buffering capacity which

needs to be set, keeping in mind the coalescence value, and this could

also be kept at 64 for the above configuration.

1.1.2 MAC Filters

Enabling MAC filters in the device would greatly offload CPU

processing of non-required packets and help to drop the packets at the

device level itself.

1.1.3 Hardware Checksum

Most of the devices support hardware checksum calculation. Setting

CHECKSUM_HW as the CRC calculation will greatly offload the CPU from

the CRC computation and rejection of packets if there is a mismatch.

Note that this is only the physical layer CRC and not the protocol

level.

1.1.4 DMA support

The DMA controller tracks the buffer address and ownership bits for

the transmit and receive descriptors. Based on the availability of

buffers in the memory, the DMA controller will transfer the packets to

and from system memory. This is the standard behavior of any Ethernet

device IP. The performance can be improved by moving the descriptors

to onchip SRAM if available in the processor. As transmit and receive

descriptors would be accessed most frequently, moving them to the SRAM

will help with faster access to the buffers.

1.2 Driver level optimization

This optimization scope is vast and needs to be treated based on

the application requirement. The treatment can be different if the

need is to optimize only for packet forwarding performance, rather

than TCP level performance. Also, it varies based on the system

configuration and resources viz, other important applications making

use of the system resources, DMA channels, system memory and whether

the Ethernet device is PCI based or directly integrated to the System-

on-Chip (SoC) through the system bus. In the DUT, the Ethernet device

was hooked up directly to the system bus through the bridge so we'll

not focus on PCI based driver optimization for now, although to some

extent, this can be applied to PCI devices as well. Most of the NAS

systems would be subject to heavy stress when they are up and running

for several days and a lot of data transfer is going on over the

period. Specifically, with the Linux based NAS systems the free buffer

count keeps on reducing over the period and as the pdflush algorithm

is not optimized in the kernel for this specific use case, and given

that the cache flush mechanism is left to the individual file systems,

it depends upon how efficiently the flushing algorithm is implemented

in the filesystem. It also depends upon how efficient the underlying

hardware is, viz. SATA controller, the disks used etc.Following

factors need to be considered when tweaking the Ethernet device

driver:

1.2.1 Transmit interrupts

We can mask off transmit interrupts and avoid interrupt jitter

occurring because of transmission of the packets. If the transmit

gets blocked, there is not much performance impact on the overall

network process as such, and what matters is only the receive

interrupts.

1.2.2 Error interrupts

Disable the error interrupts in the device unless you really want to

take some action based on the error occurred and pass the status up to

the application level to let it know that there is some problem in the

device.

1.2.3 Memory alignment for descriptors

Even though there are some gaps added through padding in the

descriptors, it always helps to have efficient access to the

descriptors when they are cache line size aligned and bus width

aligned.

1.2.4 Memory allocation for the packets

You may want to make a trade off for memory allocation in the receive

path, viz. either choose to pre-allocate all the buffers, preferably

fixed sized, fixed number of buffers and use them recursively or make

dynamic allocations of buffers on a need basis. Each has its own

advantages and disadvantages. In the prior case, you will consume

memory and other applications in the system can't make use of the

buffers. This may lead to internal fragmentation of the buffers as

well as not knowing the exact size of the packet to be received. But

it will significantly reduce the memory allocation and deallocation of

the buffers in the receive path. As we rely on kernel memory

allocation / deallocation routines, there is a possibility that we may

end up starving or looping in the receive context to get the free

buffers, or if the required memory is not available then it may end up

in page allocation failures. Dynamic memory allocation will

significantly save on memory in a memory constrained system. This will

help other applications to run freely without any issues even when the

system is exposed to the heavy stress of IO. But you will have to pay

the penalty in the time required to allocate and free the buffers at

runtime.

1.2.5 SKB Recycling

Socket buffers(skb) are allocated and freed by the driver on arrival

of packets in standard Ethernet drivers. By implementing SKB

recycling, the sockets are pre-allocated and would be provided to the

driver when requested and would be put back in the recycle queue when

freed in kfree implementation.

1.2.6 Cache coherency

Cache coherency is usually not supported in most of the hardware to

reduce the BOM, but if supported, it makes the system highly

performance driven as software doesn't need to invalidate the cache

line and flush the cache. Especially under heavy IO stress, this can

have a worse impact on the network performance, which will get

propagated to the storage stack as well.

1.2.7 Prefetching

If the underlying CPU supports prefetching, it can help to

dramatically improve the system performance under heavy stress.

Specifically under heavy stress, this helps to improve the performance

by avoiding cache misses. Note that the cache needs to be invalidated

if the coherency is not supported in the hardware, else it will

greatly hamper the performance.

1.2.8 RCU locks

Spin locks / IRQ locks are expensive compared to light weight RCU

locks. The lock/unlock mechanism of RCU locks is much lighter than the

spin locks and helps a lot in performance improvement.

1.3 Stack level optimization

TCP/IP stack parameters are defined considering all the supported

protocols in the network. Based on the priority of the path followed,

we can choose the parameters as per our need. For example, NAS uses

CIFS, NFS protocols primarily for the data transfer.

1.3.1 TCP buffer sizes

We could choose the following parameters:

net.core.rmem_max = 64K

net.core.rmem_default = 109568

While this would provide ample space for the TCP packets to get queued

up in the memory and yield better performance, it eats up the system

memory, and therefore the memory required by other applications in the

system to run smoothly needs to be considered. With low system memory,

following options can be tried out, to check the performance

improvement. Again these changes are highly application dependent and

would not necessarily yield a similar performance improvement as

observed in our DUT.

1.3.2 Virtual Memory (VM) parameters

1.3.2.1 min_free_kbytes

This parameter decides when pdflush will kick in to flush out the

kernel cache to the disk. Setting this value high will spawn the

pdflush threads frequently to flush out the data to the disks. This

will help with freeing the buffers for the rest of the applications,

when the system is exposed to heavy stress. The flip side is that,

this may end up causing the system to thrash and consume CPU cycles

for freeing the buffer more frequently, and hence dropping packets.

1.3.2.2 pdflush threads

The default number of threads in the system is 2. By increasing the

number to 4, we can achieve better performance for NFS. This will help

to flush the data promptly and not get queued up for long, thus making

space available for free buffers for other applications.

2. Storage Optimization

Storage optimization highly depends upon the filesystem used along

with the characteristics of the storage hardware path i.e. the type of

the interface, viz. whether it has integrated SATA controller or over

PCI. It also depends on whether it has hardware RAID controller or

software RAID controller. Hardware RAID controller increases the BOM

dramatically. Hence for SOHO solutions, software RAID manager "mdam"

is used. In addition to HW support for RAID functionality, the

performance depends upon the software IO path which includes the block

device layer, device driver and filesystem used. NAS usually has

journaling file systems such as ext3 or XFS. XFS is more commonly

used for sequential writes or reads due to its extent based

architecture, whereas ext3 is well suited for sector wise reads or

writes which work on 512 bytes rather than big chunks. We used XFS in

our system. The flip side of the XFS is the fragmentation which will

be discussed in the next section. The following areas were tweaked

for performance improvements in the storage path:

2.1 Software RAID manager

There are two crucial parameters which can decide the performance of

the RAID manager.

2.1.1. Chunk size

We should set the chunk size to 64K which will

help in mirroring redundancy application, viz. RAID1 or RAID10

2.1.2. Stripe Cache size

Stripe cache size decides the stripe size

per disk. Stripe size should be judiciously decided as it will decide

the data that needs to be written in per disk of the RAID controller.

In case of journaled file system, there will be duplicate copy of the

disk and would bog down the IO if the chunk and stripe size value is

high.

2.2 Memory fragmentation

Due to its architecture, XFS requires memory in multiples of extents.

This leads to severe internal fragmentation if the blocks are of

smaller sizes. XFS demands the memory in multiples of 4K and if the

buddy allocator doesn't have enough room in order 2 or order 3 hash,

then the system may slow down till the other applications release the

memory and the kernel can join them back in the buddy allocator. This

will specifically be seen under heavy stress, after the system has

been running for a couple of days. This can be improved by tweaking

the xfs syncd centisecs (see below) to flush the stale data to disks

at higher frequency.

2.3 External journal

Journal or log plays an important role in defining XFS performance.

Any journaled file system doesn't write the data directly at the

destined sectors, but maintains the copy in the log and later writes

to the sectors. To achieve better performance, XFS writes the log at

the center of the disk whereas the data is stored in the tracks at the

outer periphery of the disk. This helps the actuator arm of the disk

to have reduced spindle movements, thereby increasing performance. If

we remove the dependency of maintaining the log in the same disk where

the actual data is stored, it increases performance dramatically.

Having NAND flash of 64M to store the log would certainly help reduced

spindle movements.

2.4 Filesystem optimization

2.4.1. Mount options

While mounting the filesystem, we can choose the mount options

as logsize=64M,noatime,nodiratime. Removing the access time for files

and directories would help relieve the CPU from continuously checking

the inodes for files and directories.

2.4.2. fs.xfs.xfssyncd_centisecs

This is the frequency at which XFS

flushes the data to disk. It will flush the log activity and clean up

the unlinked inodes. Setting this parameter to 500 will help increase

the frequency of flushing the data. This would be required if the

hardware performance is limited. Especially when the system is exposed

to constant writes, this helps a lot.

2.4.3. fs.xfs.xfsbufd_centisecs

This is the frequency at which XFS

cleans up the metadata. Keeping it low would lead to frequent cleanup

of the metadata for extents which are marked as dirty.

2.4.4. fs.xfs.age_buffer_centisecs

This is the frequency at which

xfsbufd flushes the dirty metadata to the disk. Setting it to 500

will lead to frequent cleanup of the dirty metadata.

The parameters listed in b,c and d above will lead to significant

performance improvement when the log is kept in a separate disk or

flash, compared to keeping it in the same media as the data.

2.5 Random disk IO

Simultaneous writes at non-sequential sectors would lead to a lot of

actuator arm and spindle movement, inherently leading to the

performance drop and impact on the overall throughput of the system.

Although there is very little we can do here to avoid random disk IO,

what we can control is the fragmentation.

2.6 Defragmentation

Any journaled filesystem will always have this issue when the system

is running over a long period of time or exposed to heavy stress. XFS

provides a utility named xfs_fsr which can be run periodically to

reduce the fragmentation in the disk, although it works only on the

dirty extents which are currently not in use. In fact, random disk IO

and the fragmentation issue go hand in hand. More the fragmentation

more will be the random disk IO and lesser the throughput. It is of

utmost importance to keep the fragmentation under control to reduce

the random IO.

2.7 Results

We can easily figure out that when the system is under heavy stress,

the amount of time taken by the kernel for packet processing is more

than the user space processing for the end-to-end path, i.e. from

Ethernet device to hard disk.

After implementing the above features, we captured the performance

figures on the same DUT.

For RAID creation, use '--chunk=1024'; for RAID5, use

echo 8192 > /sys/block/md0/md/stripe_cache_size

Around 37% degradation in performance was observed after 99%

fragmentation of the filesystem, as compared to when the filesystem

was 5% fragmented. Note that these figures were captured using the

bonnie++ tool.

2.7.2 Having an external log gives around 28% improvement in

performance compared to having the log in the same disk where the

actual data is stored, on a system that was 99% fragmented.

2.7.3 Running the defragmentating utility xfs_fsr on a system that is

99% fragmented gave an additional 37% improvement in performance.

2.7.4 CIFS write performance for a 1G file on a system that was

exposed to 7 days of continuous writes, increased by 133% after

implementing the optimizations. Note that this is end to end

performance that includes network and storage path.

2.7.5. The NFS write performance was measured using IOzone. NFS write

performance for a 1G file on a system that was exposed to 7 days of

continuous writes, increased by 180% after implementing the

optimizations. Note again that this is the end to end performance

that includes network and storage path.

4. Conclusion:

While Linux based commercial SMB NAS systems have the luxury of

throwing more powerful hardware to address the performance

requirements, SOHO NAS systems have to make do with resource

constrained hardware platforms, in order to meet the system cost

requirements. Although Linux is a general purpose OS that has a lot

of code to run a wide variety of applications, and is not necessarily

purpose built for an embedded NAS server application, it is possible

to configure and tune the OS to obtain good performance for this

application. Linux has a lot of knobs that can be tweaked to improve

system performance for a specific application. The challenge is in

figuring out the right set of knobs that need to be tweaked. Using

various profiling and benchmarking tools, the performance hotspots and

the optimizations to address the same, for a NAS server application

have been identified and documented.

Test data:

Bonnie++ benchmarks (ODS)

MagellanCIFS/XP (CSV)

MagellanNFS (CSV)

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/borikar.jpg)

I got introduced to Linux through the very well-known Tanenbaum

vs. Torvalds debate. Till that time, all I'd heard was that Linux

was just another Unix-like operating system. But looking at the immense

confidence of a young college graduate who was arguing with the

well-known professor, Minix writer, and network operating systems

specialist got me interested in learning more about it. From that day

forward, I couldn't leave Linux alone - and won't leave it in the future

either. I started by understanding the internals, and the more I delved

into it, the more gems I discovered in this sea of knowedge. Open

Source development really motivates you to learn and help others learn!

Currently, I'm actively involved in working with the 2.6 kernel and

specifically the MIPS architecture. I wanted to contribute my 2 cents to

the community - whatever I have gained from understanding and working

with this platform. I am hoping that my articles will be worthwhile for

the readers of LG.

![[BIO]](../gx/2002/note.png)

Raj Palani works as a Senior Manager, Software Engineering at EMC. He

has been designing and developing embedded software since 1993. His

involvement with Linux development spans more than a decade.

Activae: towards a free and community-based platform for digital asset management

By Manuel Domínguez-Dorado

Abstract

Every day, more businesses, organizations, and institutions of all kinds

generate media assets that become part of their value chains, their

know-how and production processes. We understand a multimedia digital asset

not only as a media file (audio, video, or image) but as the combination of

the file itself and its associated metadata; this allows, from

an organizational perspective, exploiting, analysis, and documentation.

About Activae project

From this perspective, it is essential that organizations have platforms

that allow the management of multimedia assets. There are several

platforms of this kind on the market [1]:

Canto Cumulus, Artesia DAM, Fuzion Media Asset Management, Nuexeo DAM, and

others. However, the number of simple and scalable platforms based on open

source software is not remarkable and that is why CENATIC [2] (Spanish

National Competence Center for the Application of Open Source

Technologies) has developed Activae [3]; a platform for digital and

multimedia asset management that is highly scalable, open source,

vendor-independent, fully developed in Python, and designed for the

Ubuntu Server.

If we were to list the features of activae according to their importance,

these would be [4]:

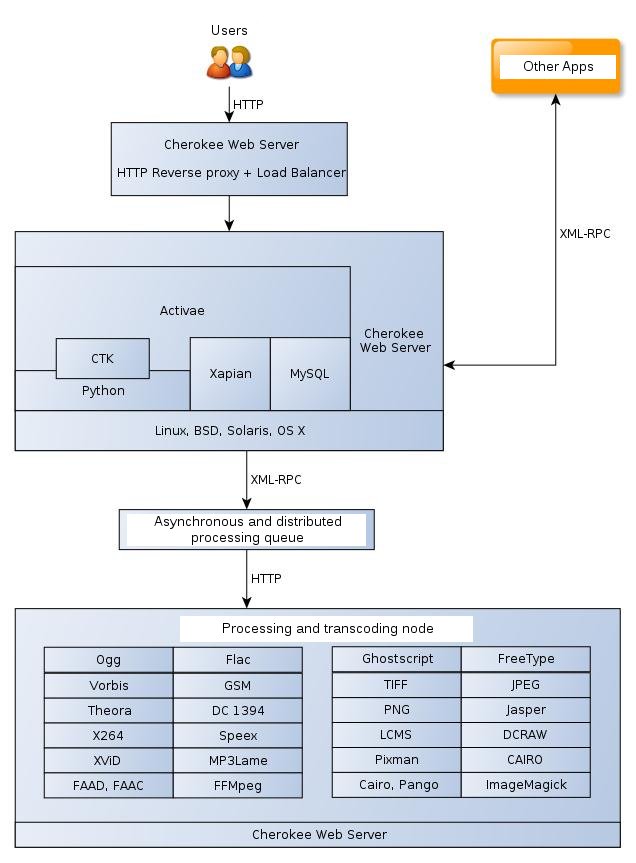

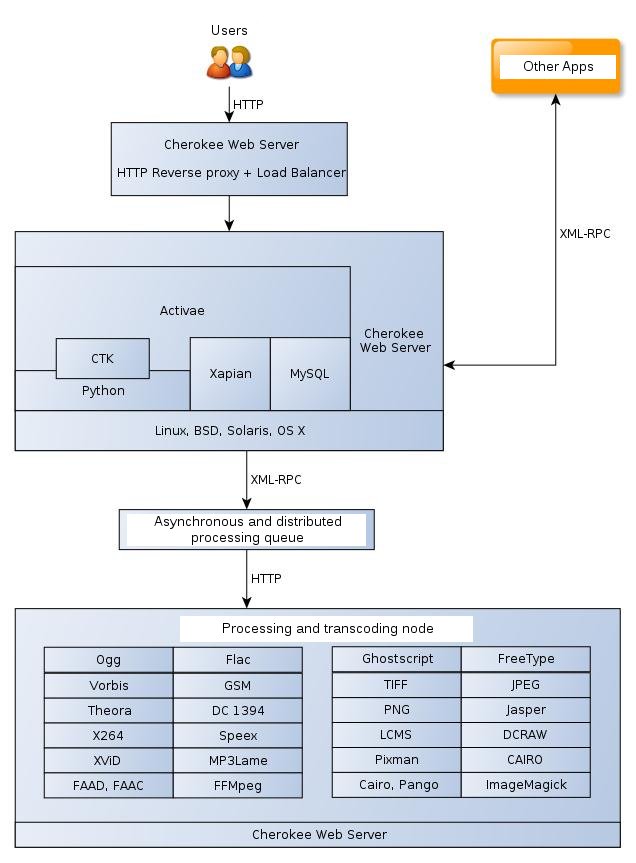

- Scalability: Activae consists of four modules:

transcoding, data access, web frontend, and an API exported via XML/RPC.

Each one of them allows load balancing and high availability, so the

scalability possibilities are broad. It can all run on one machine or

additional machines can be arranged as required. If the use of the platform

requires more storage, you can easily hot-plug it. If you use a lot of

transcoding of multimedia formats, machines can be dedicated specifically

to this work and even more can be added as you see the need.

- Load balancing: is provided by the internal engine of

the platform, the Cherokee web server [5]. Access to the application can be configured

in this way to provide service in those cases where visitors to

the platform are abundant.

- Transcoding: Activae allows advanced format

transcoding in the platform itself. If, for example, a video asset

having DivX AVI format is added, it can be transformed seamlessly into a video MPEG

H.264, or FLV to web. You can even convert a video to an MP3;

Activae is responsible for automatically eliminating the video layer and

extracting the audio. It supports transcoding between 200 different

formats/containers, and most importantly, the transcoding is done

asynchronously - decoupled and detached (in the background) while you can

continue working with Activae. It is a non-blocking process.

- 100% AJAX GUI: provided by the CTK library [6]

(Cherokee Tool Kit), a jQuery wrapper written in Python that allows the

development of advanced, light and professional user interfaces.

- The platform is fully developed in Python 2.5,

familiar to many readers: the API that is exported via

XML/RPC, the AJAX user interface, the control and business logic, the

database access, etc...

- It is open source. Activae is licensed under the

New BSD License terms. This allows modification, use, distribution, sale, or

relicensing, which is an advantage to any individual, company, or

organization that wishes to use their properties for personal use or to

provide services to third parties, expanding its portfolio of products and

services and ultimately expanding their business opportunities.

As for the technical soundness of the platform, it is ensured by being

based on renowned open source components: Xapian, Cherokee, Python,

ImageMagick, FFMpeg, etc. The following figure shows more specifically

the modules that are used by Activae, how they are interconnected, and how it

used each one of them.

Model of community and government

The project is currently housed in the Forge of CENATIC [7] . For now,

the CENATIC staff is managing its evolution, although we are

completely open to incorporate more participants: the more players, the

better. Workers from companies such as Octality [8] and ATOS Origin

[9] are involved in

the process; various developers and beta testers of other

entities are conducting pilot projects to deploy the platform

(museums, universities, television networks, associations, media companies,

...)

The development model used is the "rolling release". That is, there is no

single development branch constantly being evolved. Stable packages that

contain highly tested features are released on a frequent basis. To the

extent that the merits of the developers dictate, they go on to have enough

permissions to manage their own community around the platform. No one is

excluded on grounds of origin, sex, race, age, or ideology. The community

is a meritocracy: open, flexible, and dynamic, with the main goal of

changing the product for the benefit of all.

Call for participation

The project has been released very recently. This implies that the user and

developer community around the platform is still small, although the growth

rate is quite high. Therefore, CENATIC calls for the participation in the

project to all Python developers of the open source community that want to

be part of an innovative and forward-looking project. The way to get

started is to sign up in the Forge of CENATIC and request to join the

project. But you can also work anonymously downloading the sources via

Subversion platform.

svn checkout https://svn.forge.morfeo-project.org/activae/dam

All necessary information for participation can be found in the project

Wiki [10].

Any input is welcome: developers, translators, graphic designers, beta

testers, distributors... or just providing ideas for improvements or future

features. Although the website is currently available only in Spanish, we

are working hard to get the English version up as soon as possible. In any

case, the internal documentation of the source code as well as the comments

for each commit are completely written in English.

About CENATIC

CENATIC is the Spanish National Competence Center for the Application of

Open source Technologies. It is a public foundation, promoted by the

Ministry of Industry, Tourism, and Commerce of the Spanish Government

(through the Secretary of State for Telecommunications and Information

Society and the public entity red.es) and the Extremadura Regional

Government. Members of the Board are also the Regional Governments of

Andalusia, Asturias, Aragon, Cantabria, Catalonia, the Balearic Islands, and

the Basque Country, as well as the Atos Origin, Telefonica and GPEX companies.

CENATIC's mission is to promote knowledge and use of open source software

in all areas of society, with special attention to government, businesses,

the ICT sector - providers or users of open source technologies - and

development communities. In addition, the established Spanish regulatory

framework corresponds to CENATIC general advice on legal issues,

technology, and methodology most appropriate for the release of software

and knowledge.

Related links and references

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/dorado.jpg)

Manuel Domínguez-Dorado was born in Zafra, Extremadura (SPAIN) and he

completed his studies at the Polytechnic School of Cáceres (University

of Extremadura) where he received the Computer Engineering and Diploma

in Computer Science in 2004 and 2006, respectively. He worked for

several companies in the ICT sector before returning back to the

University of Extremadura as a researcher. In 2007, he obtained a

Certificate of Research Proficiency. Nowadays he works for CENATIC

(Spanish National Competence Center for the Application of open source

technologies) as Project Management Office (PMO) lead while complete his

doctoral thesis. His areas of interest include multiprotocol

technologies, interdomain routing and interdomain Path Computation

Element (PCE) enviroments, as well as the work of planning,

coordination, measurement and management in large-scale software

projects.

Away Mission for April 2011

By Howard Dyckoff

There are quite a few April events of interest to the Linux and Open

Source professional. The menu includes hard-core Linux development,

Android, MySQL, system and network security, and cloud computing.

USENIX NSDI '11

NSDI '11 is actually March 30-April 1 in Boston. It is co-located

with and preceeded by LEET '11, the Large-Scale Exploits and Emergent

Threats seminar.

NSDI '11 will focus on the design, implementation, and practical evaluation

of large-scale networked and distributed systems. It is primarily a forum

for researchers and the papers presented there represent the leading

research on these topics. Of course, it starts before April 1st. But it

is worth noting and, if you are in Boston, you might catch the end of it.

I attended LEET and NSDI in San Francisco 3 years back and was impressed

with the technical depth and the level of innovative thinking. But I also

have to say that many of the presentations represent advanced grad student

work at leading universities. Part of the conversation is about the

footnotes and several of the presentations are follow-ups to research

presented at earlier NSDI conferences or related events. I can honestly

say that none of the presentations were duds but equally you couldn't split

your attention with email or twitter... unless you were in that research

community.

All of the papers are available after the conference and past papers from

past Symposia are available in the NSDI archive:

http://www.usenix.org/events/bytopic/nsdi.html

Here is the link to the abstracts for this year's NSDI:

http://www.usenix.org/events/nsdi11/tech/techAbstracts.html

Multiple Linux Foundation events

The Linux Foundation Collaboration Summit is in its 3rd year. It has

always been a kind of family week for the extended community on the West

Coast, especially as several other major Linux events often occur in the

Boston area.

The Linux Foundation Collaboration Summit is an invitation-only gathering

of folks like core kernel developers, distro maintainers, system vendors,

ISVs, and leaders of community organizations who gather for plenary

sessions and face-to-face workgroup meetings in order to tackle and solve

pressing issues facing Linux. Keynote speakers include the CTO of Red Hat,

Brian Stevens, and the head of Watson Supercomputer Project, David

Ferrucci.

This year, because the merger of the Foundation with the CE Linux Forum

(CELF) in 2010, the Summit is followed by an updated Embedded Linux

Conference at the same San Francisco venue, allowing for down time between

events and lots of socializing. Co-located with the 2011 Embedded Linux

Conference is the day and a half Android Builders Summit, beginning

mid-day Wednesday, April 13th and continuing on April 14th with a full day

of content.

The ELC conference, now in its 6th year, has the largest collection of

sessions dedicated exclusively to embedded Linux and embedded Linux

developers. CELF is now a workgroup of the Linux Foundation because the

principals believe that by combining resources they can better enable the

adoption of Linux in the Consumer Electronics industry.

You can use this link to register for both of the embedded Linux events:

http://events.linuxfoundation.org/events/embedded-linux-conference/register

Also, Camp KDE is co-located with the Collaboration Summit and actually

precedes it on April 4-5. So the festivities actually run from the 4th

until the 14th.

There will be "Ask the Expert" open discussion forums on several technical

topics and Summit Conference sessions on the following key topics: Btrfs,

SystemTap, KVM Enterprise Development, File and Storage for Cloud Systems

and Device Mapper, Scaling with NFS and Tuning Systems for Low Latency.

An annual favorite at the Summit is the round table discussion on the Linux

Kernel. Moderated by kernel developer Jon Corbet, the panel will address

the technology, the process and the future of Linux. Participants will

include James Bottomley, Distinguished Engineer at Novell's SUSE Labs and

Linux Kernel maintainer of the SCSI subsystem; Thomas Gleixner, main author

of the hrtimer subsystem; and Andrew Morton, co-maintainer of the Ext3

filesystem. In previous Summits, this has been both lively and detailed.

Last year, the Collaboration Summit was where the partnership of Nokia,

Intel, and The Linux Foundation on MeeGo was announced. I understand that

there should be more such announcements this year, especially as there is

now also a mini-Android conference at the end of the 2 week period.

View the full program here:

http://events.linuxfoundation.org/events/end-user-summit/schedule

Interested in the 2010 presentations? Use this link:

http://events.linuxfoundation.org/archive/2010/collaboration-summit/slides

InfoSec World 2011

InfoSec World will be taking place on April 19-21, 2011 in Orlando, FL. The

event features over 70 sessions, dozens of case studies, 9 tracks, 12

in-depth workshops, and 3 co-located summits. The last last of these

summits, the Secure Cloud & Virtualization Summit, will take place on the

Friday after InfoSec. It features a session on VMware Attacks and How to

Prevent Them. This is a serious and professional security conference.

The opening keynote at InfoSec this year is "Cybersecurity Under Fire:

Government Mis-Steps and Private Sector Opportunities" by Roger W. Cressey,

counterterrorism analysmmmt and former pesidential advisor.

I attended an InfoSec event in 2008 and found the level of presentations to

be excellent. For instance, there was a detailed session on detecting and

exploiting stack overflows and another 2-part session with a deep dive on

Identity Management.

And their "Rock Star" Panel promised and delivered challenging opinions on

the then-hot information security topics. In 2008, the panelists discussed

hardware hacking and included Greg Hoglund, CEO of the now more famous

HBGary, Inc. and founder of www.rootkit.com, and Fyodor Vaskovich, Creator

of Nmap and Insecure.org, among other notables. (This is actually the

pseudonym of Gordon Lyon.)

My problem with prior security events hosted by MISTI is that it had been

very hard to get most of the presentation slides after the event and

nothing was available to link to for perspective attendees who might want

to sample the content - or to readers of our Away Mission column. This

year the management at MISTI is aiming to do better.

Among the sessions presented this year at InfoSec World, these specifically

will be accessible by the public after the conference - sort of the

opposite of RSA's preview mini sessions:

- - Cloud Computing: Risks and Mitigation Strategies- Kevin Haynes

- - Computer Forensics, Fraud Detection and Investigations- Thomas Akin

- - Using the Internet as an Investigative Tool- Lance Hawk

- - Social Networking: How to Mitigate Your Organization's Risk- Brent Frampton

- - Securing Content in SharePoint: A Road Map- David Totten

- - Cloud and Virtualization Security Threats- Edward Haletky

- - Hacking and Securing the iPhone, iPad and iPod Touch DEMO- Diana Kelley

- - Google Hacking: To Infinity and Beyond - A Tool Story DEMO- Francis Brow/ Rob Ragan

- - Penetration Testing in a Virtualized Environment DEMO- Tim Pierson

For more information about InfoSec World 2011, visit:

http://www.misti.com/infosecworld

MySQL User Conference

After Sun's purchase of MySQL a few years ago, and recently Oracle's

purchase of Sun, the bloom may be coming off the open source flower that

MySQL has been. Many of the original developers are working on the fork of

MySQL that is Maria. And O'Reilly has made it somewhat difficult for Linux

Gazette to attend this and other events that they host. But there is still

a huge user base for MySQL and this is the annual user conference.

Last year's event seemed a bit more compressed -- fewer tracks, fewer

rooms in the hotel adjacent to the SC Convention Center, fewer vendors in

the expo hall. Also, in a sign of the depressed economy, there was no

breakfast in the mornings. There were coffee and tea islands between the

session rooms, but the signature Euro breakfast at the MySQL conference was

missing, as were many of the Europeans. This is now clearly an American

corporate event.

That being said, there were better conference survey prizes last year -- an