...making Linux just a little more fun!

March 2010 (#172):

- Mailbag

- Talkback

- 2-Cent Tips

- News Bytes, by Deividson Luiz Okopnik and Howard Dyckoff

- Recovering FAT Directory Stubs with SleuthKit, by Silas Brown

- The Next Generation of Linux Games - GLtron and Armagetron Advanced, by Dafydd Crosby

- Away Mission - Recommended for March, by Howard Dyckoff

- Tunnel Tales 1, by Henry Grebler

- Logging to a Database with Rsyslog, by Ron Peterson

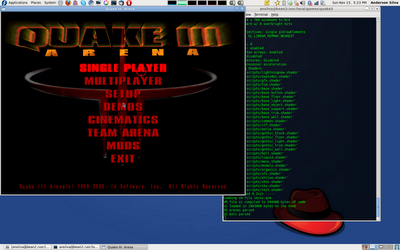

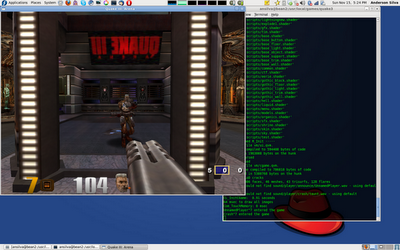

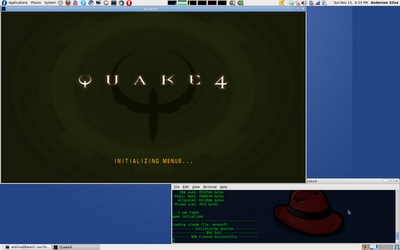

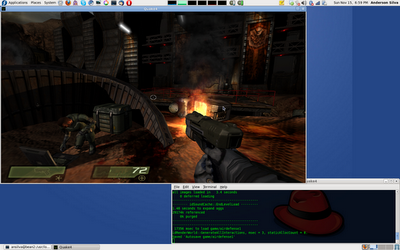

- Running Quake 3 and 4 on Fedora 12 (64-bit), by Anderson Silva

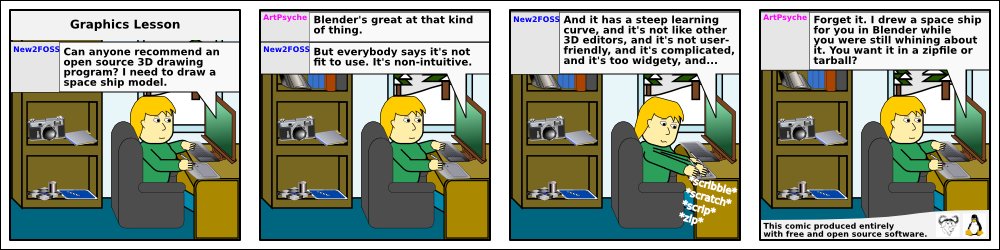

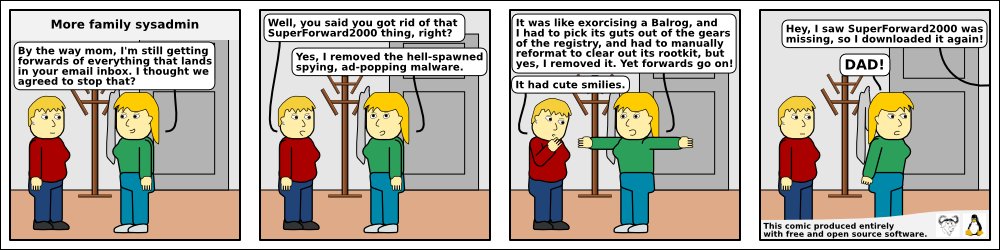

- HelpDex, by Shane Collinge

- Doomed to Obscurity, by Pete Trbovich

- The Backpage, by Ben Okopnik

Mailbag

This month's answers created by:

[ Ben Okopnik, René Pfeiffer, Thomas Adam ]

...and you, our readers!

Our Mailbag

mbox selective deletion

Sam Bisbee [sbisbee at computervip.com]

Fri, 5 Feb 2010 19:28:52 -0500

Hey gang,

Here's the deal: I'm trying to delete a message from an mbox with Bash. I have

the message number that I got by filtering with `frm` (the message is

identified by a header that holds a unique SHA crypt). You've problem guessed

by now, but mailutils is fair game.

I don't want to convert from mbox to maildir in /tmp on each run, because it's

reasonable that the script would be run every minute. Also, I don't want to put

users through that pain with a large mbox.

Also, I really don't want to write a "delete by message number" program in C

using the libmailutils program, but I will resort to it if needed.

I saw http://www.argon.org/~roderick/mbox-purge.html, but would like to have

"common" packages as dependencies only.

Is there some arg that I missed in `mail`? Should I just try and roll

mbox-purge in? All ideas, tricks and release management included, are welcome.

Cheers,

--

Sam Bisbee

[ Thread continues here (10 messages/30.08kB) ]

Vocabulary lookup from the command line?

René Pfeiffer [lynx at luchs.at]

Thu, 4 Feb 2010 01:08:04 +0100

Hello, TAG!

I am trying to brush up my French - again. So I bought some comics in

French and I try to read them. Does anyone know of a command line tool

or even a "vocabulary shell" that allows me to quickly look up words,

prreferrably French words with Germen or English translations?

I can always hack a Perl script that queries dict.leo.org or similar

services, but a simple lookup tool should be around already.

Salut,

René.

[ Thread continues here (7 messages/11.42kB) ]

Talkback: Discuss this article with The Answer Gang

Published in Issue 172 of Linux Gazette, March 2010

Talkback

Talkback:168/lg_tips.html

[ In reference to "2-Cent Tips" in LG#168 ]

Amit Saha [amitsaha.in at gmail.com]

Mon, 1 Feb 2010 20:43:28 +0530

Hell Bob McCall:

On Mon, Feb 1, 2010 at 8:27 PM, Bob McCall <rcmcll@gmail.com> wrote:

> Amit:

> I saw your two cent tip on gnuplot. Have you seen this?

>

> http://ndevilla.free.fr/gnuplot/

>

> best,

> Bob

Nice! Thanks for the pointer, I am CCing TAG.

Best,

Amit

--

Journal: http://amitksaha.wordpress.com,

µ-blog: http://twitter.com/amitsaha

Freenode: cornucopic in #scheme, #lisp, #math,#linux, #python

Talkback: Discuss this article with The Answer Gang

Published in Issue 172 of Linux Gazette, March 2010

2-Cent Tips

Two-cent Tip: Retrieving directory contents

Ben Okopnik [ben at linuxgazette.net]

Wed, 3 Feb 2010 22:35:08 -0500

During a recent email discussion regarding pulling down the LG archives

with 'wget', I discovered (perhaps mistakenly; if so, I wish someone

would enlighten me [1]) that there's no way to tell it to pull down all

the files in a directory unless there's a page that links to all those

files... and the directory index doesn't count (even though it contains

links to all those files.) So, after a minute or two of fiddling with

it, I came up with a following solution:

#!/bin/bash

# Created by Ben Okopnik on Fri Jan 29 14:41:57 EST 2010

[ -z "$1" ] && { printf "Usage: ${0##*/} <URL> \n"; exit; }

# Safely create a temporary file

file=`tempfile`

# Extract all the links from the directory listing into a local text file

wget -q -O - "$1"|\

URL="${1%/}" perl -wlne'print "$ENV{URL}/$2" if /href=(["\047])([^\1]+)\1/' > $file

# Retrieve the listed links

wget -i $file && rm $file

To summarize, I used 'wget' to grab the directory listing, parse it

to extract all the links, prefixing them with the site URL, and saved

the result into a local tempfile. Then, I used that tempfile as a source

for 'wget's '-i' option (read the links to be retrieved from a file.)

I've tested this script on about a dozen directories with a variety of

servers, and it seems to work fine.

[1] Please test your proposed solution, though. I'm rather cranky at

'wget' with regard to its documentation; perhaps it's just me, but I

often find that the options described in its manpage do something rather

different from what they promise to do. For me, 'wget' is a terrific

program, but the documentation has lost something in the translation

from the original Martian.

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *

Two-cent Tip: How big is that directory?

Dr. Parthasarathy S [drpartha at gmail.com]

Tue, 2 Feb 2010 09:57:02 +0530

At times, you may need to know exactly how big is a certain directory

(say top directory) along with all its contents and subdirectories(and

their contents). You may need this if you are copying a large diectory

along with its contents and structure. And you may like to know if what

you got after the copy, is what you sent. Or you may need this when

trying to copy stuff on to a device where the space is limited. So you

want to make sure that you can accomodate the material you are planning

to send.

Here is a cute little script. Calling sequence::

howmuch <top directory name>

You get a summary, which gives the total size, the number of

subdirectories, and the number of files (counted from the top

directory). Good for book-keeping.

###########start-howmuch-script

# Tells you how many files, subdirectories and content bytes in a

# directory

# Usage :: how much <directory-path-and-name>

# check if there is no command line argument

if [ $# -eq 0 ]

then

echo "You forgot the directory to be accounted for !"

echo "Usage :: howmuch <directoryname with path>"

exit

fi

echo "***start-howmuch***"

pwd > ~/howmuch.rep

pwd

echo -n "Disk usage of directory ::" > ~/howmuch.rep

echo $1 >> ~/howmuch.rep

echo -n "made on ::" >> ~/howmuch.rep

du -s $1 > ~/howmuch1

tree $1 > ~/howmuch2

date >> ~/howmuch.rep

tail ~/howmuch1 >> ~/howmuch.rep

tail --lines=1 ~/howmuch2 >> ~/howmuch.rep

cat ~/howmuch.rep

# cleanup

rm ~/howmuch1

rm ~/howmuch2

#Optional -- you can delete howmuch.rep if you want

#rm ~/howmuch.rep

echo "***end-howmuch***"

#

########end-howmuch-script

--

---------------------------------------------------------------------------------------------

Dr. S. Parthasarathy | mailto:drpartha@gmail.com

Algologic Research & Solutions |

78 Sancharpuri Colony |

Bowenpally P.O | Phone: + 91 - 40 - 2775 1650

Secunderabad 500 011 - INDIA |

WWW-URL: http://algolog.tripod.com/nupartha.htm

---------------------------------------------------------------------------------------------

[ Thread continues here (5 messages/9.85kB) ]

Two-cent tip: GRUB and inode sizes

René Pfeiffer [lynx at luchs.at]

Wed, 3 Feb 2010 01:07:03 +0100

Hello!

I had a decent fight with a stubborn server today. It was a Fedora Core

6 system (let's not talk about how old it is) that was scheduled for a

change of disks. This is fairly straightforward - until you have to

write the boot block. Unfortunately I prepared the new disks before

copying the files. As soon as I wanted to install GRUB 0.97 it told me

that it could not read the stage1 file. The problem is that GRUB only

deals with 128-byte inodes. The prepared / partition has 256-byte

inodes. So make sure to use

mkfs.ext3 -I 128 /dev/sda1

when preparing disks intended to co-exist with GRUB. I know this is old

news, but I never encountered this problem before.

http://www.linuxplanet.com/linuxplanet/tutorials/6480/2/ has more hints

ready.

Best,

René,

who is thinking about moving back to LILO.

[ Thread continues here (3 messages/2.77kB) ]

Two-cent Tip: backgrounding the last stopped job without knowing its job ID

Mulyadi Santosa [mulyadi.santosa at gmail.com]

Mon, 22 Feb 2010 16:14:09 +0700

For most people, to send a job to background after stopping a task,

he/she will take a note the job ID and then invoke "bg" command

appropriately like below:

$ (while (true); do yes > /dev/null ; done)

^Z

[2]+ Stopped ( while ( true ); do

yes > /dev/null;

done )

$ bg %2

[2]+ ( while ( true ); do

yes > /dev/null;

done ) &

Can we omit the job ID? Yes, we can. Simply replace the above "bg %2"

with "bg %%". It will refer to the last stopped job ID. This way,

command typing mistake could be avoided too.

--

regards,

Mulyadi Santosa

Freelance Linux trainer and consultant

blog: the-hydra.blogspot.com

training: mulyaditraining.blogspot.com

[ Thread continues here (4 messages/4.27kB) ]

Talkback: Discuss this article with The Answer Gang

Published in Issue 172 of Linux Gazette, March 2010

News Bytes

By Deividson Luiz Okopnik and Howard Dyckoff

|

Contents:

|

Selected and Edited by Deividson Okopnik

Please submit your News Bytes items in

plain text; other formats may be rejected without reading.

[You have been warned!] A one- or two-paragraph summary plus a URL has a

much higher chance of being published than an entire press release. Submit

items to bytes@linuxgazette.net. Deividson can also be reached via twitter.

News in General

Red Hat Announces Fourth Annual Innovation Awards

Red Hat Announces Fourth Annual Innovation Awards

The fourth annual Red Hat Innovation awards will be presented at the

2010 Red Hat Summit and JBoss World, co-located in Boston, from June 22 to

25. The Innovation Awards recognize the creative use of Red Hat and JBoss

solutions by customers, partners and the community.

Categories for the 2010 Innovation Awards include:

- Optimized Solutions: Recognition of striking performance,

scalability and/or usability enhancements delivered with open source

solutions;

- Superior Alternatives: Recognition of the most successful

migration from proprietary solutions to open source alternatives;

- Extensive Ecosystem: Recognition of the use of Red Hat or JBoss'

expanding partner ecosystem to create innovative architectures based

on open source solutions;

- Carved out Costs: Recognition of customers who have leveraged

open source solutions to significantly cut costs and extract added

value from existing systems;

- Outstanding Open Source Architecture: Recognition of the use of

Red Hat, JBoss and partner offerings to create innovative

architectures based on open source solutions.

Submissions for the Innovations Awards will be accepted until April

15, 2010. Four categories will each recognize two winning submissions,

one from Red Hat and one from JBoss, and the Outstanding Open Source

Architecture category will recognize one winner who is deploying both

Red Hat and JBoss solutions. From these category winners, one Red Hat

Innovator of the Year and one JBoss Innovator of the Year will be

selected by the community through online voting, and will be announced

at an awards ceremony at the Red Hat Summit and JBoss World. To view

last year's winners, visit:

http://press.redhat.com/2009/09/10/red-hat-and-jboss-innovators-of-the-year-2009/.

For more information, or to submit a nomination for the 2010

Innovation Awards, visit: www.redhat.com/innovationawards. For

more information on the 2010 Red Hat Summit and JBoss World, visit:

http://www.redhat.com/promo/summit/2010/.

Novell and LPI Partner on Linux Training and Certification

Novell and LPI Partner on Linux Training and Certification

Novell and The Linux Professional Institute (LPI) have announced an

international partnership to standardize their entry-level Linux

certification programs on LPIC-1. Under this program, Linux

professionals who have earned their LPIC-1 status will also satisfy

the requirements for the Novell Certified Linux Administrator (CLA)

certification. In addition, Novell Training Services has formally

agreed to include required LPIC-1 learning objectives in its CLA course

training material.

Adoption of Linux, including SUSE Linux Enterprise from Novell, is

accelerating as the industry pursues cost saving solutions that deliver

maxiumum reliability and manageability. A 2009 global survey of IT

executives revealed that 40 percent of survey participants plan to deploy

additional workloads on Linux over the next 12-24 months and 49 percent

indicated Linux will be their primary server platform within five

years.

"This partnership represents the strong support the LPI certification

program has within the wider IT and Linux community. This historical

support has included contributions from vendors such as Novell and has

assisted LPI to become the most widely recognized and accepted Linux

certification," said Jim Lacey, president and CEO of LPI. "We look forward

to working with Novell to promote the further development of the Linux

workforce of the future. In particular, by aligning its training and exam

preparation curriculum to support LPIC-1 objectives, Novell has recognized

the industry's need for a vendor-neutral certification program that

prepares IT professionals to work with any Linux distribution in an

enterprise environment."

Under the terms of the agreement, all qualified LPIC-1 holders - except

those in Japan - will have the opportunity to apply for Novell CLA

certification without additional exams or fees. Novell Training Services

will include LPIC-1 objectives into its Linux Administrator curriculum and

programs which include self study, on demand, and partner-led classroom

training.

More information about acquiring dual certification status can be

found at Novell here: http://www.novell.com/training/certinfo/cla/ and at

the Linux Professional Institute here: http://www.lpi.org/cla/.

Conferences and Events

- ESDC 2010 - Enterprise Software Development Conference

-

March 1 - 3, San Mateo Marriott, San Mateo, Ca

http://www.go-esdc.com/.

- RSA 2010 Security Conference

-

March 1 - 5, San Francisco, CA

http://www.rsaconference.com/events.

- Oracle + Sun Customer Events

-

March 2010, US & International Cities:

March 2, New York

March 3, Toronto

March 11, London

March 16, Hong Kong

March 18, Moscow

http://www.oracle.com/events/welcomesun/index.html

- Enterprise Data World Conference

-

March 14 - 18, Hilton Hotel, San Francisco, CA

http://edw2010.wilshireconferences.com/index.cfm.

- Cloud Connect 2010

-

March 15 - 18, Santa Clara, CA

http://www.cloudconnectevent.com/.

- Open Source Business Conference (OSBC)

-

March 17 - 18, Palace Hotel, San Francisco, CA

https://www.eiseverywhere.com/ehome/index.php?eventid=7578.

- EclipseCon 2010

-

March 22 - 25, Santa Clara, CA

http://www.eclipsecon.org/2010/.

- User2User - Mentor Graphics User Group

-

April 6, Marriott Hotel, Santa Clara, CA

http://user2user.mentor.com/.

- Texas Linux Fest

-

April 10, Austin, TX

http://www.texaslinuxfest.org/.

- MySQL Conference & Expo 2010

-

April 12 - 15, Convention Center, Santa Clara, CA

http://en.oreilly.com/mysql2010/.

- Black Hat Europe 2010

-

April 12 - 15, Hotel Rey Juan Carlos, Barcelona, Spain

http://www.blackhat.com/html/bh-eu-10/bh-eu-10-home.html.

- 4th Annual Linux Foundation Collaboration Summit

-

Co-located with the Linux Forum Embedded Linux Conference

April 14 - 16, Hotel Kabuki, San Francisco, CA

By invitation only.

- eComm - The Emerging Communications Conference

-

April 19 - 21, Airport Marriott, San Francisco, CA

http://america.ecomm.ec/2010/.

- STAREAST 2010 - Software Testing Analysis & Review

-

April 25 - 30, Orlando, FL

http://www.sqe.com/STAREAST/.

- Usenix LEET '10, IPTPS '10, NSDI '10

-

April 27, 28 - 30, San Jose, CA

http://usenix.com/events/.

- Citrix Synergy-SF

-

May 12 - 14, San Francisco, CA

http://www.citrix.com/lang/English/events.asp.

- Black Hat Abu Dhabi 2010

-

May 30 - June 2, Abu Dhabi, United Arab Emirates

http://www.blackhat.com/html/bh-ad-10/bh-ad-10-home.html.

- Uber Conf 2010

-

June 14 - 17, Denver, CO

http://uberconf.com/conference/denver/2010/06/home/.

- Semantic Technology Conference

-

June 21 - 25, Hilton Union Square, San Francisco, CA

http://www.semantic-conference.com/.

- O'Reilly Velocity Conference

-

June 22 - 24, Santa Clara, CA

http://en.oreilly.com/velocity/.

Distro News

RHEL 5.5 Beta Available

RHEL 5.5 Beta Available

Red Hat has announced the beta release of RHEL 5.5

(kernel-2.6.18-186.el5) for the Red Hat Enterprise Linux 5 family of

products including RHEL 5 Server, Desktop, and Advanced Platform for x86,

AMD64/Intel64, Itanium Processor Family, and Power Systems

micro-processors.

The supplied beta packages and CD and DVD images are intended for

testing purposes only. Benchmark and performance results cannot be

published based on this beta release without explicit approval from

Red Hat.

The beta of RHEL 5.5, called Anaconda, has an "upgrade" option for an

upgrade from Red Hat Enterprise Linux 4.8 or 5.4 to the Red Hat Enterprise

Linux 5.5 beta. However Red Hat cautions that there is no guarantee the

upgrade will preserve all of a system's settings, services, and

custom configurations. For this reason, Red Hat recommends a fresh

install rather than an upgrade. Upgrading from beta release to the GA

product is not supported.

A public general discussion mailing list for beta testers is at:

https://www.redhat.com/mailman/listinfo/rhelv5-beta-list/

The beta testing period is scheduled to continue through March 16,

2010.

SimplyMEPIS 8.5 Beta5

SimplyMEPIS 8.5 Beta5

MEPIS has announced SimplyMEPIS 8.4.97, the fifth beta of the forthcoming

MEPIS 8.5, now available from MEPIS and public mirrors. The ISO

files for 32 and 64 bit processors are SimplyMEPIS-CD_8.4.97-b5_32.iso

and SimplyMEPIS-CD_8.4.97-b5_64.iso respectively. Deltas, requested by the

MEPIS community, are also available.

According to project lead Warren Woodford, "We are coming down the home

stretch on the development of SimplyMEPIS version 8.5. There is some work

to be done on MEPIS components, but the major packages are locked in to

what may be their final versions. We've been asked to make more package

updates, but we can't go much further without switching to a Debian Squeeze

base. Currently, we have kernel 2.6.32.8, KDE 4.3.4, gtk 2.18.3-1,

OpenOffice 3.1.1-12, Firefox 3.5.6-2, K3b 1.70.0-b1, Kdenlive 0.7.6,

Synaptic 0.63.1, Gdebi 0.5.9, and bind9 9.6.1-P3."

Progress on SimplyMEPIS development can be followed at

http://twitter.com/mepisguy/ or at http://www.mepis.org/.

ISO images of MEPIS community releases are published to the 'released'

subdirectory at the MEPIS Subscriber's Site and at MEPIS public

mirrors.

Tiny Core Releases V2.8

Tiny Core Releases V2.8

Tiny Core Linux is a very small (10 MB) minimal Linux GUI Desktop. It

is based on Linux 2.6 kernel, Busybox, Tiny X, and Fltk. The core runs

entirely in ram and boots very quickly. Also offered is Micro Core a 6

MB image that is the console based engine of Tiny Core. CLI versions

of Tiny Core's program allows the same functionality of Tiny Core's

extensions only starting with a console based system.

It is not a complete desktop nor is all hardware completely supported.

It represents only the core needed to boot into a very minimal X

desktop typically with wired Internet access.

Extra applications must be downloaded from online repositories or

compiled using tools provided.

The theme for this newest release is to have a single directory for

extensions and dependencies. This greatly improves systems resources

by having a single copy of dependencies, also greatly improves

flexibility in "moving" applications present upon boot, dependency

auditing, and both batch and selective updating.

Tiny Core V2.8 is now posted at

http://distro.ibiblio.org/pub/linux/distributions/tinycorelinux/2.x/release/.

FreeBSD 7.3 Expected March 2010

FreeBSD 7.3 Expected March 2010

The FreeBSD 7.3 Beta-1 for legacy systems was released on January

30. The production release is expected in early March.

Software and Product News

Ksplice "Abolishes the Reboot"

Ksplice "Abolishes the Reboot"

Ksplice Inc. announced its Uptrack service patching service in

February, eliminating the need to restart Linux servers when

installing crucial updates and security patches.

Based on technology from the Massachusetts Institute of Technology,

Ksplice Uptrack is a subscription service that allows IT

administrators to keep Linux servers up-to-date without the disruption

and downtime of rebooting.

In 2009, major Linux vendors asked customers to install a kernel

update roughly once each month. Before Uptrack, system administrators

had to schedule downtime in advance to bring Linux servers up-to-date,

because updating the kernel previously required rebooting the

computer. By allowing IT administrators to install kernel updates

without downtime, Uptrack dramatically reduces the cost of system

administration.

Ksplice Uptrack is now available for users of six leading versions of

Linux: Red Hat Enterprise Linux, Ubuntu, Debian GNU/Linux, CentOS,

Parallels Virtuozzo Containers, and OpenVZ. The subscription fee

starts at $3.95 per month per system, after a 30-day free trial. The

rate drops to $2.95 after 20 servers are covered. A free version is

also available for Ubuntu.

Visit the Ksplice website here: http://www.ksplice.com/.

MariaDB Augments MySQL 5.1

MariaDB Augments MySQL 5.1

MariaDB 5.1.42, a new branch of the MySQL database - which includes

all major open source storage engines, myriad bug fixes, and many

community patches - has been released.

MariaDB is kept up to date with the latest MySQL release from the same

branch. MariaDB 5.1.42 is based on MySQL 5.1.42 and XtraDB 1.0.6-9.

In most respects MariaDB will work exactly as MySQL: all commands,

interfaces, libraries and APIs that exist in MySQL also exist in

MariaDB.

MariaDB contains extra fixes for Solaris and Windows, as well as

improvements in the test suite.

For information on installing MariaDB 5.1.42 on new servers or

upgrading to MariaDB 5.1.42 from previous releases, please check out

the installation guide at

http://askmonty.org/wiki/index.php/Manual:Installation/.

MariaDB is available in source and binary form for a variety of

platforms and is available from the download pages:

http://askmonty.org/wiki/index.php/MariaDB:Download:MariaDB_5.1.42/.

Moonlight 3.0 Preview Now Available

Moonlight 3.0 Preview Now Available

The Moonlight project has released the second preview of Moonlight 3.0

in time for viewing the Winter Olympics. The final release will

contain support for some features in Silverlight 4.

Moonlight is an open-source implementation of Microsoft's Silverlight

platform for creating and viewing multiple media types.

This release updates the Silverlight 3.0 support in Moonlight 2.0,

mostly on the infrastructure level necessary to support the rest of

the features. This release includes:

- MP4 demuxer support. The demuxer is in place but there are no

codecs for it yet;

- Initial work on UI Virtualization;

- Platform Abstraction Layer: the Moonlight core is now separated

from the windowing system engine. This should make it possible for

developers to port Moonlight to other windowing/graphics systems that

are not X11/Gtk+ centric;

- New 3.0 Binding/BindingExpression support.

Download Moonlight here: http://www.go-mono.com/moonlight/.

OpenOffice.org 3.2 Faster, More Secure

OpenOffice.org 3.2 Faster, More Secure

At the start of its tenth anniversary year, the OpenOffice.org

Community

announced OpenOffice.org 3.2. The latest version starts faster,

offers new functions and better compatibility with other office

software. The newest version also fixes bugs and potential security

vulnerabilities.

In just over a year from its launch, OpenOffice.org 3 had recorded

over one hundred million downloads from its central download site alone.

OpenOffice.org 3.2 new features include:

- faster start up times;

- improved compatibility with ODF and proprietary file formats;

- improvements to all components, particularly the Calc spreadsheet;

- usability makeover and new chart types for the Chart module.

A full guide to new features is available at

http://www.openoffice.org/dev_docs/features/3.2/.

All users are encouraged to upgrade to the new release, because of the

potential security vulnerabilities addressed in 3.2. Any people still

using OpenOffice.org 2 should note that this version was declared 'end

of life' in December 2009, with no further security patches or

bugfixes

being issued by the Community. For details, see

http://development.openoffice.org/releases/eol.html.

The security bulletin covering fixes for the potential vulnerabilities is

here: http://www.openoffice.org/security/bulletin.html.

Download OpenOffice.org 3.2 here: http://download.openoffice.org.

New Release of Oracle Enterprise Pack for Eclipse 11g

New Release of Oracle Enterprise Pack for Eclipse 11g

In February, Oracle released the Oracle Enterprise Pack for Eclipse

11g, a component of Oracle's Fusion Middleware.

The Enterprise Pack for Eclipse is a free set of certified plug-ins

that enable developers to build Java EE and Web Services applications

for the Oracle Fusion Middleware platform where Eclipse is the

preferred Integrated Development Environment (IDE). This release

provides an extension to Eclipse with Oracle WebLogic Server features,

WYSIWYG Web page editing, SCA support, JAX-WS Web Service validation,

an integrated tag and data palette, and smart editors.

Also new with this release is Oracle's AppXRay feature, a design time

dependency analysis and visualization tool that makes it easy for Java

developers to work in a team setting and reduce runtime debugging.

The new features in Oracle Enterprise Pack for Eclipse 11g allow

WebLogic developers to increase code quality by catching errors at

design time.

FastSwap support enables WebLogic developers to use FastSwap in

combination with AppXRay to allow changes to Java classes without

requiring re-deployment, also reducing the amount of time spent

deploying/re-deploying an application.

GWOS Monitor Enterprise Release 6.1 Adds Ubuntu Support

GWOS Monitor Enterprise Release 6.1 Adds Ubuntu Support

GWOS Monitor Enterprise Release 6.1 has added support for Ubuntu

servers. It also includes many improvements and strengthens the GWOS

platform for monitoring heterogeneous, complex IT environments. This

new release, part of the ongoing evolution of the GWOS solution stack,

is available to Enterprise customers with a current subscription

agreement. GroundWorks is also available in a community edition.

Highlights in this new release include:

- Ubuntu Server 8.04 LTS and Ubuntu Server 9.10 are now supported

platforms;

- Tighter integration between the event view and state-oriented

views, reducing the time to diagnose a problem anywhere in the

monitored environment;

- Connect to affected systems directly from the status viewer with

two clicks. This feature, subject to administrator control, enables

faster remediation of detected problems by operations staff. Supports

console (SSH), web-based (HTTP/HTTPS) and graphical (VNC, RDP)

administration;

- Viewing all historical and performance information over

arbitrary time periods;

- Direct web links to specific service groups, services, host

groups and hosts within the portal application. Back-links to the

monitoring system are particularly useful when included in

notification messages and ticketing systems.

- Updated components including Nagios Core project 3.2 and the

JBoss Portal;

- Performance improvements for large systems configuration.

GroundWork 6.1, Enterprise Edition with Ubuntu support, is only $49

for the first 100 devices monitored. Download it here:

http://www.groundworkopensource.com/newExchange/flex-quickstart/.

Also in January, GroundWork released Brooklyn, their iPhone app for

GroundWork Monitor and Nagios. The iPhone app costs $8.99 at the Apple

App Store.

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/dokopnik.jpg)

Deividson was born in União da Vitória, PR, Brazil, on

14/04/1984. He became interested in computing when he was still a kid,

and started to code when he was 12 years old. He is a graduate in

Information Systems and is finishing his specialization in Networks and

Web Development. He codes in several languages, including C/C++/C#, PHP,

Visual Basic, Object Pascal and others.

Deividson works in Porto União's Town Hall as a Computer

Technician, and specializes in Web and Desktop system development, and

Database/Network Maintenance.

Howard Dyckoff is a long term IT professional with primary experience at

Fortune 100 and 200 firms. Before his IT career, he worked for Aviation

Week and Space Technology magazine and before that used to edit SkyCom, a

newsletter for astronomers and rocketeers. He hails from the Republic of

Brooklyn [and Polytechnic Institute] and now, after several trips to

Himalayan mountain tops, resides in the SF Bay Area with a large book

collection and several pet rocks.

Howard maintains the Technology-Events blog at

blogspot.com from which he contributes the Events listing for Linux

Gazette. Visit the blog to preview some of the next month's NewsBytes

Events.

Recovering FAT Directory Stubs with SleuthKit

By Silas Brown

When I accidentally dropped an old Windows Mobile PocketPC onto the floor

at the exact moment it was writing to a memory card, the memory card's

master FAT was corrupted and several directories disappeared from the root

directory. Since it had not been backed up for some time, I connected the

memory card to a Linux system for investigation. (At this point it is

important not to actually mount the card. If you have an automounter, turn

it off before connecting. You have to access it as a device, for example

/dev/sdb1. To see which device it is, you can do ls

/dev/sd* both before and after connecting it and see what appears. The

following tools read from the device directly, or from an image of it

copied using the dd command.)

fsck.vfat -r offered to return over 1 GB of data to the free

space. This meant there was over 1 GB of data that was neither indexed in

the FAT nor listed as free space, i.e. that was the lost directories. It

was important not to let fsck.vfat mark it as free yet, as this

would impair data recovery. fsck.vfat can also search this data

for contiguous blocks and reclaim them as files, but that would not have

been ideal either because the directory structure and all the filenames

would have been lost, leaving thousands of meaninglessly-named files to be

sorted out by hand (some of which may end up being split into more than one

file).

The directory structure was in fact still there; only the entries for the

top-level directories had been lost. The listings of their subdirectories

(including all filenames etc) were still present somewhere on the disk, but

the root directory was no longer saying where to find them. A few Google

searches seemed to suggest that the orphaned directory listings are

commonly known as "directory stubs" and I needed a program that could

search the disk for lost directory stubs and restore them. Unfortunately

such programs were not very common. The only one I found was a commercial

one called CnW Recovery, but that

requires administrator access to a Windows PC (which I do not have).

SleuthKit to the rescue

A useful utility is SleuthKit, available as a package on most distributions

(apt-get install sleuthkit) or from sleuthkit.org. SleuthKit consists of

several commands, the most useful of which are fls and

icat. The fls command takes an image file (or device) and

an inode number, and attempts to display the directory listing that is

stored at that inode number (if there is one). This directory listing will

show files and other directories, and the inode numbers where they are

stored. The icat command also takes an image file and an inode

number, and prints out the contents of the file stored at that inode number

if there is one. Hence, if you know the inode number of the root directory,

you can chase through a normal filesystem with fls commands

(following the inode numbers of subdirectories etc) and use icat

to access the files within them. fls also lists deleted entries

(marked with a *) as long as those entries are still present in

the FAT. (Incidentally, these tools also work on several other filesystems

besides FAT, and they make them all look the same.)

The range of valid inode numbers can be obtained using SleuthKit's

fsstat command. This tells you the root inode number (for example

2) and the maximum possible inode number (for example 60000000).

fsstat will also give quite a lot of other information, so you may

want to pipe its output through a pager such as more or

less (i.e. type fsstat|more or fsstat|less) in

order to catch the inode range near the beginning of the output.

Scanning for directory stubs

Because the root FAT had been corrupted, using fls on it did not

reveal the inode locations of the lost directories. Therefore it was

necessary to scan through all possible inode numbers in order to find them.

This is a lot of work to do manually, so I wrote a Python script to call

the necessary fls commands automatically. First it checks the root

directory and all subdirectories for the locations of "known" files and

directories, and then it scans all the other inodes to see if any of them

contain directory listings that are not already known about. If it finds a

lost directory listing, it will try to recover all the files and

subdirectories in it with their correct names, although it cannot recover

the name of the top-level directory it finds.

Sometimes it finds data that just happens to pass the validity check for a

directory listing, but isn't. This results in it creating a "recovered"

directory full of junk. But often it correctly recovers a lost directory.

image = "/dev/sdb1"

recover_to = "/tmp/recovered"

import os, commands, sys

def is_valid_directory(inode):

exitstatus,outtext = commands.getstatusoutput("fls -f fat "+image+" "+str(inode)+" 2>/dev/null")

return (not exitstatus) and outtext

def get_ls(inode): return commands.getoutput("fls -f fat "+image+" "+str(inode))

def scanFilesystem(inode, inode2path_dic, pathSoFar=""):

if pathSoFar: print " Scanning",pathSoFar

for line in get_ls(inode).split('\n'):

if not line: continue

try: theType,theInode,theName = line.split(None,2)

except: continue # perhaps no name (false positive inode?) - skip

if theInode=='*': continue # deleted entry (theName starts with inode) - ignore

assert theInode.endswith(":") ; theInode = theInode[:-1]

if theType=="d/d": # a directory

if theInode in inode2path_dic: continue # duplicate ??

inode2path_dic[theInode] = pathSoFar+theName+"/"

scanFilesystem(theInode, inode2path_dic, pathSoFar+theName+"/")

elif theType=="r/r": inode2path_dic[theInode] = pathSoFar+theName

print "Finding the root directory"

i=0

while not is_valid_directory(i): i += 1

print "Scanning the root directory"

root_inode2path = {}

scanFilesystem(i,root_inode2path)

print "Looking for lost directory stubs"

recovered_directories = {}

while i < 60000000:

i += 1

if i in root_inode2path or i in recovered_directories: continue # already known

sys.stdout.write("Checking "+str(i)+" \r") ; sys.stdout.flush()

if not is_valid_directory(i): continue

inode2path = root_inode2path.copy()

scanFilesystem(i,inode2path)

for n in inode2path.keys():

if n in root_inode2path: continue # already known

p = recover_to+"/lostdir-"+str(i)+"/"+inode2path[n]

if p[-1]=="/": # a directory

recovered_directories[n] = True

continue

print "Recovering",p

os.system('mkdir -p "'+p[:p.rindex("/")]+'"')

os.system("icat -f fat "+image+" "+str(n)+' > "'+p+'"')

Note that the script might find a subdirectory before it finds its

parent directory. For example if you have a lost directory A which

has a subdirectory B, it is possible that the script will find

B first and recover it, then later when it finds A it

will recover A, re-recover B, and place the new copy of

B inside the recovered A, so you will end up with both

A, B and A/B. You have to manually decide which

of the recovered directories are actually the top-level ones. The script

does not bother to check for .. entries pointing to a directory's

parent, because these were not accurate on the FAT storage card I had (they

may be more useful on other filesystems). If you want you can modify the

script to first run the inode scan to completion without

recovering anything, then analyze them, discarding any top-level ones that

are also contained within others. However, running the scan to completion

is likely to take far longer than looking at the directories by hand.

As it is, you can interrupt the script once it has recovered what you want.

If Control-C does not work, use Control-Z to suspend it and do kill

%1 or whatever number bash gave you when you suspended the

script.

This script can take several days to run through a large storage card. You

can speed it up by using dd to take an image of the card to the

hard disk (which likely has faster access times than a card reader); you

can also narrow the range of inodes that are scanned if you have some idea

of the approximate inode numbers of the lost directories (you can get such

an idea by using fls to check on directories that are still there

and that were created in about the same period of time as the lost ones).

After all the directories have been recovered, you can run fsck.vfat

-r and let it return the orphaned space back to the free space, and

then mount the card and copy the recovered directory structures back onto

it.

Some GNU/Linux live CDs have a forensic mode that doesn't touch local

storage media. For example if you boot the GRML

live CD you can select "forensic mode" and can safely inspect attached harddisks

or other media. AFAIK Knoppix has a similar option. -- René

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/brownss.jpg)

Silas Brown is a legally blind computer scientist based in Cambridge UK.

He has been using heavily-customised versions of Debian Linux since

1999.

Copyright © 2010, Silas Brown. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 172 of Linux Gazette, March 2010

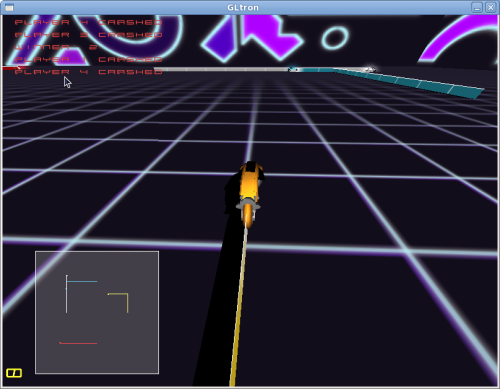

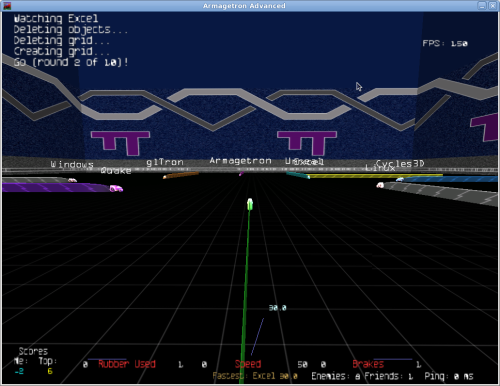

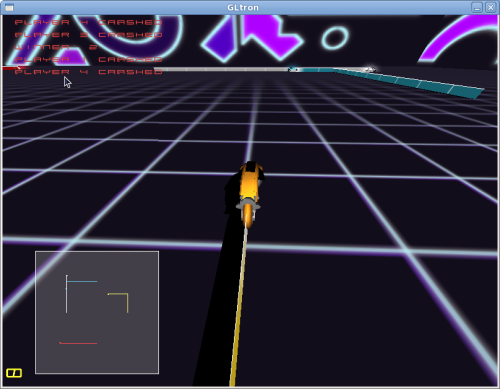

The Next Generation of Linux Games - GLtron and Armagetron Advanced

By Dafydd Crosby

This month in NGLG, I'm taking a look at a couple of 3D games that don't

require too much horsepower, but are still fun diversions.

Lightcycle games should seem familiar to anyone who has played the

'snake' type games that are common on cell phones. The goal is to box in

your opponents with your trail, all the while making sure you don't slam

into their trails (as well as your own!). Often, the games mimic the

look and feel from the 1982 film Tron, and require quick reflexes

and a mind for strategy.

First up is GLtron, which has a good original soundtrack, highly

detailed lightcycles, and very simple controls. The programmers have done a

great job in making sure the game can be expanded with different artpacks,

and the game is quite responsive and fluid. The head programmer, Andreas

Umbach, gave an update on the game's site a few months ago about his plans

to picking the game back up, and it sounds like network play might be

coming out shortly. That's a good thing, because without network play the

game doesn't get quite the replay value that it should. There's a

split-screen multiplayer mode, but those with small screens will have a

hard time with it.

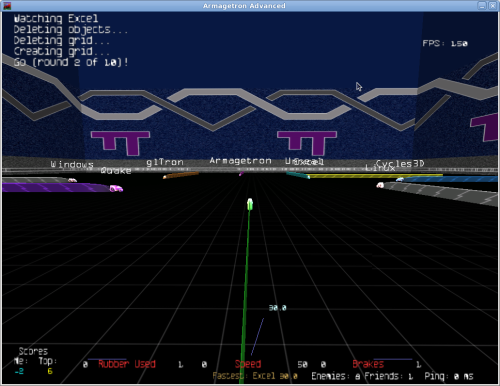

Looking for an online multiplayer version of Tron-clones, I came across

Armagetron Advanced. This was originally a fork of Armagetron, as the lead,

Manual Moos, had stopped updating the original. After the extension by

several open source contributors, Manual returned, and the fork officially

obsoleted the original Armagetron. If you've only played the original, you

might want to give the Advanced version a try.

Armegetron Advanced not only has network multiplayer capability, but it

also has other gameplay elements that add to the fun of the game, such as

'death zones' and lines that diminish over time. Getting on a server was a

snap, and the lag was minimal (a necessity for this type of game). The

folks behind the game have done a fantastic job putting up documentation on

their site: not just on how to tweak the game for that extra little bit of

speed, but also the various tactics and strategies you can use to master

the game.

GLtron's website has the source and

other helpful information about the game, and it's likely that your

distribution already has this packaged. The Armagetron Advanced website has server lists, a

well-maintained wiki, and packages for the major distros.

There are Mac OS X and Windows versions of these games, so you can play

along with your friends who might not use Linux.

If there's a game you would like to see reviewed, or have an update on

one already covered, just drop me a

line.

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/crosby.jpg)

Dafydd Crosby has been hooked on computers since the first moment his

dad let him touch the TI-99/4A. He's contributed to various different

open-source projects, and is currently working out of Canada.

Copyright © 2010, Dafydd Crosby. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 172 of Linux Gazette, March 2010

Away Mission - Recommended for March

By Howard Dyckoff

The Great Recession's impact continues to grow and there are fewer IT and

Open Source conferences on the horizon. However, three upcoming events are

worth mentioning - the annual RSA security confab at the beginning of

March, the Open Source Business Conference (OSBC), and EclipseCon. All

three are in the SF Bay Area and you could spend a great month dropping in on

all three venues. Of course, you will have to risk getting swabbed down by

TSA agents to get there. Security first.

This year, much of the discussion at RSA will focus on the Advanced

Pervasive Threat, or APT. These are the increasingly sophisticated,

multi-pronged attacks and compromises from criminal syndicates and,

reportedly, from foreign state cyberwarfare agencies. Perhaps WW-IV has

already started - but you'll have to come to this year's RSA to find out.

As always, or at least in recent years, a prominent security Fed will be

highlighted in a keynote. This year, the high-profile industry experts

include:

- Robert Mueller, Director of the FBI

- Richard Clarke, former U.S. Cyber Security Czar

- Michael Chertoff, former U.S. Secretary of Homeland Security

- P.W. Singer, Director of the Brookings Institution's 21st Century Defense Initiative

If you would like to attend, visit this link: http://www.rsaconference.com/2010/usa/last-chance/prospects.htm

On the other hand, some vendors like Tipping Point and Qualsys are offering

free expo passes that that will allow you to attend the Monday sessions

organized by the Trusted Computing Group (TCG). I definitely recommend

going to the Monday TCG sessions. It may be a little late for this deal,

but try code SC10TPP.

OSBC is in its 7th year and is the leading business-oriented venue for

discussing open source companies and business models. However, some of the

sessions do seem to be updates from the previous years.

It will be held again at the attractive and compact Palace Hotel in

downtown San Francisco which is a bit of a tourist magnet by itself. The

West exit of the Montgomery St. BART station brings you up right by the

entrance.

For this year the keynote addresses look at the developing open source

market:

- Jim Whitehurst, CEO of Red Hat, will speak on evolving open source market.

- Facebook insider David Recordon discusses how the popular social media site has scaled up using open source as a major part of its infrastructure.

- Tim O'Reilly will discuss the varied opportunities that open source presents.

- and Bob Sutor, IBM VP for Open Source and Linux, asks the hard questions about open source software and the communities that produce it.

Here's the agenda for this year's Open Source Business Conference: http://www.osbc.com/ehome/index.php?eventid=7578&tabid=3659

And this link shows the sessions held in 2009: http://www.infoworld.com/event/osbc/09/osbc_sessions.html

The EclipseCon schedule gets morphed for 2010. It will offer EclipseCon

2010 talks on dozens of topics, going from 12-minute lightning talks,

which introduce new topics, to three-hour tutorials. For four days, there will be

morning hands-on tutorials, then technical sessions in the afternoon,

followed later by panel discussions. This format might led to less

conference burnout.

EclipseCon this year will offer a new Unconference in addition to its usual

evening BOFs. The first three nights (Monday-Wednesday) will offer three

rooms with AV and a chance to present any Eclipse-relevant topic on a

first-come, first-served basis. These presentations must be short (a

maximum of 25 minutes) and focused.

If you are quick, you can save a bit on the EclipseCon price - the advance

registration price is $1795 until March 19, 2010. Here's the link to

register: http://www.eclipsecon.org/2010/registration/

Last year's EclipseCon was enjoyable but compressed and filled with session

conflicts. To view many, many recorded videos from last year's eclipse,

just go to http://www.eclipsecon.org/2009

and select a session to play. I would recommend the sessions on Android

and the Eclipse Ecosystem, e4 - flexible resources for next generation

applications and tools, and Sleeping Around: Writing tools that work in

Eclipse, Visual Studio, Ruby, and the Web.

Talkback: Discuss this article with The Answer Gang

Howard Dyckoff is a long term IT professional with primary experience at

Fortune 100 and 200 firms. Before his IT career, he worked for Aviation

Week and Space Technology magazine and before that used to edit SkyCom, a

newsletter for astronomers and rocketeers. He hails from the Republic of

Brooklyn [and Polytechnic Institute] and now, after several trips to

Himalayan mountain tops, resides in the SF Bay Area with a large book

collection and several pet rocks.

Howard maintains the Technology-Events blog at

blogspot.com from which he contributes the Events listing for Linux

Gazette. Visit the blog to preview some of the next month's NewsBytes

Events.

Copyright © 2010, Howard Dyckoff. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 172 of Linux Gazette, March 2010

Tunnel Tales 1

By Henry Grebler

Justin

I met Justin when I was contracting to one of the world's biggest

computer companies, OOTWBCC, building Solaris servers for one of

Australia's biggest companies (OOABC). Justin is in EBR (Enterprise

Backup and Recovery). (OOTWBCC is almost certainly the world's most

prolific acronym generator (TWMPAG).) I was writing scripts to

automate much of the install of EBR.

To do a good job of developing automation scripts, one needs a test

environment. Well, to do a good job of developing just about anything,

one needs a test environment. In our case, there was always an

imperative to rush the machines we were building out the door and

into production (pronounced BAU (Business As Usual) at TWMPAG).

Testing on BAU machines was forbidden (fair enough).

Although OOTWBCC is a huge multinational, it seems to be reluctant to

invest in hardware for infrastructure. Our test environment consisted

of a couple of the client's machines. They were "network orphans",

with limited connectivity to other machines.

Ideally, one also wants a separate development environment, especially

a repository for source code. Clearly this was asking too much, so

Justin and I shrugged and agreed to use one of the test servers as a

CVS repository.

The other test machine was constantly being trashed and rebuilt from

scratch as part of the test process. Justin started to get justifiably

nervous. One day he came to me and said that we needed to back up the

CVS repository. "And while we're at it, we should also back up a few

other directories."

Had this been one of the typical build servers, it would have had

direct access to all of the network, but, as I said before, this one

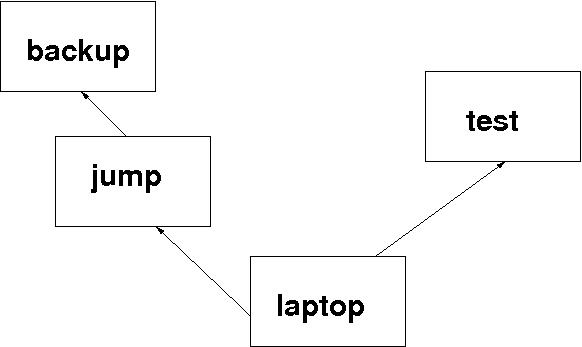

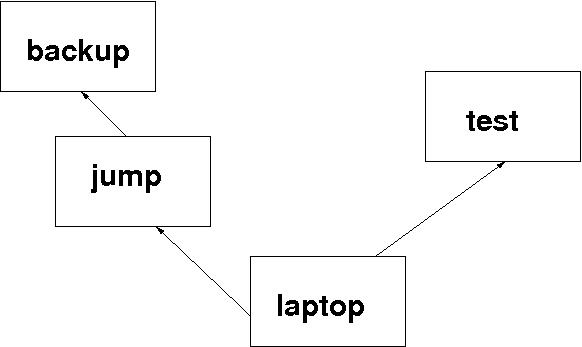

was a network orphan. Diagram 1 indicates the

relevant connectivity.

test the test machine and home of the CVS repository

laptop my laptop

jump an intermediate machine

backup the machine which partakes in regular tape backup

If we could get the backup data to the right directory on

backup, the corporate EBR system would do the rest.

The typical response I got to my enquiries was, "Just copy the stuff to

your laptop, and then to the next machine, and so on." If this were a

one-off, I might do that. But what's the point of a single

backup? If this activity is not performed at least daily, it will soon

be useless. Sure, I could do it manually. Would you?

Step by Step

I'm going to present the solution step by step. Many of you will find

some of this just motherhoods[1]. Skip ahead.

My laptop ran Fedora 10 in a VirtualBox under MS Windows XP. All my

useful work was done in the Fedora environment.

Two machines

If I want to copy a single file from the test machine to my laptop,

then, on the laptop, I would use something like:

scp -p test:/tmp/single.file /path/to/backup_dir

This would create the file

/path/to/backup_dir/single.file on

my laptop.

To copy a whole directory tree once, I would use:

scp -pr test:/tmp/top_dir /path/to/backup_dir

This would populate the directory

/path/to/backup_dir/top_dir.

Issues

-

Why did I say "once"? scp is fine if you want to copy

a directory tree once. And it's fine if the directory tree is not

large. And it's fine if the directory tree is extremely volatile (ie

frequently changes completely (or pretty much)).

But what we have here is a directory tree which simply accumulates

incremental changes. I guess over 80% of the tree will be the same

from one day to the next. Admittedly, the tree is not large, and the

network is pretty quick, but even so, it's nice to do it the right way

- if possible.

-

There is another problem, potentially a much bigger problem.

The choice of scp or some other program is about

efficiency and elegance. This problem can be a potential roadblock:

permissions.

The way scp works, I have to log in to test.

But I can only directly log in as myself (my user id on

test). If I want root privileges I have to use

su or sudo. In either case, I'd have to supply

another password. I could do it that way, but it requires even

stronger magic than I'm using so far (and I think it could be a bit

less secure than the solution I plan to present).

-

Have another look at Diagram 1. Notice the

arrows? Yes, Virginia, they really are one-way arrows. (The link

between jump and backup is probably

two-way in real life, but the exercise is more powerful if it's

one-way, so let's go with the diagram as it is.)

To get from my laptop to the test machine, I go via an SSH proxy,

which I haven't drawn because it would complicate the diagram

unnecessarily. A firewall might be set up the same way. In either

case, I can establish an SSH session from my laptop to the other

machine; but I can't do the reverse. It's like a diode.

I'm going to show you how an SSH tunnel allows access in the other

direction. Not only that, but it will make jump

directly accessible from test as well!

-

One final point about ssh/scp. If I do nothing special, when

I run those scp commands above, I'll get a prompt

like:

henry@test's password:

and I will have to enter my password before the copy will take place.

That's not very helpful for an automatic process.

Look, ma! No hands!

Whenever I expect to go to a machine more than once or twice, I take

the time to set up $HOME/.ssh/authorized_keys on the

destination machine. See ssh(1). Instead of using passwords,

the SSH client on my laptop

proves that it has access to the private key and the server

checks that the corresponding public key is authorized to

accept the account.

- ssh(1)

It all happens "under the covers". I invoke

scp, and the

files get transferred. That's convenient for me, absolutely essential

for a cron job.

Permissions

There's more than one way to skin this cat. I decided to use a cron

job on test to copy the required backup data to an

intermediate repository. I don't simply copy the directories, I

package them with tar, and compress the tarfile with

bzip2. I then make myself the owner of the result. (I could

have used zip.)

The point of the tar is to preserve the permissions of all

the files and directories being backed up. The point of the

bzip2 is to make the data to be transferred across the

network, and later copied to tape, as small as possible.

(Theoretically, some of these commendable goals may be defeated to

varying degrees by "smart" technology. For instance,

rsync has the ability to compress; and most modern

backup hardware performs compression in the tape drive.) The point of

the chown is to make the package accessible to a cron job on

my laptop running as me (an unprivileged user on

test).

Here's the root crontab entry:

0 12 * * * /usr/local/sbin/packitup.sh >/dev/null 2>&1 # Backup

At noon each day, as the root user, run a script called

packitup.sh:

#! /bin/sh

# packitup.sh - part of the backup system

# This script collates the data on test in preparation for getting it

# backed up off-machine.

# Run on: test

# from: cron or another script.

BACKUP_PATHS='

/var/cvs_repository

/etc

'

REPO=/var/BACKUPS

USER=henry

date_stamp=`date '+%Y%m%d'`

for dir in $BACKUP_PATHS

do

echo Processing $dir

pdir=`dirname $dir`

tgt=`basename $dir`

cd $pdir

outfile=$REPO/$tgt.$date_stamp.tar.bz2

tar -cf - $tgt | bzip2 > $outfile

chown $USER $outfile

done

exit

If you are struggling with any of what I've written so far, this

article may not be for you. I've really only included much of it for

completeness. Now it starts to get interesting.

rsync

Instead of scp, I'm going to use rsync which invokes

ssh to access remote machines. Both scp and

rsync rely on SSH technology; this will become relevant when

we get to the tunnels.

Basically, rsync(1) is like scp on steroids. If I

have a 100MB of data to copy and 90% is the same as before,

rsync will copy a wee bit more than 10MB, whereas

scp will copy all 100MB. Every time.

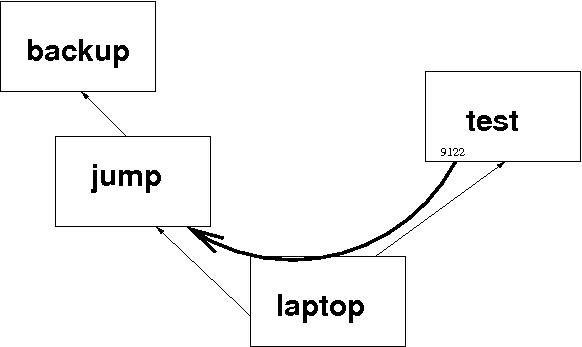

Tunnels, finally!

Don't forget, I've already set up certificates on all the remote

machines.

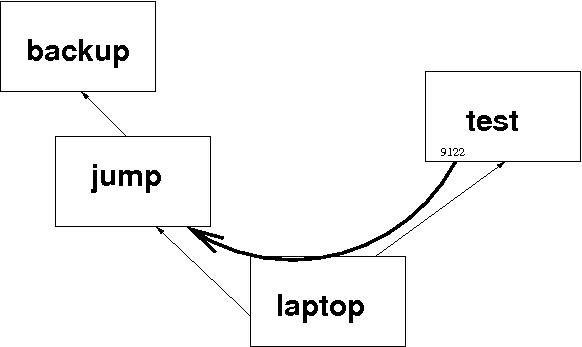

To set up a tunnel so that test can access

jump directly, I simply need:

ssh -R 9122:jump:22 test

Let's examine this carefully because it is the essence of this

article. The command says to establish an SSH connection to

test. "While you're at it, I want you to listen on a

port numbered 9122 on test. If

someone makes a connection to port 9122 on

test, connect the call through to port

22 on jump." The result looks like

this:

So, immediately after the command in the last box, I'm actually logged

in on test. If I now issue the command

henry@test:~$ ssh -p 9122 localhost

I'll be logged in on

jump. Here's what it all looks

like (omitting a lot of uninteresting lines):

henry@laptop:~$ ssh -R 9122:jump:22 test

henry@test:~$ ssh -p 9122 localhost

Last login: Wed Feb 3 12:41:18 2010 from localhost.localdomain

henry@jump:~$

It's worth noting that you don't "own" the tunnel; anyone can use it.

And several sessions can use it concurrently. But it only exists while

your first ssh command runs. When you exit from

test, your tunnel disappears (and all sessions using

the tunnel are broken).

Importantly, by default, "the listening socket on the server will be

bound to the loopback interface only" - ssh(1). So, by

default, a command like the following won't work:

ssh -p 9122 test # usually won't work

ssh: connect to address 192.168.0.1 port 9122: Connection refused

Further, look carefully at how I've drawn the tunnel. It's like that

for a reason. Although, logically the tunnel seems to be a

direct connection between the 2 end machines, test

and jump, the physical data path is via

laptop. You haven't managed to skip a machine; you've

only managed to avoid a manual step. There may be performance

implications.

Sometimes I Cheat

The very astute amongst my readers will have noticed that this hasn't

solved the original problem. I've only tunneled to

jump; the problem was to get the data to

backup. I could do it using SSH tunnels, but until

next time, you'll have to take my word for it. Or work it out for

yourself; it should not be too difficult.

But, as these things sometimes go, in this case, I had a much simpler

solution:

henry@laptop:~$ ssh jump

henry@jump:~$ sudo bash

Password:

root@jump:~# mount backup:/backups /backups

root@jump:~# exit

henry@jump:~$ exit

henry@laptop:~$

I've NFS-mounted the remote directory

/backups on its

local namesake. I only need to do this once (unless someone reboots

jump). Now, an attempt to write to the directory

/backups on

jump results in the data

being written into the directory

/backups on

backup.

The Final Pieces

Ok, in your mind, log out of all the remote machines mentioned in

Tunnels, finally!. In real life, this is going

to run as a cron job.

Here's my (ie user henry's) crontab entry on

laptop:

30 12 * * * /usr/local/sbin/invoke_backup_on_test.sh

At 12:30 pm each day, as user

henry, run a script

called

invoke_backup_on_test.sh:

#! /bin/sh

# invoke_backup_on_test.sh - invoke the backup

# This script should be run from cron on laptop.

# Since test cannot access the backup network, it cannot get to the

# real "backup" directly. An ssh session from "laptop" to "test"

# provides port forwarding to allow ssl to access the jump machine I

# have nfs-mounted /backups from "backup" onto the jump machine.

# It's messy and complicated, but it works.

ssh -R 9122:jump:22 test /usr/local/sbin/copy2backup.sh

Of course I had previously placed copy2backup.sh on

test.

#! /bin/sh

# copy2backup.sh - copy files to be backed up

# This script uses rsync to copy files from /var/BACKUPS to

# /backups on "backup".

# 18 Sep 2009 Henry Grebler Perpetuate (not quite the right word) pruning.

# 21 Aug 2009 Henry Grebler Avoid key problems.

# 6 Aug 2009 Henry Grebler First cut.

#=============================================================================#

# Note. Another script, prune_backups.sh, discards old backup data

# from the repo. Use rsync's delete option to also discard old backup

# data from "backup".

PATH=$PATH:/usr/local/bin

# Danger lowbrow: Do not put tabs in the next line.

RSYNC_RSH="ssh -o 'NoHostAuthenticationForLocalhost yes' \

-o 'UserKnownHostsFile /dev/null' -p 9122"

RSYNC_RSH="`echo $RSYNC_RSH`"

export RSYNC_RSH

rsync -urlptog --delete --rsync-path bin/rsync /var/BACKUPS/ \

localhost:/backups

Really important stuff

Notes on copy2backup.sh.

Recap

-

Everyday at noon packitup.sh on

test gathers the data to be backed up into a local

holding repository.

-

Everyday, half an hour later, if my laptop is turned on, a local

script, invoke_backup_on_test.sh is invoked. It

simply connects to test, establishing an SSH tunnel

as it does, and invokes the script which performs the backup,

copy2backup.sh.

-

copy2backup.sh does the actual copy over the SSH

tunnel using rsync to transport the data.

-

When copy2backup.sh completes, it exits, causing the

ssh command to exit and the SSH tunnel to be torn down.

-

Next day, it all starts over again.

Wrinkles

It's great when you finally get something like this to work. All the

pieces fall into place - it's very satisfying.

Of course, you monitor things carefully for the first few days. Then

you check less frequently. You start to gloat.

... until a few weeks elapse and you gradually develop a gnawing

concern. The data is incrementally increasing in size as more days

elapse. At first, that's a good thing. One backup good, two backups

better, ... Where does it end? Well, at the moment, it doesn't. Where

should it end? Good question. But, congratulations on realising that

it probably should end.

When I did, I wrote prune_backups.sh. You can see

when this happened by examining the history entries in

copy2backup.sh: about 6 weeks after I wrote the first

cut. Here it is:

#! /bin/sh

# prune_backups.sh - discard old backups

# 18 Sep 2009 Henry Grebler First cut.

#=============================================================================#

# Motivation

# packitup.sh collates the data on test in preparation for getting

# it backed up off-machine. However, the directory just grows and

# grows. This script discards old backup sets.

#----------------------------------------------------------------------#

REPO=/var/BACKUPS

KEEP_DAYS=28 # Number of days to keep

cd $REPO

find $REPO -type f -mtime +$KEEP_DAYS -exec rm {} \;

Simple, really. Just delete anything that is more than 28 days old. NB

more than rather than

equal to. If for

some reason the cron job doesn't run for a day or several, when next

it runs it will catch up. This is called

self-correcting.

Here's the crontab entry:

0 10 * * * /usr/local/sbin/prune_backups.sh >/dev/null 2>&1

At 10 am each day, as the root user, run a script called

prune_backups.sh.

But, wait. That only deletes old files in the repository on

test. What about the copy of this data on

jump?!

Remember the --delete above? It's an rsync option; a

very dangerous one. That's not to say that you shouldn't use it; just

use it with extra care.

It tells rsync that if it discovers a file on the destination

machine that is not on the source machine, then it should delete the

file on the destination machine. This ensures that the local and

remote repositories stay truly in sync.

However, if you screw it up by, for instance, telling rsync

to copy an empty directory to a remote machine's populated directory,

and you specify the --delete option, you'll delete

all the remote files and directories. You have been warned: use it

with extra care.

Risks and Analysis

There is a risk that port 9122 on test may be in use

by another process. That happened to me a few times. Each time, it

turned out that I was the culprit! I solved that by being more

disciplined (using another port number for interactive work).

You could try to code around the problem, but it's not easy.

ssh -R 9122:localhost:22 fw

Warning: remote port forwarding failed for listen port 9122

Even though it could not create the tunnel (aka port forwarding), ssh

has established the connection. How do you know if port forwarding

failed?

More recent versions of ssh have an option which caters for

this: ExitOnForwardFailure, see ssh_config(5).

If someone else has created a tunnel to the right machine, it doesn't

matter. The script will simply use the tunnel unaware that it is

actually someone else's tunnel.

But if the tunnel connects to the wrong machine?

Hey, I don't provide all the answers; I simply mention the risks,

maybe make some suggestions. In my case, it was never a serious

problem. Occasionally missing a backup is not a disaster. The scripts

are all written to be tolerant to the possibility that they may not

run every day. When they run, they catch up.

A bigger risk is the dependence on my laptop. I tried to do something

about that but without long-term success. I'm no longer there; the

laptop I was using will have been recycled.

I try to do the best job possible. I can't always control my environment.

Debugging

Because this setup involves cron jobs invoking scripts which in turn

invoke other scripts, this can be a nightmare to get right. (Once it's

working, it's not too bad.)

My recommendation: run the pieces by hand.

So start at a cron entry (which usually has output redirected to

/dev/null) and invoke it manually (as the relevant user)

without redirecting the output.

If necessary, repeat, following the chain of invoked scripts. In other

words, for each script, invoke each command manually. It's a bit

tiresome, but none of the scripts is very long. Apart from the comment

lines, they are all very dense. The best example of density is the

ssh command which establishes the tunnel.

Use your mouse to copy and paste for convenience and to avoid

introducing transcription errors.

Coming Up

That took much longer than I expected. I'll leave at least one other

example for another time.

[1]

A UK/AU expression, approximately "boring stuff you've heard before".

-- Ben

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/grebler.jpg)

Henry was born in Germany in 1946, migrating to Australia in 1950. In

his childhood, he taught himself to take apart the family radio and put

it back together again - with very few parts left over.

After ignominiously flunking out of Medicine (best result: a sup in

Biochemistry - which he flunked), he switched to Computation, the name

given to the nascent field which would become Computer Science. His

early computer experience includes relics such as punch cards, paper

tape and mag tape.

He has spent his days working with computers, mostly for computer

manufacturers or software developers. It is his darkest secret that he

has been paid to do the sorts of things he would have paid money to be

allowed to do. Just don't tell any of his employers.

He has used Linux as his personal home desktop since the family got its

first PC in 1996. Back then, when the family shared the one PC, it was a

dual-boot Windows/Slackware setup. Now that each member has his/her own

computer, Henry somehow survives in a purely Linux world.

He lives in a suburb of Melbourne, Australia.

Copyright © 2010, Henry Grebler. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 172 of Linux Gazette, March 2010

Logging to a Database with Rsyslog

By Ron Peterson

Introduction

RSyslog extends and improves on

the venerable syslogd service. It supports the standard configuration

syntax of its predecessor, but offers a number of more advanced

features. For example, you can construct advanced filtering expressions

in addition to the simple and limiting facility.priority selectors. In

addition to the usual log targets, you can also write to a number of

different databases. In this article, I'm going to show you how to

combine these features to capture specific information to a database.

In addition, I'll show you how to use trigger functions to parse the log

messages into a more structured format.

Prerequisites

Obviously you'll need to have rsyslog installed. My examples will be

constructed using the packaged version of rsyslog available in the

current Debian Stable (Lenny). You'll also need the plugin module

for writing to PostgreSQL.

1122# dpkg -l "*rsyslog*" | grep ^i

ii rsyslog 3.18.6-4 enhanced multi-threaded syslogd

ii rsyslog-pgsql 3.18.6-4 PostgreSQL output plugin for rsyslog

I'll be using PostgreSQL version 8.4.2, built with plperl support. I'm

using plperl to write my trigger functions, to take advantage of Perl's

string handling.

I'm not going to go into any detail about how to get these tools

installed and running, as there are any number of good resources (see

below) already available to help with that.

RSyslog Configuration

My distribution puts the RSyslog configuration files in two places.

It all starts with /etc/rsyslog.conf. Near the top of this file, there

is a line like this, which pulls additional config files out of the

rsyslog.d directory:

$IncludeConfig /etc/rsyslog.d/*.conf

I'm going to put my custom RSyslog configuration in a file called

/etc/rsyslog.d/lg.conf. We're going to use this file to do several

things:

- Load the database driver module

- Configure RSyslog to buffer database output

- Define a template for mapping syslog output to the database

- Define filter expressions to net just the log messages we care about

Let's start with something easy - loading the database module.

$ModLoad ompgsql.so

That wasn't too bad now, was it? :)

So let's move on. The next thing we'll do is configure RSyslog to

buffer the output headed for the database. There's

a good

section about why and how to do this in the RSyslog documentation.

The spooling mechanism we're configuring here allows RSyslog to queue

database writes during peak activity. I'm also including a commented

line that shows you something you should not do.

$WorkDirectory /var/tmp/rsyslog/work

# This would queue _ALL_ rsyslog messages, i.e. slow them down to rate of DB ingest.

# Don't do that...

# $MainMsgQueueFileName mainq # set file name, also enables disk mode

# We only want to queue for database writes.

$ActionQueueType LinkedList # use asynchronous processing

$ActionQueueFileName dbq # set file name, also enables disk mode

$ActionResumeRetryCount -1 # infinite retries on insert failure

Now we're going to define an RSyslog template that we'll refer to in

just a minute when we write our filter expression. This template

describes how we're going to stuff log data into a database table. The

%percent% quoted strings are RSyslog 'properties'. A list of the

properties you might use can be found in

the fine

documentation. You'll see why I call this 'unparsed' in just a

moment.

$template mhcUnparsedSSH, \

"INSERT INTO unparsed_ssh \

( timegenerated, \

hostname, \

tag, \

message ) \

VALUES \

( '%timegenerated:::date-rfc3339%', \

'%hostname%', \

'%syslogtag%', \

'%msg%' );", \

stdsql

All that's left to do on the RSyslog side of things is to find some

interesting data to log. I'm going to log SSH login sessions.

if $syslogtag contains 'sshd' and $hostname == 'ahost' then :ompgsql:localhost,rsyslog,rsyslog,topsecret;mhcUnparsedSSH

This all needs to be on one line. It probably helps at this juncture

to look at some actual log data as it might appear in a typical log file.

Feb 2 10:51:24 ahost imapd[16376]: Logout user=ausername host=someplace.my.domain [10.1.56.8]

Feb 2 10:51:24 ahost sshd[17093]: Failed password for busername from 10.1.34.3 port 43576 ssh2

Feb 2 10:51:27 ahost imapds[17213]: Login user=cusername host=anotherplace.outside.domain [256.23.1.34]

Feb 2 10:51:27 ahost imapds[17146]: Killed (lost mailbox lock) user=dusername host=another.outside.domain [256.234.34.1]

Feb 2 10:51:27 ahost sshd[17093]: Accepted password for busername from 10.1.34.3 port 43576 ssh2

This is real log data, modified to protect the innocent, from

/var/log/auth.log on one of my servers. In a standard syslog setup,

this data would be captured with a configuration entry for the 'auth'

facility. As you can see, it contains authorization information for

both IMAP and SSH sessions. For my current purposes, I only care about

SSH sessions. In a standard syslog setup, teasing this information

apart can be a real pain, because you only have so many facility.

selectors to work with. With RSyslog, you can

write advanced