...making Linux just a little more fun!

Mailbag

This month's answers created by:

[ Sayantini Ghosh, Amit Kumar Saha, Ben Okopnik, Kapil Hari Paranjape, Karl-Heinz Herrmann, René Pfeiffer, Minh Van Nguyen, Neil Youngman, Paul Sephton, Rick Moen, Suramya Tomar, Thomas Adam ]

...and you, our readers!

2-Cent Tips

2 cent tip: juxtapose image and text in LaTeX

Minh Nguyen [nguyenminh2 at gmail.com]

Mon, 8 Oct 2007 10:05:37 +1000

Greetings LG readers,

When using LaTeX, there are times when you might want to place an image and a short paragraph side by side. Here's an illustration of what I'm referring to:

+---------------------+---------------------+
| image goes here     | short paragraph     |
|                     | goes here           |
|                     |                     |
|                     |                     |
|                     |                     |
|                     |                     |
|                     |                     |
|                     |                     |
+---------------------+---------------------+

In that case, try using the minipage environment. The following snippet of LaTeX can be used to implement the above illustration:

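% \includegraphics requires \usepackage{graphicx} in the preamble.
% The first minipage option must be t, c or b; an unrecognised value
% such as [l] is silently treated as [c], so [c] is used here.
\begin{center}
\begin{minipage}[c][4.5cm][t]{4cm}
\includegraphics[scale=0.7]{path/to/your/eps/image/file}
\end{minipage} \qquad\qquad
%
\begin{minipage}[c][4.5cm][t]{6.5cm}
A short paragraph of text goes here.
\end{minipage}
\end{center}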

Play around with the various options to get your desired effect.

Regards

Minh Van Nguyen

2 cent tip: boot Slackware 12.0 using grub

Minh Nguyen [nguyenminh2 at gmail.com]

Mon, 8 Oct 2007 09:58:55 +1000

Greetings LG readers,

The default boot loader in Slackware is LILO. On some platforms, such as the IBM ThinkPad R40, my experience is that LILO can take quite some time before it boots Slackware 12.0. You can add the option "compact" to your /etc/lilo.conf to speed up the process, but this is not guaranteed to work on all platforms. An alternative is to use the GRUB boot loader.

If you boot Slackware 12.0 with GNU GRUB 0.97, you might see the following boot message on your screen:

[snip]
> Testing root filesystem
>
> * ERROR: Root partition has already been mounted read-write. Cannot check!
>
> For filesystem checking to work properly, your system must initially mount
> the root partition as read only. Please modify your kernel with 'rdev' so that
> it does this. If you're booting with LILO, add a line:
>
> read-only
>
> to the Linux section in your /etc/lilo.conf and type 'lilo' to reinstall it.
>
> If you boot from a kernel on a floppy disk, put it in the drive and type:
> rdev -R /dev/fd0 1
>
> If you boot from a bootdisk, or with Loadlin, you can add the 'ro' flag.
>
> This will fix the problem AND eliminate this annoying message. :^)
>
> Press ENTER to continue.

The boot process hangs until you actually press the ENTER key on your keyboard. To get rid of this boot message and the pause, try the GRUB boot option "ro", or add this option to the kernel line in your /boot/grub/menu.lst. For example, here is a snippet of my /boot/grub/menu.lst:

[snip]
> # Booting Linux distribution Slackware 12.0
> title Slackware 12.0
> root (hd0,2)
> kernel /boot/vmlinuz root=/dev/hda3 vga=0x31a resume=/dev/hda2 splash=verbose showopts ro
[snip]

Regards

Minh Van Nguyen
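For anyone with several kernel entries to update, here is a minimal Python sketch that appends "ro" to each GRUB legacy `kernel` line that doesn't already say `ro` or `rw`. The function name `add_ro` is hypothetical, and it only transforms text; back up /boot/grub/menu.lst before writing the result over it.

```python
def add_ro(menu_lst: str) -> str:
    """Append ' ro' to every GRUB legacy 'kernel' line lacking 'ro' or 'rw'."""
    out = []
    for line in menu_lst.splitlines():
        words = line.split()
        # only touch kernel lines; title, root and comment lines are left alone
        if words and words[0] == "kernel" and not {"ro", "rw"} & set(words[1:]):
            line = line.rstrip() + " ro"
        out.append(line)
    # keep a trailing newline if the input had one
    return "\n".join(out) + ("\n" if menu_lst.endswith("\n") else "")
```

It could be applied with something like `print(add_ro(open("/boot/grub/menu.lst").read()), end="")` and the output checked by eye before saving it.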

2 Cent tip: Finding your Kernel using Grub

Martin J Hooper [martinjh at blueyonder.co.uk]

Mon, 08 Oct 2007 15:16:39 +0100

If you have done something such as adding a new partition that changes all your partition numbers so that grub can't find the right kernel, do this at a grub prompt:

find <kernel>

Grub will then print the device(s), such as (hd0,2), that contain the kernel in question, which allows you to edit your menu.lst to suit.

[ Thread continues here (5 messages/4.99kB) ]
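GRUB legacy names such as (hd0,2) count both disks and partitions from zero, while Linux counts partitions from one; that is why Minh's menu.lst above pairs root (hd0,2) with root=/dev/hda3. Here is a small Python sketch of that translation. It assumes the BIOS disk order matches Linux's disk naming order, which is not guaranteed on every machine, and `grub_to_linux` is a hypothetical helper.

```python
import re


def grub_to_linux(device: str, disk_prefix: str = "hd") -> str:
    """Translate a GRUB legacy name like '(hd0,2)' into a Linux device name.

    Assumes BIOS disk N is the Nth Linux disk (hd0 -> /dev/hda or /dev/sda)
    and that GRUB legacy counts partitions from 0 while Linux counts from 1.
    """
    m = re.fullmatch(r"\(hd(\d+),(\d+)\)", device.strip())
    if not m:
        raise ValueError(f"not a GRUB legacy partition name: {device!r}")
    disk, part = int(m.group(1)), int(m.group(2))
    letter = chr(ord("a") + disk)  # 0 -> a, 1 -> b, ...
    return f"/dev/{disk_prefix}{letter}{part + 1}"
```

For example, `grub_to_linux("(hd0,2)")` gives "/dev/hda3", and `grub_to_linux("(hd1,0)", "sd")` gives "/dev/sdb1".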

Our Mailbag

article "A Question Of Rounding" in issue #143

Ben Okopnik [ben at linuxgazette.net]

Wed, 3 Oct 2007 07:36:22 -0500

On Wed, Oct 03, 2007 at 12:36:53PM +0200, Pierre Habouzit wrote:

> Hi,
>
> I would like to report that the article "A Question Of Rounding" in
> your issue #143 is completely misleading, because its author doesn't
> understand how floating point works. Hence you published an article that
> is particularly wrong.

Thanks for your opinion; I've forwarded your response to the author.

> I'm sorry, but this article is very wrong, and gives false information
> on a matter that isn't very well understood by many programmers, hence I
> beg you to remove this article, for the sake of the teachers who already
> have to fight against enough preconceived ideas about IEEE 754 numbers.

Sorry, that's not in the cards - but we'll be happy to publish your email in the next Mailbag.

I understood, even before I approved the article for publication, that a lot of people had rather strong feelings and opinions on this issue; i.e., the author getting flamed when he tried to file a bug report on this was a bit of a clue. Those opinions, however, don't make him wrong: whatever other evils can be ascribed to Micr0s0ft, their approach to IEEE-754 agrees with his - and is used by the majority of programmers in the world. That's not a guarantee that they (or he) are right - but it certainly implies that his argument stands on firm ground and has merit.

You are, of course, welcome to write an article that presents your viewpoint. If it meets our requirements and guidelines (http://linuxgazette.net/faq/author.html), I'd be happy to publish it.

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *

[ Thread continues here (36 messages/102.86kB) ]

rsync not working when trying to update LG mirror

Suramya Tomar [security at suramya.com]

Wed, 03 Oct 2007 22:33:18 +0530

Hey everyone,

I maintain a mirror for LG at my site, and recently it was brought to my attention that the mirror had gone out of date. I had the update script set up as a cron job, so I didn't monitor it. But apparently it has been failing with the following errors:

u38576182:~/public_html/suramya.com/linux/gazette > ./sync_mirror.sh
rsync: failed to connect to linuxgazette.net: Connection refused
rsync error: error in socket IO (code 10) at clientserver.c(97)
rsync: failed to connect to linuxgazette.net: Connection refused
rsync error: error in socket IO (code 10) at clientserver.c(97)

The sync script contains the following code:

u38576182:~/public_html/suramya.com/linux/gazette > cat sync_mirror.sh
RSYNC_RSH=/usr/bin/ssh
export RSYNC_RSH
rsync -avz --exclude /ftpfiles/ linuxgazette.net::lg-all www_root
rsync -avz linuxgazette.net::lg-ftp ftpfiles

This only happens on this one particular system. When I try it on my local system, it runs without issues. Any idea why linuxgazette.net is rejecting the rsync connection? If I try to ssh to linuxgazette.net from the server, I get a password prompt.

This server has rsync version 2.5.6cvs, protocol version 26, installed. It's a shared hosting account, so I don't have root access on the server.

Any help would be appreciated.

Thanks,

Suramya

--

Name : Suramya Tomar

Homepage URL: http://www.suramya.com

[ Thread continues here (16 messages/21.87kB) ]
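The "Connection refused" messages come from rsync's attempt to reach the rsync daemon over TCP (the `host::module` syntax), so one quick check is whether that port accepts connections at all from the shared host. Below is a minimal Python sketch; it assumes the daemon listens on the standard rsync port, 873, and `port_open` is a hypothetical helper name.

```python
import socket


def port_open(host, port=873, timeout=5.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the name and tries each address in turn
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # "Connection refused", timeouts and DNS failures all land here
        return False
```

Running `print(port_open("linuxgazette.net"))` on both the shared host and the local machine would show whether the difference lies in plain TCP reachability of that port.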

480% CPU?

Neil Youngman [ny at youngman.org.uk]

Mon, 22 Oct 2007 13:07:52 +0100

Would anyone care to hazard a guess why top is claiming that the top 3 processes are between them using 480% CPU on a dual core box?

Neil

[ Thread continues here (6 messages/5.22kB) ]

problem with usb support

nishith datta [nkdiitd2002 at yahoo.com]

Sat, 20 Oct 2007 11:59:07 -0700 (PDT)

hi

I have an HP Pavilion dv1000 series laptop, and it's loaded with Red Hat. I am unable to get USB support working on it.

I checked lsmod, and it shows the uhci and ohci drivers inserted. However, I don't see any hid driver or usbcore module. I did a slocate on my system but didn't find them either. I definitely chose to have this support when compiling my 2.6.22.6 kernel.

What am I doing wrong?

nishith

[ Thread continues here (2 messages/1.70kB) ]
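A relevant detail: options set to `y` in the kernel's .config are compiled into the kernel image itself, so they never appear in lsmod or as .ko files; only options set to `m` become loadable modules. This Python sketch reports which way a few options were set. The option names below are my assumption of the relevant 2.6.22 symbols, and `kconfig_states` is a hypothetical helper.

```python
def kconfig_states(config_text, options):
    """Report how each CONFIG_ option is set in a kernel .config file."""
    states = {opt: "not set" for opt in options}
    for raw in config_text.splitlines():
        line = raw.strip()
        # blank lines and "# CONFIG_FOO is not set" comments carry no value
        if not line or line.startswith("#"):
            continue
        name, sep, value = line.partition("=")
        if sep and name in states:
            states[name] = {"y": "built-in", "m": "module"}.get(value, value)
    return states
```

Usage, with a hypothetical source-tree path: `kconfig_states(open("/usr/src/linux/.config").read(), ["CONFIG_USB", "CONFIG_USB_HID", "CONFIG_USB_UHCI_HCD", "CONFIG_USB_OHCI_HCD"])`.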

using smp kernel, get 100% cpu usage on one cpu without any real load on the system

jim ruxton [cinetron at passport.ca]

Mon, 08 Oct 2007 14:21:21 -0400

Hi, I'm on a P4 running an SMP kernel, i.e.

uname -a :

Linux jims-laptop 2.6.20-16-386 #2 Sun Sep 23 19:47:10 UTC 2007 i686 GNU/Linux

For some reason, occasionally one of my CPUs runs away and starts showing 100% CPU usage; however, top only shows the program using the most CPU at 7% or less. Where is that 93% going? This is driving me crazy. I have to reboot the machine to get it back to normal. I've tried Fedora and Kubuntu. Though Kubuntu appeared more stable, it still happened. I played around with changing max_cstate without success. I've just downgraded to a 386 kernel to see if that helps. This isn't a real solution, however.

Do you have any suggestions? I've asked around on forums and IRC channels without luck. Thanks.

Jim

output of cat /proc/cpuinfo :

processor : 0
vendor_id : GenuineIntel
cpu family : 15
model : 2
model name : Intel(R) Pentium(R) 4 CPU 3.06GHz
stepping : 9
cpu MHz : 3059.352
cache size : 512 KB
physical id : 0
siblings : 2
core id : 0
cpu cores : 1
fdiv_bug : no
hlt_bug : no
f00f_bug : no
coma_bug : no
fpu : yes
fpu_exception : yes
cpuid level : 2
wp : yes
flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe cid xtpr
bogomips : 6123.68
clflush size : 64

[ Thread continues here (13 messages/29.23kB) ]
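One way to chase the missing 93%: top's per-process list may not account for time spent handling interrupts or softirqs, but the per-CPU lines in /proc/stat break each CPU's time down by category. Below is a Python sketch that compares two /proc/stat snapshots. The field order follows the proc(5) man page (user, nice, system, idle, iowait, irq, softirq, steal); older kernels may report fewer columns, and the function names are hypothetical.

```python
import time

FIELDS = ("user", "nice", "system", "idle", "iowait", "irq", "softirq", "steal")


def parse_proc_stat(text):
    """Return {'cpu0': [jiffies, ...], ...} for the per-CPU lines of /proc/stat."""
    cpus = {}
    for line in text.splitlines():
        parts = line.split()
        # skip the aggregate "cpu" line and non-CPU lines such as "intr" or "ctxt"
        if parts and parts[0].startswith("cpu") and parts[0] != "cpu":
            cpus[parts[0]] = [int(x) for x in parts[1:1 + len(FIELDS)]]
    return cpus


def cpu_breakdown(before, after):
    """Percentage of each CPU's time spent in each state between two snapshots."""
    old, new = parse_proc_stat(before), parse_proc_stat(after)
    result = {}
    for cpu, counts in new.items():
        deltas = [b - a for a, b in zip(old[cpu], counts)]
        total = sum(deltas) or 1  # guard against a zero-length interval
        result[cpu] = {name: 100.0 * d / total for name, d in zip(FIELDS, deltas)}
    return result


def sample(interval=1.0):
    """Read /proc/stat twice, `interval` seconds apart (Linux only)."""
    with open("/proc/stat") as f:
        before = f.read()
    time.sleep(interval)
    with open("/proc/stat") as f:
        after = f.read()
    return cpu_breakdown(before, after)
```

Calling `sample()` while one CPU is pegged would show whether the time is going to system, irq, softirq or iowait rather than to any user process.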

Unix

Terry T [timburwa at gmail.com]

Mon, 8 Oct 2007 09:16:18 +0200

Hie

I am new to UNIX. I want to copy 30 files with different names using the following command:

ftp -i -s:filename > logfilename.log

The command works well. My problem is that I have to type the same command 30 times, once for each file name. How do I transfer all 30 files at the same time?

[ Thread continues here (7 messages/6.50kB) ]
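Assuming each of the 30 transfers has its own ftp command file (which is what the `-s:filename` form reads), a small Python loop can run the same command once per file and collect all the output in one log. The names `ftp_commands` and `run_all` are hypothetical; the log is opened in append mode so that each run doesn't overwrite the previous one, as a repeated `>` redirect would.

```python
import subprocess


def ftp_commands(script_names):
    """One 'ftp -i -s:NAME' argument list per command file."""
    return [["ftp", "-i", f"-s:{name}"] for name in script_names]


def run_all(script_names, log_path="logfilename.log"):
    """Run every transfer in turn, appending all output to a single log."""
    with open(log_path, "a") as log:
        for argv in ftp_commands(script_names):
            # check=False: carry on with the remaining files if one transfer fails
            subprocess.run(argv, stdout=log, stderr=subprocess.STDOUT, check=False)
```

Usage would be along the lines of `run_all(["file01.txt", "file02.txt", ...])`, with the real command-file names substituted.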

A couple of Perl questions...

Jimmy ORegan [joregan at gmail.com]

Tue, 9 Oct 2007 19:20:41 +0100

I have a couple of scripts that almost work, and I was wondering if anyone (Ben?) could tell me why...

First, I want to convert a list of tags in the IPA PAN's corpus format (subst:pl:dat:f) to Apertium's tag format (n.f.pl.dat). I have this:

#!/usr/bin/perl
use warnings;
use strict;

# tags to replace
my %terms = qw(n nt pri p1 sec p2 ter p3 subst n);

while (<>)
{
    my @in = split/:/;
    my @out = map { ($terms{$_} ne "") ? $terms{$_} : $_ } @in;
    if ($#out > 3) {
        my $type = $out[3];
        $out[3] = $out[2];
        $out[2] = $out[1];
        $out[1] = $type;
    }
    print join '.', @out;
}

That's broken, because it only works for tag sets which have more than 4 entries, but changing the if to "($#out >= 3)" gives me this: ".sg.nomxxs.m3" from "xxs:sg:nom:m3". I also get a lot of warnings:

Use of uninitialized value in string ne at foo.pl line 11, <> line 1085.
Use of uninitialized value in string ne at foo.pl line 11, <> line 1086.

Next, I have a list of names extracted from a Polish morphology dictionary[1] that I'm trying to convert to a list of word stems and endings. I have this, which works (aside from a couple of errors):

#!/usr/bin/perl
use warnings;
use strict;
use String::Diff qw/diff_fully/;
use Data::Dumper;

#test();

while(<>)
{
    s/,\W+$//;
    my $endings = $_;
    my @a = split/, /;
    my $stem = find_stem(@a);
    $endings =~ s/$stem//g;
    print $stem;
    if ($endings =~ /?/) {print ":n.f:";}
    elsif ($endings =~ /owie/) {print ":n.m1:";}
    else {print ":n.??:";}
    print $endings . "\n";
}

sub test()
{
    my $test = "Adam, Adama, Adaemie, Adamowi, Adamem, Adamach, Adamami, Adamom";
    my @t = split/, /, $test;
    print find_stem(@t);
    print "\n";
}

sub find_stem()
{
    my @in = @_;
    my ($r, $l, $cur, $last);
    my $i=0;
    while ($i<($#in))
    {
        ($r, $l) = diff_fully($in[$i], $in[$i+1]);
        $cur = $r->[0]->[1];
        $last = $cur if (!$last);
        if ($cur ne $last) {
            ($r, $l) = diff_fully($last, $cur);
            $last = $r->[0]->[1];
        }
        $i++;
    }
    return $last;
}

but if I change the end of the while() to this:

    else {print ":n.??:";}
    my @ends = split/, /, $endings;
    sort(@ends);
    $endings = join(',', @ends);
    print $endings . "\n";

to sort the endings, it... doesn't. What am I missing?

[1] "Słownik alternatywny", under the GPL: http://www.kurnik.pl/slownik/odmiany/

[ Thread continues here (5 messages/16.04kB) ]
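Two likely culprits in the scripts above, sketched in Python rather than Perl and hedged accordingly. In the first script, the input line is never chomped, so the newline stays attached to the last field; after the swap it lands in the middle of the output, which would explain output like ".sg.nomxxs.m3". The warnings come from looking up tags that aren't in %terms, which yields undef (an `exists $terms{$_}` test avoids them). In the second script, Perl's sort returns a sorted list rather than sorting in place, so its result needs to be assigned back: `@ends = sort @ends;`. The Python below mirrors both fixes; using the longest common prefix as the stem is my own simplification, not the String::Diff approach above.

```python
import os

# Tag mapping copied from the Perl %terms hash
TERMS = {"n": "nt", "pri": "p1", "sec": "p2", "ter": "p3", "subst": "n"}


def convert_tag(tag):
    """'subst:pl:dat:f' -> 'n.f.pl.dat' (assumes field 4, if present, is gender)."""
    # strip first: a newline left on the last field glues output lines together
    fields = [TERMS.get(f, f) for f in tag.strip().split(":")]
    if len(fields) >= 4:
        # reorder to part-of-speech, gender, number, case
        fields[1], fields[2], fields[3] = fields[3], fields[1], fields[2]
    return ".".join(fields)


def stem_and_endings(line):
    """Split 'Adam, Adama, ...' into a shared stem and a sorted list of endings."""
    forms = [f for f in line.strip().rstrip(",").split(", ") if f]
    stem = os.path.commonprefix(forms)  # character-wise longest common prefix
    # sorted() returns a new list, the same trap as Perl's sort
    endings = sorted({form[len(stem):] for form in forms})
    return stem, endings
```

Note that sorted() orders by code point, so Polish letters such as ą sort after z; a locale-aware key would be needed for dictionary order.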

Please help me :(...

Lin, Hong [hlin at devry.edu]

Mon, 29 Oct 2007 18:09:11 -0500

Hi:

I have a weird problem, and I hope I can get some help from you.

I receive a "Forbidden / You don't have permission to access /~user/index.html on this server." error when I try to display my web page.

The OS is Fedora 7.
Apache is 2.2.6.

Permissions of all directories and files are set to rwxr-xr-x, from /home all the way down to the files inside public_html.

I have modified the httpd.conf file to make sure it looks for the /~user/public_html directory. I did not touch any other file or area.

If I "killall httpd" and run /usr/sbin/httpd, then the index.html under /~user/public_html displays. That means it seems to work for my purpose.

However, after I run "/etc/init.d/httpd restart", it displays "Stopping httpd [OK]" and "Starting httpd [OK]", but I am not able to see my index.html file under /~user/public_html. The system index.html (the test page) always works.

The weird thing is that after I take out all the # lines within the /etc/init.d/httpd file, I can use "/etc/init.d/httpd restart" to make it work.

However, when the machine reboots, it does not work again. I have tried putting "/etc/init.d/httpd restart" at the end of rc.local to force the web server to stop and start at boot. The server stops and then starts, but it does not display my web page.

I have also tried putting "/etc/init.d/httpd stop" and "/usr/sbin/httpd" at the end of rc.local. It does not display my web page either.

S85httpd is linked to .../init.d/httpd.

I have tried "/usr/sbin/apachectl start"; it does not work either manually or inside rc.local.

Here is the summary:

Manually running /usr/sbin/httpd worked (after I killed all the httpd processes).
Manually running /etc/init.d/httpd worked (only after I took out all the # lines, and I don't know why that matters).
apachectl never worked.
Booting the machine does not work.

Can you help me?

Thanks in advance.

Hong

[ Thread continues here (5 messages/11.51kB) ]

Copyright & Copyleft

Ramanathan Muthaiah [rus.cahimb at gmail.com]

Wed, 3 Oct 2007 00:05:16 +0530

Just wondering: why do all the GNU/Linux man pages refer only to copyright, without any reference or brief statement regarding "copyleft"?

Quickly browsing through "http://www.gnu.org/copyleft/copyleft.html", I understood that the concept of copyleft is incorporated by applying copyright and then adding terms of distribution.

To quote from the above URL,

"To copyleft a program, we first state that it is copyrighted; then we add distribution terms, which are a legal instrument that gives everyone the rights to use, modify, and redistribute the program's code or any program derived from it but only if the distribution terms are unchanged . . . "

Any thoughts?

/Ram

[ Thread continues here (3 messages/3.03kB) ]

Thoughts on "Mercurial"

Amit Kumar Saha [amitsaha.in at gmail.com]

Sun, 28 Oct 2007 12:09:51 +0530

Hi all!

I am working on an article titled "Distributed Version Control with Mercurial". Basically, I am a newbie to Mercurial, so the article will be the result of my learning, presented in a simple, as-I-did-it way.

1. I would like to hear thoughts on and experiences with Mercurial from those of you who have already used it.
2. If you have made the switch from CVS/SVN to Mercurial, why did you do it?
3. What automated tools do you use to convert CVS/SVN repos to Mercurial?

All comments and insights are welcome.

Thanks,

Amit

--

Amit Kumar Saha

*NetBeans Community Docs

Contribution Coordinator*

me blogs@ http://amitksaha.blogspot.com

URL:http://amitsaha.in.googlepages.com

Version control for /etc

Kapil Hari Paranjape [kapil at imsc.res.in]

Fri, 26 Oct 2007 08:53:02 +0530

Hello,

I was looking at version control mechanisms to handle /etc on the machines here. If people on TAG have used such systems, I would appreciate feedback.

CVS: seems to be the classic solution. Cons: People say it is old and unmaintained code which is "end-of-life".

Mercurial: One of the modern VC systems considered "notable" on Rick Moen's knowledge base. One difficulty with "hg" is that it insists on the "distributed" model. Putting the version control history outside /etc (à la CVS) would require convoluted mounts.

GIT: Another modern VC system (though not "notable" as per Rick's kb). It is rather similar to Mercurial in many ways. One difference is that one can use the environment variable GIT_DIR to point to a different directory for storing VC history.

Some reasons to keep VC history outside /etc:

1. This way one can easily check for "cruft" without adding an explicit "ignore" for ".hg" or ".git" ...
2. Uses less space in "/etc".
3. Can keep the history on an "archival" disk safe from potential corruption.

Any thoughts/suggestions by people on TAG are welcome as usual!

Regards,

Kapil.

--

[ Thread continues here (15 messages/30.26kB) ]
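Kapil's GIT_DIR approach can be sketched in Python as below. It assumes a git recent enough to honour GIT_WORK_TREE alongside GIT_DIR, and the history location /var/lib/etc-history.git is purely hypothetical; any root-only directory will do, since /etc contains files such as /etc/shadow.

```python
import os
import subprocess

HISTORY = "/var/lib/etc-history.git"  # hypothetical location outside /etc


def etc_git_env(history_dir=HISTORY, work_tree="/etc"):
    """Environment that keeps git's repository data outside the tracked tree."""
    env = dict(os.environ)
    env["GIT_DIR"] = history_dir      # where the history is stored
    env["GIT_WORK_TREE"] = work_tree  # the files being tracked
    return env


def git(*args, history_dir=HISTORY, work_tree="/etc"):
    """Run one git command against work_tree with the history kept elsewhere."""
    subprocess.run(["git", *args], env=etc_git_env(history_dir, work_tree),
                   cwd=work_tree, check=True)


def snapshot(message, **where):
    """Record the current state of the tree, including added and deleted files."""
    git("add", "-A", **where)
    # git commit exits non-zero when nothing changed; check=True turns that
    # into a CalledProcessError
    git("commit", "-q", "-m", message, **where)
```

A one-time `git("init", "-q")` creates the repository in the history directory; after that, something like `snapshot("after installing postfix")` records each change.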

Question on how to block a ssh host from being used as a Socks proxy

Suramya Tomar [security at suramya.com]

Fri, 19 Oct 2007 04:21:16 +0530

Hey Everyone,

I have been using a SOCKS proxy via SSH (using port tunneling [1]) to browse the net from insecure locations, and it works great.

However, I have noticed that when I connect to certain hosts, I am unable to use the connection as a SOCKS proxy, and I was wondering how these hosts were configured to do this. It seems like a good feature to have on servers that I configure. Are there any disadvantages to this setup that I am missing?

I have tried looking for a solution online, but I guess I am not asking the right questions, because I didn't find anything useful. So, any ideas/suggestions on what/where to look?

Thanks in advance.

- Suramya

[1] To set up a SOCKS proxy using SSH from a Windows system, follow these steps:

Open PuTTY. You should be greeted with a configuration screen. First, enter the hostname or IP address of the SSH server. Type in a name for your connection settings in the box below "Saved Sessions", and click the Save button.

Now look at the tree of options on the left; expand the SSH tree, and select "Tunnels". Enter 4567 (or any port number above 1024) in the Source Port area, and click the Dynamic radio button to select it. Leave the Destination field blank, and click "Add".

Now go back to the Session tree (very top of the left section), save again, and click Open to connect. You will be prompted to enter a username, which is the username of your shell account. Type that in, hit Enter, and then type in your password when prompted.

In your browser, change the SOCKS proxy setting to localhost and the port you used earlier, and you can browse the net safely.

--

Name : Suramya Tomar

Homepage URL: http://www.suramya.com

[ Thread continues here (6 messages/9.88kB) ]

Apertium: an open source machine translation system

Jimmy ORegan [joregan at gmail.com]

Mon, 8 Oct 2007 17:05:38 +0100

On 30/09/2007, Jimmy O'Regan <joregan at gmail.com> wrote:
> Apertium (http://xixona.dlsi.ua.es/apertium-www/) is an open source
> machine translation system.
>
<snip>
> Polish is still[1] my main language of interest, and I'm working on a
> configuration for the morphological analyzer.

The start of my Polish-English module was added to SVN today (http://apertium.svn.sourceforge.net/viewvc/apertium/apertium-en-pl/) and can be tested here: http://xixona.dlsi.ua.es/testing/index.php

It's nothing more than a tiny inflecting dictionary at the moment, but I'm working on it.

Talkback: Discuss this article with The Answer Gang

Published in Issue 144 of Linux Gazette, November 2007

Talkback

Talkback:143/lg_mail.html

[ In reference to "Mailbag" in LG#143 ]

Etienne Lorrain [etienne_lorrain at yahoo.fr]

Wed, 3 Oct 2007 15:13:03 +0200 (CEST)

Clock problem

>Hi, my name is Jimmy. Can anyone help me fix the time on my PC? I
>have changed the battery so many times, I mean the new CMOS battery, but my
>time is still not read correctly.

Check the motherboard jumper that resets the CMOS configuration, and your motherboard documentation: this jumper should not be left in the "reset" position while the computer is operating...

Just a guess.

Talkback:143/sephton.html

[ In reference to "A Question Of Rounding" in LG#143 ]

Mauro Orlandini - IASF/Bologna [orlandini at iasfbo.inaf.it]

Tue, 2 Oct 2007 09:53:23 +0200 (CEST)

Talking about rounding, I almost went crazy trying to find out why a Perl program I was writing did not give the right result. Here is the code:

#!/usr/bin/perl
#
$f = 4.95;
$F = (4.8+5.1)/2;
$F_spr = sprintf("%.10f", (4.8+5.1)/2);
printf " f: %32.30f\n", $f;
printf " F: %32.30f\n", $F;
printf " F_spr: %32.30f\n", $F_spr;
if ($f == $F) { print "\n f equals F!\n\n"; }

The test fails even though $f and $F appear to be the same! I solved it by using $F_spr instead of $F, but it took me a day to find that out... 8-(

Ciao, Mauro

--

_^_ _^_

( _ )------------------------------------------------------------------( _ )

| / | Mauro Orlandini Email: orlandini at iasfbo.inaf.it | \ |

| / | INAF/IASF Bologna Voice: +39-051-639-8667 | \ |

| / | Via Gobetti 101 Fax: +39-051-639-8723 | \ |

| / | 40129 Bologna - Italy WWW: http://www.iasfbo.inaf.it/~mauro/ | \ |

| / |--------------------------------------------------------------------| \ |

| / | Today's quote: | \ |

[ Thread continues here (6 messages/16.09kB) ]
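Mauro's surprise is easy to reproduce in Python, which uses the same IEEE 754 doubles that Perl normally does (an assumption about his Perl build, though it is the usual case). The sum 4.8 + 5.1 is rounded to the nearest double before it is halved, so F ends up one unit in the last place below the double nearest 4.95. Comparing with a tolerance, or rounding to a fixed number of decimals first (his sprintf trick), sidesteps that.

```python
import math

f = 4.95
F = (4.8 + 5.1) / 2          # the sum is rounded to a double before halving
F_spr = float(f"{F:.10f}")   # Python analogue of sprintf("%.10f", ...)

print(f"     f: {f:32.30f}")
print(f"     F: {F:32.30f}")
print(f" F_spr: {F_spr:32.30f}")

print(f == F)                            # False: F is one ulp below f
print(f == F_spr)                        # True: "4.9500000000" parses back to f
print(math.isclose(f, F, rel_tol=1e-9))  # True: compare with a tolerance instead
```

Of the two workarounds, a tolerance-based comparison states the intent more directly than a round trip through a formatted string.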

Talkback: 143/anonymous.html

[ In reference to "Linux Console Scrollback" in LG#143 ]

Andre Ferreira [andre.ferreira at safebootbrasil.com.br]

Thu, 18 Oct 2007 10:20:23 -0300

Hello,

I have a suggestion for the article about the Linux Console Scrollback that appears in Linux Gazette #143 (October 2007).

The author says that the console scrollback function is not enough to see the kernel messages, and also that the message log doesn't have everything.

My suggestion is to mention the "kernel ring buffer", which can be accessed and manipulated using the command "dmesg".

Of course, changing the size of the console's scrollback buffer has uses other than seeing the output of the kernel boot, but since the author gives this as an example, I think it is useful to talk about the kernel ring buffer.

Best regards,

Andre
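Andre's suggestion in practice: dmesg prints the kernel ring buffer, and when the kernel was built with printk timestamps, each line starts with the seconds since boot. A minimal Python sketch for filtering those messages follows; the regular expression assumes the "[   12.345678] message" layout, and `kernel_messages` is a hypothetical helper that needs permission to run dmesg.

```python
import re
import subprocess

# With printk timestamps enabled, lines look like "[   12.345678] usb 1-1: ..."
LINE = re.compile(r"^\[\s*(\d+\.\d+)\]\s?(.*)$")


def parse_dmesg(text):
    """Yield (seconds_since_boot or None, message) for each dmesg line."""
    for line in text.splitlines():
        m = LINE.match(line)
        if m:
            yield float(m.group(1)), m.group(2)
        elif line:
            yield None, line  # kernel built without printk timestamps


def kernel_messages(pattern):
    """Return ring-buffer messages whose text matches a regular expression."""
    out = subprocess.run(["dmesg"], capture_output=True, text=True, check=True).stdout
    rx = re.compile(pattern)
    return [(t, msg) for t, msg in parse_dmesg(out) if rx.search(msg)]
```

For example, `kernel_messages(r"usb|ata")` would pull out the USB and ATA messages from the boot sequence without scrolling back through the console.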

Talkback: issue45/lg_tips45.html

[ In reference to "/lg_tips45.html" in LG#issue45 ]

Gary Dale [garydale at torfree.net]

Wed, 17 Oct 2007 19:33:16 -0400

While the coverage of this topic was generally thorough, there was one important point that was missed. If you want to use tar to directly split archives, you cannot use compression. If you want a compressed archive, you must compress it first, then use split to break it into appropriate chunks.

However, if you do things this way, you must be aware that every chunk must be good for you to be able to rejoin them later. For example, if you were to split an archive into 1G segments to back up to a UDF filesystem (until recent kernels, there was a 1G limit on UDF files) such as a DVD-RAM, you must verify the copies to ensure you will be able to restore the original archive later. If one of the segments develops a bad sector, you may lose the entire archive.
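A hedged sketch of the verification step Gary describes: split a compressed archive into fixed-size pieces while recording a SHA-256 checksum for each, so every piece can be re-checked after it has been copied to DVD-RAM (or anywhere else). This is a Python illustration, not a replacement for split(1); the chunk naming (.000, .001, ...) and the function names are my own assumptions. A checksum only tells you that a piece is bad; it can't repair it.

```python
import hashlib
import shutil
from pathlib import Path

BLOCK = 1 << 20  # copy in 1 MiB pieces so a 1 GB chunk never sits in memory


def split_with_checksums(src, dest_dir, chunk_size):
    """Split src into numbered chunks; return {chunk_name: sha256_hex}."""
    src, dest_dir = Path(src), Path(dest_dir)
    dest_dir.mkdir(parents=True, exist_ok=True)
    sums = {}
    with src.open("rb") as inp:
        index = 0
        while True:
            name = f"{src.name}.{index:03d}"
            digest, written = hashlib.sha256(), 0
            with (dest_dir / name).open("wb") as out:
                while written < chunk_size:
                    block = inp.read(min(BLOCK, chunk_size - written))
                    if not block:
                        break
                    out.write(block)
                    digest.update(block)
                    written += len(block)
            if written == 0:
                (dest_dir / name).unlink()  # input exhausted: drop the empty file
                break
            sums[name] = digest.hexdigest()
            index += 1
    return sums


def verify_chunks(dest_dir, sums):
    """Return the names of chunks whose current contents no longer match."""
    bad = []
    for name, expected in sums.items():
        digest = hashlib.sha256()
        with (Path(dest_dir) / name).open("rb") as f:
            for block in iter(lambda: f.read(BLOCK), b""):
                digest.update(block)
        if digest.hexdigest() != expected:
            bad.append(name)
    return bad


def join_chunks(dest_dir, sums, target):
    """Concatenate the chunks, in order, back into a single file."""
    with Path(target).open("wb") as out:
        for name in sorted(sums):
            with (Path(dest_dir) / name).open("rb") as part:
                shutil.copyfileobj(part, out)
```

The checksum dictionary would need to be saved somewhere other than the backup media itself (for example, as a small text file kept on disk) so that the copies can be verified later.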

Talkback: 135/misc/lg/diagnosing_sata_problems.html

[ In reference to "Linux Console Scrollback" in LG#135 ]

Neil Youngman [ny at youngman.org.uk]

Thu, 25 Oct 2007 22:02:09 +0100

On Thursday 25 October 2007 20:18, you wrote:
> Hi!
> I read your post in
> http://linuxgazette.net/135/misc/lg/diagnosing_sata_problems.html
> Did you get your SATA drive working?
> I've got these kinds of problems:
> http://ubuntuforums.org/showthread.php?t=591288
> Can you give any advice?
>
> -Juho from Finland

I solved my problems by replacing the SATA card. I'm afraid I can't offer much advice. If it was working before you upgraded and you haven't touched the hardware, then it sounds like software. I'd try a few different live CDs (Knoppix, Mint, etc.), see which ones work, and look at whether there are obvious differences, such as modules loaded. That might offer some clues.

I've CCed the Answer Gang to see if they can offer any more useful advice.

Neil

Talkback: 108/bilbrey.html

[ In reference to "Using a Non-Default GUI (in RHEL and kin)" in LG#108 ]

Brian Bilbrey [bilbrey at orbdesigns.com]

Wed, 31 Oct 2007 18:29:19 -0400

On Wed, Oct 31, 2007 at 12:15:46PM -0700, nishith datta wrote:
> hi brian,
> Sorry if I am bothering you with this email.
> This is in connection with your article about window managers in Linux Gazette. It
> was very informative and good. I was struggling with installing fluxbox on
> RHEL 4.0, and I finally could do it with the help of your article.
> It's just that I have not understood the purpose of the following
> files: /etc/X11/gdm/Sessions/fluxbox and
> /etc/X11/dm/Sessions/fluxbox.desktop.
>
> Both have an exec command, and I have entered the fluxbox binary only in the
> fluxbox.desktop file. It is working fine. What is the fluxbox file in the
> gdm/Sessions dir for?
> My files look like this:
> /etc/X11/gdm/Sessions/fluxbox file
> #!/bin/bash
> exec /etc/X11/xdm/Xsession FluxBox
>
> /etc/X11/dm/Sessions/fluxbox.desktop file
> [Desktop Entry]
> Encoding=UTF-8
> Name=FluxBox
> Comment=This session logs you into fluxbox
> Exec=/opt/fluxbox-1.0.0/src/fluxbox
> Icon=
> Type=Application
> I hate to bother you. I hope it is alright and that you will help me out in
> understanding things better.

No problem, Nishith. You're referring to this article, I think:

http://linuxgazette.net/108/bilbrey.html

I would hazard a guess that /etc/X11/dm is the default display manager directory, where by default I mean "the place where Red Hat expects to find display manager session stuff". Then there are directories for the assorted actual display managers: xdm, kdm, gdm. Those are for stock X, KDE, and GNOME, respectively. But in each of those login manager screens, you can select which window manager you want to use for that (and optionally future) X sessions. So in each display manager configuration directory, there are session files designed to work with that particular display manager, for each installed window manager. So, for instance, if you installed RHEL4 in kitchen sink mode, with both KDE and GNOME goo, then you're likely to find these:

/etc/X11/
    dm
    xdm
    gdm
    kdm

Then, when you install a non-stock RPM of, say, fluxbox, you'll likely find separate files for that window manager to define a session under each of those DMs. That they're different (which seems to be your confusion) is a function of the fact that each is a configuration file for a different display manager.

But all of the others eventually refer back to files in /etc/X11/dm/Sessions for the setup and startup of the window manager. On one Red Hat box I can touch, I find /etc/X11/gdm/Sessions/GNOME. That invokes /etc/X11/xdm/Xsession with an argument of "gnome". Looking at Xsession, I see that ... it leads elsewhere entirely: /usr/share/switchdesk.

[ ... ]

[ Thread continues here (1 message/4.29kB) ]

Talkback: 141/misc/lg/backup_software_strategies.html

[ In reference to "Linux Console Scrollback" in LG#141 ]

Kapil Hari Paranjape [kapil at imsc.res.in]

Mon, 22 Oct 2007 11:05:33 +0530

Hello,

There was a discussion a while ago about Backup Strategies. The enclosed URL points to some pre-strategic thoughts on Backup. I have not yet used this thinking to formulate a proper Backup plan that is consistent with it.

http://www.imsc.res.in/IMScWiki/WhatIsBackup

I have not yet submitted it as an article for LG, since the actual strategy is missing --- what good is theory without a practical formula?!

Regards,

Kapil.

--

"A Question Of Rounding" in issue #143

Paul Sephton [paul at inet.co.za]

Sat, 06 Oct 2007 19:53:57 +0200

Hello, all

In order to clarify a few points, set some issues to rest, and update the listing of the source code that appeared in "A Question Of Rounding", the following may be of interest.

First of all, I have to state unequivocally that there is no bug. There never was a bug. The printf() family of functions completely follows the letter of both the C99 and IEEE specifications (at least for GLibC). The matter has been completely explored and put to rest.

The issues brought to light in the article are, however, very pertinent to those of us who make a living producing code. The listing below addresses some of the real-life issues some of us face when having to deal with floating-point arithmetic.

Please refer to the discussion which follows for an explanation as to why printf() behaves the way it does, and what this code does to address some of the problems I highlighted in my article. The discussion is also a summary of some of the many points raised in the bug report discussion, and of the information provided to me during that process by a few very smart people.

[ ... ]

[ Thread continues here (1 message/11.46kB) ]
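Paul's no-bug conclusion can be illustrated with an example of my own (the value 2.675 does not come from his article): most decimal fractions have no exact binary representation, and printf-style formatting correctly rounds the value that is actually stored, not the decimal literal that was typed. Python's decimal module can display that stored value exactly.

```python
from decimal import Decimal

x = 2.675
# Decimal(float) converts the stored binary double exactly, digit for digit
print(Decimal(x))   # 2.67499999999999982236431605997495353221893310546875
# the stored value is just below 2.675, so correct rounding gives 2.67
print(f"{x:.2f}")   # 2.67
print("%.2f" % x)   # 2.67 (C-style formatting, same result)
```

In other words, the "wrong" digit comes from the conversion of the literal to binary, and the formatting step is doing exactly what the standards require.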


NewsBytes

By Howard Dyckoff

News in General

Ubuntu 7.10 "Gutsy Gibbon" Released

As promised, Ubuntu 7.10 server and desktop were released on October 18. They offer new server and desktop features, including better hardware and driver support, especially for printers and wireless devices.

Mark Shuttleworth, CEO of Canonical, noted that "...this is the 7th release of Ubuntu and the thing we are most proud of... we were within a day of schedule on all releases." Shuttleworth added, "We are delivering what we consider to be an enterprise-class operating system, and we expect these will be used immediately in production environments."

Among the innovations in this edition is "Tracker", a hard drive indexing system with the ability to search the entire desktop. "I believe we are the first distro to deliver this capability," Shuttleworth said.

The server package adds more standardized configuration templates to speed deployments and aid rollout scalability. Configurations are included for Web servers, databases, LAMP applications, file and print servers, etc.

This is a non-LTS release of Ubuntu. The next release, targeted for April 2008, will be a Long-Term-Support (LTS) release for those on support contracts. LTS releases are planned for annual or biannual release.

This latest Ubuntu version also includes the following new features:

-

Enhanced security

AppArmor provides significantly enhanced security which protects the

server from intrusion. Common server applications such as Apache and

Postfix are protected with default policies. Administrators can easily

lock down programs and resources and add policies for their specific

requirements.

-

Virtualized kernel -

A tailored kernel optimized for use in virtualized environments. This

smaller and simpler kernel is particularly suited for virtual

appliances. This new kernel includes many improvements for

paravirtualization, one of which is VMI (Virtual Machine Interface), a

new independent way for hypervisors to relate to the kernel.

-

Tickless kernel -

A new "tickless" idle mode results in reduced power consumption/heat

emission. This feature results in significant energy and cost savings on

machines running several virtualized instances.

-

Messaging -

Deployments of large mail servers benefit from significant improvements

to Postfix's performance. The new stable release of Postfix takes

advantage of the kernel's epoll functionality to reduce load and improve

performance.

-

Printing -

Improved interoperability with CUPS now supporting automatic discovery

and authentication for many more protocols, including LDAP with SSL,

Kerberos, Mac OS X Bonjour, and Zeroconf. The desktop version also has

improved plug-and-play configuration for printers.

-

Windows compatibility -

Users of the desktop version on a dual-boot system can read from and write to files located on a Windows partition (including NTFS).

-

Programming -

A new release of Python provides a faster, more reliable interpreter. Additionally, gcc 4.2 now supports OpenMP, which gives shared-memory programmers a simple and flexible interface for developing parallel applications.

-

LDAP authentication -

Ubuntu Server is now fully supported as an LDAP authentication client.

-

"Landscape" management -

Commercially supported users of Ubuntu Server can take full advantage of

this Web-based management tool for managing large deployments.

Ubuntu Links

Desktop:

http://www.ubuntu.com/news/ubuntu-desktop710

Laptop, thin client, and server:

http://www.ubuntu.com/news/ubuntu-server710

To coincide with the Ubuntu 7.10 launch, Canonical Ltd. announced updates

to Edubuntu, Kubuntu, and Xubuntu derivatives, including advanced thin

client capabilities and a KDE 4 beta tech preview. Additional information

is available at

http://www.ubuntu.com/news/ubuntu-family710.

OpenAjax Alliance Promotes InteropFest, Security for Mashups

At the September AjaxWorld conference, the OpenAjax Alliance, which is

dedicated to open and interoperable Ajax-based Web technologies, revealed new

initiatives for secure mashups and mobile AJAX.

AJAX is the technology behind the increasingly popular "mashup", a Web site or

application that combines content from more than one source into an integrated

experience. As AJAX and mashups continue to gain widespread acceptance under

the Web 2.0 umbrella, it is critical for organizations to understand these

technologies and to avoid possible problems by adhering to best practices.

The alliance prepared a new white paper titled "AJAX and Mashup Security",

itemizing the ways in which AJAX applications could be attacked, and providing

a set of best practice techniques to address each vulnerability. Available at

www.openajax.org, the white paper

represents the collaborative efforts of AJAX security experts, and was a joint

effort with the Marketing Working Group.

"ICEsoft has long recognized that security for enterprise-class applications

is a critical requirement," said Robert Lepack, VP of Marketing for ICEsoft

Technologies. "We view the publication of the Open Ajax white paper 'AJAX and

Mashup Security' to be an important step in the ongoing need to both educate

customers on the potential security risks of AJAX applications, and the best

practices described in the paper to be a key step toward developing much

needed standards."

In addition to a strong focus on security, the OpenAjax Alliance held

InteropFest 1.0, which is the final integration testing phase of OpenAjax Hub

1.0. OpenAjax Hub is a small JavaScript library that allows multiple AJAX

toolkits to work together on the same page. The central feature is a

publish/subscribe event manager, which enables loose assembly and integration

of AJAX components. OpenAjax Alliance will deliver both an open specification

and a reference open source implementation. Standards are the key to

interoperability, and allow the true possibilities of AJAX and Web 2.0 to be

realized.

"To further advance the AJAX ecosystem, OpenAjax Alliance members together are

developing a standard way to describe AJAX controls and their programmatic

interfaces so that it becomes easier for developers to use AJAX libraries with

development tools," said Kevin Hakman, director, TIBCO Software Inc. and Chair

of the Alliance IDE Working Group. "We're on pace to have an AJAX control

description specification ready for early 2008."

With OpenAjax Hub 1.0 being finalized, the alliance has begun work on OpenAjax Hub 1.1, which will add support for secure mashups and enable mediated Comet-style client-server messaging. As with OpenAjax Hub 1.0, the alliance will deliver both a specification and a commercial-quality open source reference implementation. The secure mashup features of OpenAjax Hub 1.1 will isolate mashup components in secure "sandboxes" and use the OpenAjax Hub's publish/subscribe features to achieve mediated cross-component messaging.

As part of InteropFest 1.0, interoperability certificates were awarded to

the following member organizations for their participation: 24SevenOffice,

Apache XAP, Dojo Foundation, ILOG, Getahead, IT Mill, Lightstreamer,

Microsoft, Nexaweb, Open Link, Open Spot, Software AG, and Tibco. The

interoperability event required integration of an organization's AJAX toolkit

with the OpenAjax Hub and at least one other AJAX component, where

cross-component messaging is accomplished using the OpenAjax Hub.

Interestingly, Microsoft's ASP.NET AJAX, formerly called Atlas, passed the

interoperability tests:

http://www.internetnews.com/dev-news/article.php/3701966

About 90 companies have joined the OpenAjax Alliance since it was launched in early 2006. The mashup security white paper is available at

http://www.openajax.org/whitepapers/Introducing%20Ajax%20and%20OpenAjax.html

Sun Opens More Open Source

Sun held a developer summit of sorts on October 15th at its Santa Clara campus for many of the Open Source projects it supports. Besides a report on Java and the OpenJDK project, Sun engineers who are principals in the PostgreSQL project and in Sun's GlassFish application server project also reported on progress.

Mark Reinhold, a member of the OpenJDK project's governing board, confirmed that

more of Java is now open source, with only about 4% still requiring what he

called "binary plugs" for sound and image drivers. He explained that Sun

still does not have the rights to make that code available, but is pushing the

code owners to release the necessary items or face a "name and shame" initiative

from Sun and other contributors to the OpenJDK.

The OpenJDK interim governance board was formed at JavaOne last spring, and had met by teleconference twice and face-to-face only once before the Sun summit. According to Reinhold, the plan is to have a draft constitution written by this December and ratified by spring 2008. While this independent infrastructure is being built up, Sun is providing leadership and support. Reinhold said that Sun would act mostly as "a benevolent dictator" until OpenJDK could run on its own.

Version 2 of the GlassFish application server, based on Java EE 5, was released in mid-September. It features substantial performance improvements, especially in clustering, and is billed as enterprise-ready. Sun will develop this GlassFish release as a product for its customers.

GlassFish Version 3 is already under development, and will be a major change in

project architecture. It will become fully modular, based on a

100kB kernel, and will also feature multiple class-loaders. The

micro-kernel and JVM will have extensions to efficiently run major scripting

languages, such as PHP and Ruby.

Josh Berkus, both a long-time Sun engineer and a long-time contributor to the PostgreSQL project, discussed the coming version of PostgreSQL as well as its history, going back to the 1980s at UC Berkeley.

There are an estimated 15 million "embedded" users of Postgres and over 200

active developers. Postgres is now distributed with Solaris &

OpenSolaris.

The presentations concluded with an Open Source Worldwide Panel led by Ian

Murdock and attended by developers from Europe, India, and Latin America,

including Suvendu Ray and Bruno Souza, among others. One issue discussed

was the twin problem of localization and de-localization: not only

efficient translation of language, but also mechanisms for sharing back the work

of developers collaborating in their local languages. A video of the panel is available from Podtech here: Open Source Worldwide Panel.

On October 22-23, in parallel with Interop NY, Sun will host the Start-up Camp event in New York City. The goal of Start-up Camp is to bring entrepreneurs and vendors together to network, share advice, and learn from the experiences of other startups. The event will remain face-to-face and collaborative, with the agenda determined during the event itself. Start-up University will kick off Start-up Camp and give attendees an opportunity to learn about various topics in an educational format.

Gphone due in November

The long-rumored Google answer to the Apple iPhone will make its debut in early

November, probably on November 5th, according to blogs and Google watch

sites. It will be a data-driven, Linux mobile device hosting Google

desktop gadgets and Google Web apps.

Unlike Apple, which tried to restrict developer access to its proprietary iPhone

platform, Google has been building developer communities for its varied

applications, and will probably leverage that support to enhance the new

platform. The Google Gears framework allows developers to create widgets

and gadgets that keep working even when only intermittently connected. And Google already has a

partner relationship with Samsung for putting Gmail and Google Search on 3G

phones. Google also recently purchased the Finnish social-networking company

Jaiku, which helps "friends" find each other via their mobile devices.

Perhaps this was the missing piece to Google's vision.

What is uncertain is whether Google will partner with the wireless carriers or

compete with them. And will it partner with one or more music services?

Also to be determined is whether there will be an ad-supported version of the

messaging and voice services. Maybe 2008 will be the year of free Mobile

Linux.

http://blogs.zdnet.com/Berlind/?p=842

http://www.jessestay.com/articles/2007/10/12/could-google-launch-the-gphone-november-5/

http://googlewatch.eweek.com/content/google_products/for_google_phone_rumors_press_1_for_more_google_phone_rumors_press_2.html

VMworld 2007 Winners Announced

The Best of VMworld Awards, presented by SearchServerVirtualization.com,

recognize the best products shown during VMworld 2007. The overall Best of Show

award was given to IBM and VMware, jointly, for the recently announced IBM

System x 3950 M2 with VMware's new embedded hypervisor, ESX Server 3i.

In this first year of the Best of VMworld awards, judges awarded a Gold

award and Finalist awards in each of the six award categories. Go here for

complete details:

http://searchservervirtualization.techtarget.com/originalContent/0,289142,sid94_gci1272002,00.html

The judges also awarded a New Technology award to Onaro, Inc. for VM Insight,

which monitors and manages both physical and virtual machines; to Marathon

Technologies Corp. for its everRun FT for XenEnterprise; and to InovaWave, Inc.

for the InovaWave VirtualOctane for ESX Server. In addition, a Green Storage award went to AMD for its quad-core processor technology.

Events

November

NY Technology Forum

November 1-2, New York, NY

http://www.govtech.com/events/silo.php?id=121943

CSI 2007

November 3-9, Washington, D.C., at the Hyatt Regency Crystal City

http://www.csiannual.com/

Interop Berlin, Nov 6-8, 2007

http://www.interop.eu/

SOA Executive Forum 2007

November 7-8, Millennium Broadway Hotel, New York City.

https://ssl.infoworld.com/servlet/soa/soa_reg.jsp

QCon San Francisco conference

Nov 5-9, 2007, Westin Market Street

Discount code: devtownstation_qconsf2007

http://www.elabs3.com/c.html?rtr=on&

Oracle OpenWorld San Francisco

November 11-15, 2007, San Francisco

http://www.oracle.com/openworld/

Supercomputing 2007

Nov 9-16, Tampa, FL

http://sc07.supercomputing.org

MemCon Tokyo

Nov. 13-14, 2007

Attendance is free for industry professionals

http://www.denali.com/memcon/registration2007TK.html

Certicom ECC Conference 2007

November 13-15, Four Seasons Hotel, Toronto

(focused on the use of Suite B crypto algorithms in the enterprise)

http://www.certicom.com/index.php?action=events,ecc2007_intro

Mobile Internet World

November 13-15, 2007, Hynes Center, Boston

http://www.mobilenetx.com/

IT Governance Compliance Conference

November 14-16, 2007, Boston, Mass.

Large Installation System Administrators (LISA) Conference

November 14-15, 2007, Dallas, Texas, USA

http://www.usenix.org/events/lisa07/

Gartner Identity & Access Management Summit

14-16 November 2007, Los Angeles, CA

http://www.gartner.com/it/page.jsp?id=502298&tab=overview

Gartner 26th Annual Data Center Conference

November 27-30, Las Vegas, NV

http://www.gartner.com/it/summits/lsc26/index.jsp

December

Agile Development Practices Conference

December 3-6, 2007, Shingle Creek Resort, Orlando, FL

http://www.sqe.com/agiledevpractices/Schedule/Default.aspx

Gartner Enterprise Architect Summit

December 5-7, Las Vegas, NV

http://www.gartner.com/it/page.jsp?id=506878&tab=overview

January

SPIE Photonics West 2008

January 19-24, San Jose, CA

http://spie.org/photonics-west.xml

MacWorld Conference and Expo

January 14-18, San Francisco

www.macworldexpo.com/

Distros

PostgreSQL beta 1 for version 8.3

A new version of the venerable Postgres database went into beta in October.

Among the features in the new version are:

-

Greatly improved performance and consistency, through HOT, Load Distributed

Checkpoint, JIT bgwriter, Asynchronous Commit, and other features.

-

TSearch2 full text search integrated into the core code with improved syntax

and ease of adding custom dictionaries.

-

SQL/XML syntax.

-

Logging to database-loadable CSV files.

-

Automated rebuilding of cached plans.

-

ENUMs, UUIDs, and arrays of complex types.

-

GSSAPI and SSPI authentication support.

... and many others.

See the release notes here for a more complete list of new features.

In September, EnterpriseDB announced a new version of its EnterpriseDB Postgres,

the professional-grade distribution of the open source PostgreSQL database. The

new version of EnterpriseDB Postgres is based on Postgres 8.2.5.

The latest release includes a MySQL-to-PostgreSQL Migration Toolkit and a

Procedural Language Debugger. The Migration Toolkit, previously available as a

proprietary component of EnterpriseDB Advanced Server, the company's flagship

commercial database offering, has been re-released under the Artistic License,

an OSI-approved open source license.

OpenOffice.org 2.3 Released

The new OpenOffice includes new features in addition to improvements to

stability, performance, Microsoft Office compatibility, and accessibility. Some

new features are available as free extensions that increase OpenOffice.org

functionality.

New Chart: OpenOffice.org 2.3 introduces an all-new chart module with a new chart wizard for complex charts. It adds several new chart types and options, for example:

-

Regression curves

-

3D Exploded Pie

-

3D Donut

-

3D smooth lines and several others

Data source handling is also improved: the data ranges for column and row labels can be specified separately from the data range for the chart values, and different x-values can be selected for different series. Automatic logarithmic scaling has been enhanced. Finally, there are performance improvements and better import and export of Microsoft Office charts.

OpenOffice.org 2.3 is available for download here.

New Feature: OpenOffice.org Extensions are independent add-ons to

enhance OpenOffice.org functionality. Sample extensions are:

Sun Report Builder:

Now available for OpenOffice.org 2.3 is the Sun Report Builder for creating stylish and smart database reports. The flexible report editor can define group and page headers as well as group and page footers, and even calculated fields are available for building complex database reports.

The Sun Report Builder uses the Pentaho Reporting Flow Engine of Pentaho BI. This extension is available for free on the Sun Report Builder page.

Send and receive faxes with the OpenOffice.org eFax Extension:

The eFax extension for OpenOffice.org connects to an online fax service that eliminates the need for a fax machine. You can continue to use your existing fax number or get a new number for free. When someone sends a fax to your selected number, it is displayed in the eFax Messenger application on your computer, or converted to a file that is emailed to you as an attachment.

eFax is a service of J2global; for more information see the eFax web page. This extension is available for free on the OpenOffice.org eFax Extension page.

Mandriva Linux 2008.0 Now Out

Mandriva Linux 2008.0, the 21st release of Mandriva Linux, came out in October in three editions: "One", "Powerpack", and "Free". The "One" and "Free" editions can be downloaded free of charge from official Mandriva mirrors and via BitTorrent (visit this page for downloads).

"Powerpack" is a commercial edition available for purchase from the Mandriva Store. For information on the differences between the editions, visit Mandriva's Choosing the right edition page.

A recent development snapshot of KDE 4 is available as a preview in Mandriva Linux 2008, and NTFS write support is also included. The Mandriva Linux 2008 Errata page lists known issues with the release and how to fix or work around them.

KDE 4 Beta 3 is out, Fedora 8 coming soon

This late-stage beta was available in mid-Oct. and also marks a freeze

of the KDE Development Platform. New educational features and

enhancements are included with further polishing of the KDE codebase.

The aim of the 4.0 release is to put foundations in place for future

innovations.

However, KDE 4.0 will probably not be ready in time for the release of Fedora 8 in early November. The October Fedora 8 beta included a completely free and open source Java environment called IcedTea, which is derived from the OpenJDK project.

Elpicx 1.1 helps prep for LPI exam

The elpicx 1.1 live DVD was released

in mid-October. Elpicx is a Knoppix and CentOS-based Linux system that

helps students prepare for the Linux Professional Institute (LPI)

certification exam by providing test emulators and a number of LPI

reference cards, study notes, preparation guides, and exam exercises.

Version 1.1 is based on Knoppix 5.1.1 and CentOS 4.3. Visit the project's

home page to read the

complete release announcement.

MEPIS 7 and its Anti-X due by November

MEPIS 7 RC Beta 5 was released in late September, and another RC is expected around November 10. After that, MEPIS 7 should be on its way to a final release at the end of November or in early December.

The 7.0 RC 1 release of "antiX", a lightweight derivative of MEPIS, was out in

late September. AntiX is built and maintained by a MEPIS community member as a

free version of MEPIS for very old 32-bit PC hardware. The antiX Web site is at

antix.mepis.org, and an antiX forum is hosted at

www.mepislovers.org.

AntiX 7.0 RC 1 is essentially AntiX 7.0 Beta 2, with its look and packages updated to be in sync with MEPIS 7.0 Beta 4. There are also fixes for reported bugs that are unique to AntiX.

AntiX is designed to work on computers with as little as 64MB of RAM and

Pentium II or equivalent AMD processors. ISO images and deltas are available

in the "testing" subdirectory at the MEPIS Subscriber's Site and at the MEPIS

public mirrors.

Products

ASUS Launches Low Cost Eee PC

ASUSTeK will offer a new line of low-cost laptop PCs to consumers for as little as $250 by year end. These laptops are targeted at non-power-users in markets that are nearing saturation. They will function as personal rather than business computers, and may be adopted by teens and tweeners.

Called Eee PCs, the new line of notebooks will initially be available in English and traditional Chinese versions, the latter for sale in Taiwan. Other languages may be supported in 2009. Prototypes were shown at Intel IDF in San Francisco.

These low-cost PCs will be offered with either open-source Linux or Windows (Windows support was a change from the prototypes shown in SF). Windows versions would cost only about $50 more than Linux versions, or about $300, suggesting that Microsoft offered Windows at a big discount in order to get onto the Eee. Still, the price difference should give consumers an incentive to choose Linux.

The Eee will be the first laptop to carry the ASUSTeK brand rather than being built as an OEM product for other companies.

ZPower Innovates Laptop Batteries

A safer, lighter, smaller and recyclable battery for computers and phones is

poised for production.

ZPower, formerly known as Zinc Matrix Power, has developed advanced,

rechargeable silver-zinc batteries. Initially targeted for notebook computers,

cell phones, and MP3 players, ZPower batteries currently have 30% greater energy

density than traditional lithium-ion batteries. Intel is interested in promoting

the technology, and has made a 10% equity investment in the firm.

ZPower showed its wares at the recent Intel IDF conference in SF, including working silver-zinc laptop batteries that were half the thickness of current lithium-ion batteries. When in full production in 2008, these batteries will provide 20-30% more run time per charge. Production goals for 2009 are a 20-30% weight reduction and 30-50% greater battery life.

In addition to the performance benefits, ZPower batteries are inherently safer. The technology uses a water-based chemistry that is neither flammable nor toxic. The battery contains no lithium or flammable liquids and is therefore free from the problems of thermal runaway, fire, and danger of explosion. Silver-zinc batteries are not subject to airline restrictions.

The new batteries are also environmentally friendly. The primary elements used to

produce the batteries can be 100 percent recycled and re-used. The raw materials

recovered in the recycling process of silver-zinc batteries are the same quality

as those that went into the creation of the battery. The company plans to offer

cash incentives to consumers recycling the batteries.

Dr. Ross Dueber, a scientist at ZPower, told Linux Gazette that

the first production silver-zinc batteries will be about 10mm thick but

about the same weight as current batteries. Weight reduction will come

from better use of the materials and manufacturing efficiencies. The

first manufacturing partner will be Tyco Electronics. ZPower is currently

working with other leading manufacturers of notebook computers and cell

phones to incorporate silver-zinc technology in next generation

products.

ZPower won Intel's "Technology Innovation Accelerated" (TIA) Award

in the Mobility category at the 2006 Fall IDF conference. The company

was also recently named a "GoingGreen 100" winner by AlwaysOn.

Troy Renken, Vice President of Product Planning and Electronics at ZPower Inc.,

was a featured speaker in the Extended Battery Life panel at the Intel Developer

Forum (IDF) in Taipei, Taiwan on October 16th. The panel, titled "Realizing

the Vision of All Day and Beyond Battery Life for Mobile PCs," discussed

industry initiatives underway to extend battery life.

IBM and Linden Lab Partner on Open 3D Standards for Collaboration

IBM and Linden Lab, creator of the virtual world Second Life (www.secondlife.com), will work with a broad community of partners to drive open standards and interoperability that would let avatars -- the online personas of visitors to these online worlds -- move from one virtual world to another with ease, and to support applications of virtual world technology for business and society in commerce, collaboration, education, and more.

As more enterprises and consumers explore the 3D Internet, the ecosystem of virtual world hosts, application providers, and IT vendors needs to offer a variety of standards-based solutions to meet end user requirements. To support this, IBM and Linden Lab are committed to exploring the interoperability of virtual world platforms and technologies, and plan to work with industry-wide efforts to further expand the capabilities of virtual worlds.

"We have built the Second Life Grid as part of the evolution of the

Internet," said Ginsu Yoon, vice-president, Business Affairs, Linden

Lab. "Linden and IBM shares a vision that interoperability is key to the

continued expansion of the 3D Internet, and that this tighter

integration will benefit the entire industry. Our open source

development of interoperable formats and protocols will accelerate the

growth and adoption of all virtual worlds."

IBM and Linden Lab plan to work together on integrating virtual worlds with the current Web; driving security-rich transactions of virtual goods and services; working with the industry to enable interoperability between various virtual worlds; and building more stability and high quality of service into virtual world platforms. These are expected to be key concerns for organizations that want to take advantage of virtual worlds for commerce, collaboration, education, and other business applications.

More specifically, IBM and Linden Lab plan to collaborate on:

-

"Universal" Avatars: Exploring technology and standards for users of the 3D Internet to travel between different virtual worlds. Users could maintain the same avatar name, appearance, and other important attributes (digital assets, identity certificates, and more) across multiple worlds. The adoption of a universal avatar and associated services is a possible first step toward the creation of a truly interoperable 3D Internet.

-

Security-rich Transactions: Collaborating on the requirements for

standards-based software designed to enable the security-rich exchange of

assets in and across virtual worlds. This could allow users to

perform purchases or sales with other people in virtual worlds.

-

Integration with existing Web and business processes: Allowing

current business applications and data repositories, regardless of their

source, to function in virtual worlds.

-

Open standards for interoperability with the current Web: Open source development of interoperable formats and protocols. Open standards in this area are expected to allow virtual worlds to connect together, so that users can cross from one world to another, just as they can go from one Web page to another on the Internet today.

IBM has hosted discussions on virtual world interoperability, the role of

standards, and the potential of forming an industry-wide consortium open to

all. Linden Lab has formed an Architecture Working Group that describes the

road-map for the development of the Second Life Grid. This open collaboration

with the community allows users of Second Life to help define the direction of

an interoperable, Internet-scale architecture.

For more information about the Second Life Grid, visit

http://secondlifegrid.net/.

IBM Introduces its "Virtualization-Ready" System x Server

IBM has previewed the fourth generation of its virtualization chipset

technology, X4, to be available in a high-end, scalable server

leveraging the latest in quad-core processing technology from Intel. The

System x3950 M2 server will debut with a new embedded hypervisor capability,

enabling clients to easily deploy virtualized server applications right out of

the rack.

X4 chipsets mark significant advances in performance, availability, and

processing efficiencies for the IBM System x line of servers. X4 will enable x86

server configurations to fuel virtualization on high-end systems. Several other

new features will allow clients to easily adopt virtualization.

The new server will be ready for virtualization right out of the box, eliminating software setup and installation time. An internal USB interface will accommodate chip-based or "embedded" virtualization software preloaded on a 4GB USB flash storage device.

The new system offers double the memory slot capacity of the previous system and can host four times as much memory in a single chassis, enabling more virtualization workloads.

IBM has developed and released three generations of X-Architecture chipsets

since 1997, and remains the only top-tier vendor in the industry to incorporate

its own chipset in Intel-based servers. The third generation chipset, X3,

introduced in 2005, was optimized for server consolidation and enterprise

application software.

Sun Intros Smallest Enterprise-Class Four-Socket Quad-Core Server

In late September, Sun Microsystems introduced its first quad-core x64 systems, which deliver up to twice the expandability and compute power of other servers at half the size. The Sun Fire X4450 and Sun Fire X4150 servers, powered by Quad-Core Intel Xeon processors, solve critical problems in the datacenter by offering more performance, higher density, and better power efficiency than competing systems. Both servers can run Solaris, Linux, Windows, or VMware.

The Sun Fire X4450 server is the smallest four-socket 16-way rackmount server in a 2U form factor, and consumes as much as 50 percent less energy than other servers, resulting in lower energy and cooling costs. The Sun Fire X4150

server, powered by the Quad-Core Intel Xeon processor 5300 series, is a

two-socket 1U system with up to twice the memory, internal storage, and

networking connectivity as competitive two-socket 1U servers. With more than 1

terabyte of high-performance internal disk storage, it is a good solution for

database and other disk-intensive applications.

The Sun Fire X4150 server has entry-level pricing starting at $2,995. The Sun

Fire X4450 has entry-level pricing starting at $8,895. For more information on

the Sun Fire X4450 and X4150 servers, please visit:

http://www.sun.com/X4450 and

http://www.sun.com/X4150

A video of the server announcement is available here:

http://sunfeedroom.sun.com/linking/index.jsp?skin=oneclip&fr_story=FRsupt215388&hl=false

All video segments are available here:

http://sunfeedroom.sun.com [...]

At the announcement, Pat Gelsinger, Senior Vice-President and General Manager of Intel's Digital Enterprise Group, spoke about Intel's planned 45 nm developments. Sun also announced new Solaris features, including a graphical installer, and enhancements to its well-known DTrace facility, including integration with its NetBeans and Sun Studio developer tools.


Howard Dyckoff is a long-time IT professional with primary experience at

Fortune 100 and 200 firms. Before his IT career, he worked for Aviation

Week and Space Technology magazine and before that used to edit SkyCom, a

newsletter for astronomers and rocketeers. He hails from the Republic of

Brooklyn [and Polytechnic Institute] and now, after several trips to

Himalayan mountain tops, resides in the SF Bay Area with a large book

collection and several pet rocks.

Howard maintains the Technology-Events blog at

blogspot.com from which he contributes the Events listing for Linux

Gazette. Visit the blog to preview some of next month's NewsBytes

Events.

Copyright © 2007, Howard Dyckoff. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 144 of Linux Gazette, November 2007

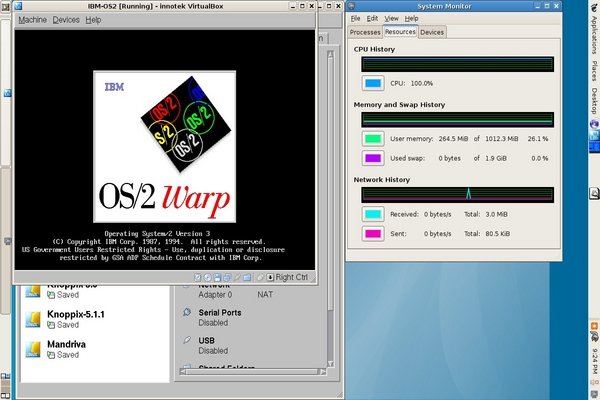

Virtualization made Easy

By Edgar Howell

Introduction

Recently, I finally found the time to check out something that had originally

been brought to my attention by an announcement in a local publication

early this year: Innotek's VirtualBox.

In short, this is an outstanding product that deserves consideration

by anybody in any way interested in virtualization.

Background

For a very long time, VMware has been the name in virtualization. A year or so ago, I played with their VMware Player, and what it could do was very impressive, but an OS under it was noticeably slower than running natively on the hardware. My understanding was that it could emulate any environment, but at a considerable price.

Xen has been the "other" contender for a while.

When I experimented with it, it seemed to address only servers

and to require modifications to both operating systems involved.

VirtualBox, as I understand it, is in a sense between the two.

Ignoring the optional "Guest Additions",

it needs no alteration to either OS.

Rather, it provides a layer of software that

makes available one particular virtual machine configuration and

can trap "dangerous" instructions and patch them on the fly,

so that the OS in the VM can execute without prior modification.

Since the offending code is patched rather than trapped every time, this only needs to be done once.

In my experience, this seems to work quite well.
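The "trap and patch once" idea can be illustrated with a toy model. This is a minimal sketch under loud assumptions, not how VirtualBox is actually implemented: guest code is modeled as lists of instruction names, the set of "dangerous" instructions and the `PatchingMonitor` class are hypothetical, and "patching" simply means replacing those instructions with a call into the monitor. The point is only that a patched block is cached, so a loop running the same code a thousand times pays for the patching once.

```python
# Toy model of "trap and patch once". This is NOT VirtualBox's real mechanism;
# it only illustrates why patched code does not need to be patched again.

# Hypothetical set of x86 instructions that must not run unmodified in a VM.
DANGEROUS = {"cli", "sti", "popf"}


class PatchingMonitor:
    def __init__(self):
        self.patch_cache = {}  # guest block address -> patched instruction list
        self.patch_count = 0   # how many times a block had to be scanned and patched

    def _patch(self, block):
        """Replace each dangerous instruction with a call into the monitor."""
        self.patch_count += 1
        return [f"call monitor_{ins}" if ins in DANGEROUS else ins for ins in block]

    def run_block(self, address, block):
        """Return the code that actually executes for the guest block at address."""
        if address not in self.patch_cache:  # first execution: scan and patch
            self.patch_cache[address] = self._patch(block)
        return self.patch_cache[address]     # every later execution: reuse


if __name__ == "__main__":
    guest_block = ["mov", "cli", "add", "sti"]
    vmm = PatchingMonitor()
    for _ in range(1000):  # the guest runs the same code in a hot loop
        code = vmm.run_block(0x1000, guest_block)
    print(code)             # ['mov', 'call monitor_cli', 'add', 'call monitor_sti']
    print(vmm.patch_count)  # 1
```

The cache keyed by block address is the whole trick: the expensive scan happens on first execution only, which is why the overhead stays small once the guest is up and running.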

Terminology

Typically, the term "host" refers to a combination of an

operating system and a PC or other computer (i.e., software + hardware),

as in the name of a GNU/Linux host in a network.

But in the context of virtualization:

•

"host" is the OS immediately interacting with the hardware

under which, in this case, VirtualBox has been installed;

•

"guest" is the OS installed in this environment and

accessing the virtual hardware made available by VirtualBox

running under control of the host.

The VirtualBox documentation talks about two states, "powered off" and "saved".

This is a simplification

that might cause some grief for those not used to dual-booting:

•

"Saved" is likely the state of greatest interest.

When you tell VirtualBox to save the machine state,

it is much like hibernating a PC (suspend to disk), which saves the current state

of the OS in the swap partition so that it can resume quickly instead of going through a full re-boot.

•

"Powered off" has precisely the same effect as

pulling the power plug on real hardware:

buffers will not be flushed, you almost certainly will lose data,

and on re-boot you will go through the fsck procedure.

This may make sense in some situations, such as when you have messed up

an installation so badly that you want to start over anyway.

On those -- likely rare -- occasions when you do not want to save

the current machine state of the guest OS,

you will in all probability want to shut it down the way you normally would,

whether via the GUI or as root with "shutdown -h now".

This leads to the state "powered off", but without loss of data.
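To keep the three ways of stopping a guest straight, here is a minimal Python sketch that simply encodes the descriptions above as a lookup table. The action labels and function names are hypothetical, chosen for illustration; they are not VirtualBox commands or API calls.

```python
from enum import Enum


class VMState(Enum):
    RUNNING = "running"
    SAVED = "saved"
    POWERED_OFF = "powered off"


# Ways of stopping a running guest, as described in the text:
# action label (hypothetical) -> (resulting state, does the guest's data survive?)
STOP_ACTIONS = {
    "save state": (VMState.SAVED, True),              # like hibernating a PC
    "shutdown -h now": (VMState.POWERED_OFF, True),   # clean shutdown inside the guest
    "power off": (VMState.POWERED_OFF, False),        # like pulling the plug
}


def stop(action):
    """Return (new_state, data_safe) for stopping a running VM with action."""
    if action not in STOP_ACTIONS:
        raise ValueError(f"unknown action: {action!r}")
    return STOP_ACTIONS[action]


def next_start(state, data_safe):
    """Describe what the next start of the VM will look like."""
    if state is VMState.SAVED:
        return "resumes exactly where it left off"
    if data_safe:
        return "boots normally"
    return "boots, runs fsck, and may have lost data"


if __name__ == "__main__":
    for action in STOP_ACTIONS:
        state, safe = stop(action)
        print(f"{action:16} -> {state.value:12} next start: {next_start(state, safe)}")
```

Both "shutdown -h now" and "power off" end in the same state; the difference lies entirely in whether the guest had a chance to flush its buffers first.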

Installation

This is professional-quality software with corresponding documentation.

Installation is extremely straightforward.

This is true of both VirtualBox itself and

installing virtual machines under it.

It works as described in the documentation, and is quite easy to use.

Nothing more needs to be said, other than that you should be sure to allow enough room

in the partition where the host resides.

Here are some statistics from a notebook:

Host partitions:

    Filesystem   1K-blocks      Used Available Use% Mounted on
    /dev/sda12    27774776  21330516   5033356  81% /
    tmpfs           518276         0    518276   0% /lib/init/rw
    udev             10240       116     10124   2% /dev
    tmpfs           518276         0    518276   0% /dev/shm
    /dev/sda9      4814936    109892   4460456   3% /shareEXT2

Guest machines available in the root partition:

    total 14488176
    -rw------- 1 web web   13634048 2007-09-06 11:30 Borland.vdi
    -rw------- 1 web web 1844457984 2007-09-18 10:50 Debian-4.0a.vdi
    -rw------- 1 web web 2926592512 2007-09-13 17:17 Debian-4.0.vdi
    -rw------- 1 web web 2346725888 2007-09-26 14:19 Debian-4.0-xfce.vdi
    -rw------- 1 web web 2905618944 2007-09-08 20:36 Fedora-Core-6.vdi
    -rw------- 1 web web      12800 2007-09-09 08:08 Knoppix-5.1.1.vdi
    -rw------- 1 web web 2422223360 2007-09-05 12:04 Kubuntu.vdi
    -rw------- 1 web web 2775593472 2007-09-10 12:58 Mandriva.vdi

By the way, the above are just "virtual drives" and do not reflect,

for example, the Debian Xfce and Knoppix ISOs that also take up space

in the almost 30GB partition.

Based on past experience,

I gave each machine a 3GB virtual drive that grows dynamically as needed,

rather than being allocated and formatted up front.
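To put these numbers in perspective, the following minimal Python sketch totals the sizes from the `ls -l` listing and checks them against the 3GB each dynamically growing drive was allowed. The file sizes and the partition size are copied from the listings above; reading "3GB" as 3 GiB (3 * 1024**3 bytes) is an assumption.

```python
# Sizes of the virtual drives, copied from the "ls -l" listing above.
LISTING = """\
-rw------- 1 web web 13634048 2007-09-06 11:30 Borland.vdi
-rw------- 1 web web 1844457984 2007-09-18 10:50 Debian-4.0a.vdi
-rw------- 1 web web 2926592512 2007-09-13 17:17 Debian-4.0.vdi
-rw------- 1 web web 2346725888 2007-09-26 14:19 Debian-4.0-xfce.vdi
-rw------- 1 web web 2905618944 2007-09-08 20:36 Fedora-Core-6.vdi
-rw------- 1 web web 12800 2007-09-09 08:08 Knoppix-5.1.1.vdi
-rw------- 1 web web 2422223360 2007-09-05 12:04 Kubuntu.vdi
-rw------- 1 web web 2775593472 2007-09-10 12:58 Mandriva.vdi
"""

GIB = 1024 ** 3
MAX_DRIVE = 3 * GIB                # assumption: "3GB" means 3 GiB
ROOT_PARTITION = 27774776 * 1024   # /dev/sda12 from df: 1K-blocks -> bytes


def vdi_sizes(listing):
    """Map file name -> size in bytes for every .vdi line of an ls -l listing."""
    sizes = {}
    for line in listing.splitlines():
        fields = line.split()
        # Fields: permissions, links, owner, group, size, date, time, name
        if len(fields) == 8 and fields[7].endswith(".vdi"):
            sizes[fields[7]] = int(fields[4])
    return sizes


if __name__ == "__main__":
    sizes = vdi_sizes(LISTING)
    total = sum(sizes.values())
    print(f"{len(sizes)} drives, {total} bytes = {total / GIB:.2f} GiB")
    print(f"share of root partition: {100 * total / ROOT_PARTITION:.1f}%")
    over = [name for name, size in sizes.items() if size > MAX_DRIVE]
    print("drives over the 3 GiB limit:", over or "none")
```

For this listing the eight drives add up to 15,234,859,008 bytes, just over 14 GiB or a bit more than half of the root partition, and none of them has yet grown to its 3 GiB ceiling.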

A Debian Example

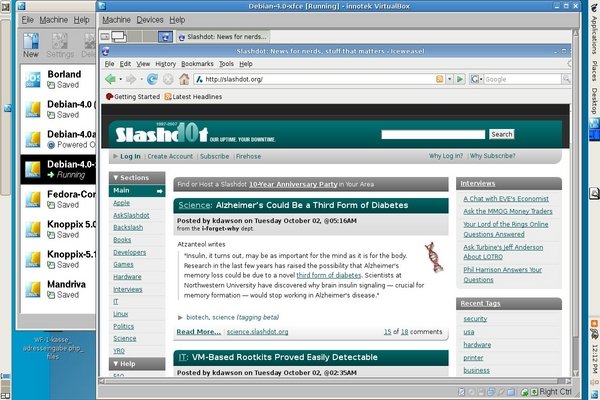

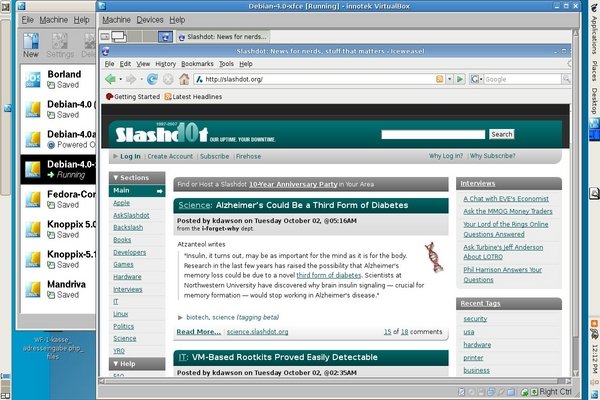

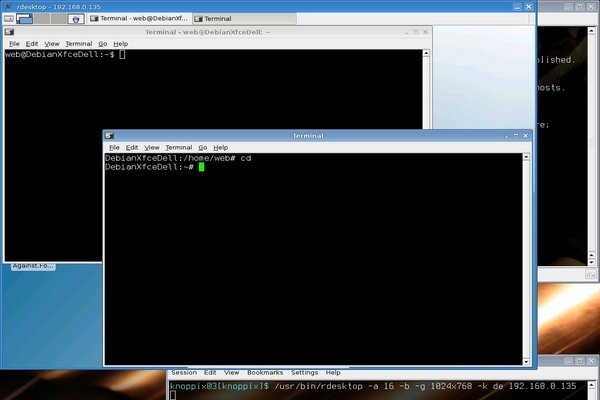

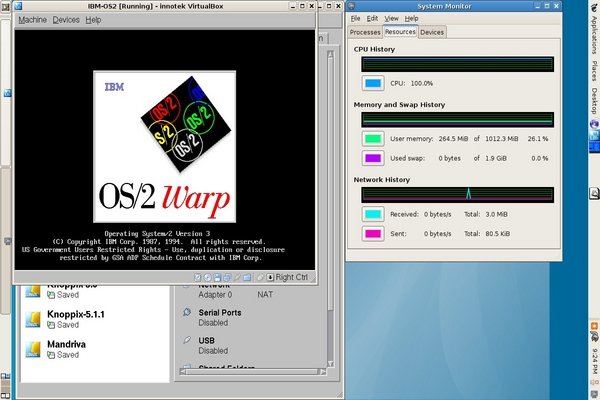

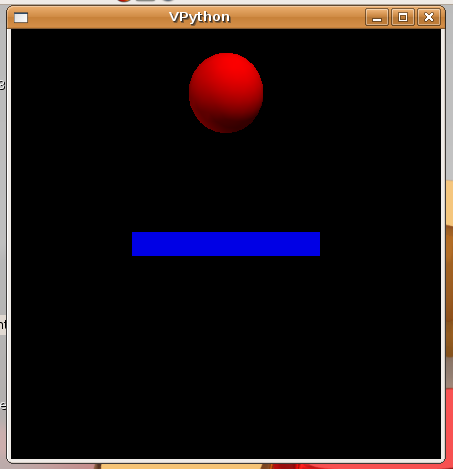

Here are a couple of screen-shots of an Xfce-based Debian installation

under VirtualBox.

This is what the display shows once VirtualBox has started a VM.

You can see a bit of the VirtualBox window, the Debian guest itself,

and to the extreme left and right the task bars of the Debian host,

which I moved there only because of the proportions of the notebook display.

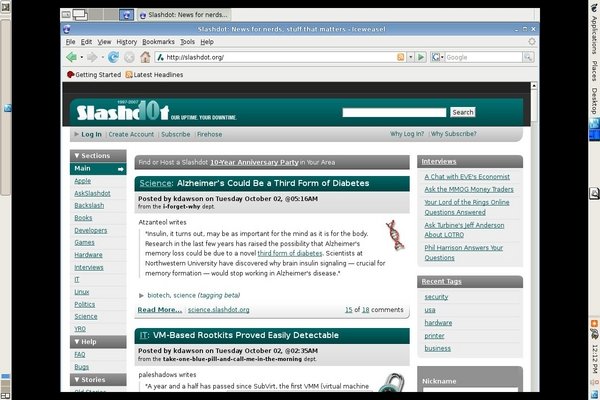

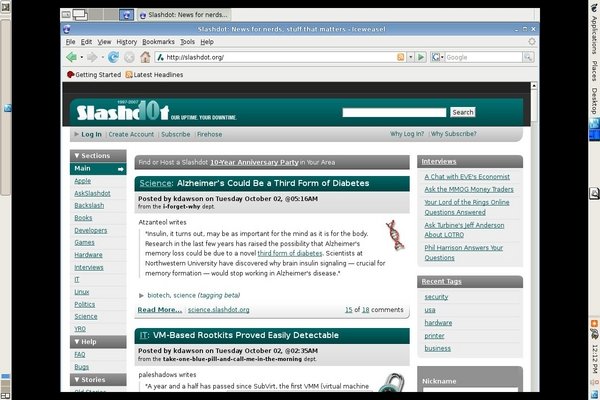

And here it is full-screen.

Not a great deal of difference:

on a notebook at least, full-screen doesn't do much more than

hide everything other than the VM of interest and the task bars of the host.

In particular, the VirtualBox task bar with the buttons to shut down the VM

is no longer visible.

Guest Additions

The so-called "Guest Additions" install code in the guest

OS that improves how it works together with the host.

Innotek recommends installing them, and I concur.

The major enhancements are:

•

Coordination of time on VM and host

While there is no absolute need for the VM to reflect the

correct time of day -- indeed, the IBM-DOS/Borland VM shown

in the VirtualBox window in the first screen-shot above

can't even display the correct year! -- it seems desirable.

•

Sharing directories between VM and host

The VirtualBox documentation refers to "shared folders", but they are

directories, and they are very useful if you need to move any data from one

environment to another.

•

Elimination of "mouse capture"

Without the Guest Additions, a VirtualBox VM will not let the mouse move the cursor

outside the area it occupies on the display.

If you have more than one VM active on the display at one time,

this can be a feature (the capture is released with the "host" key, as documented).

I prefer to use the workspaces or desktops or whatever the dickens

the GUI-VTs are called to house the one or two VMs simultaneously active

(as on the bottom part of the left-most Debian host task bar, above).

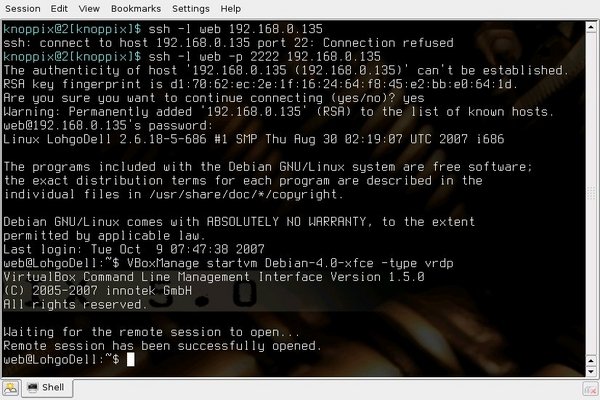

Remote Access

Remote access is very easy, and could be a way of getting a lot more

use out of old notebooks or PCs.

All you really need is a host machine with enough power to support a

couple of users, which is pretty much any PC by now.

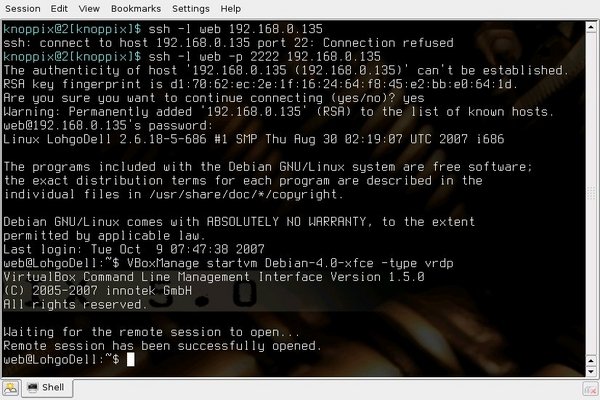

You can either start a virtual machine via the GUI on the host or

use ssh -- you need remote access anyhow -- as in the following

from a PC under Knoppix via the LAN to the notebook:

Once the virtual machine is running (a matter of a few seconds,

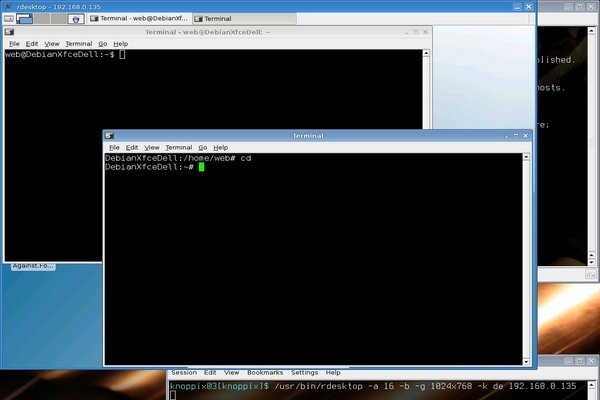

if it had previously been ended with "save state"),

you can logon to it with the command seen in the tiny window at the bottom:

At this point, response times are limited only by the network.

Internet access is available if the VM has it via the host.

The only caveat is that it is very easy to forget to shut down the VM properly;

terminating all connections to the VM without doing this

leads to the state "aborted", possible loss of data,

and the inevitable fsck delay on re-boot.

Security

Having no claim to expertise in security matters, I hesitate to offer suggestions,

and offer only the following comments.

To the extent one trusts Innotek or has looked at the source code,

VirtualBox seems to improve security.

With NAT to the outside world, the VM should only see

responses to its own requests -- no connection requests -- from outside the host:

pretty much the behavior of a paranoid firewall.
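The "responses only" behavior can be sketched as a connection-tracking table. This is a simplified model, not VirtualBox's NAT code: real NAT also rewrites addresses and ports and expires idle entries, and the `NatFilter` class and the documentation-range IP addresses below are made up for illustration.

```python
class NatFilter:
    """Toy stateful filter: the guest may connect out; outsiders may only reply."""

    def __init__(self):
        # Flows the guest itself opened: (local_port, remote_host, remote_port)
        self.open_flows = set()

    def outbound(self, local_port, remote_host, remote_port):
        """Guest opens a connection; remember it so replies can come back."""
        self.open_flows.add((local_port, remote_host, remote_port))

    def inbound_allowed(self, remote_host, remote_port, local_port):
        """Let a packet in only if it answers a flow the guest started."""
        return (local_port, remote_host, remote_port) in self.open_flows


if __name__ == "__main__":
    nat = NatFilter()
    nat.outbound(40000, "203.0.113.5", 80)                  # guest browses a web server
    print(nat.inbound_allowed("203.0.113.5", 80, 40000))    # True: a response
    print(nat.inbound_allowed("198.51.100.7", 12345, 22))   # False: unsolicited
```

Nothing from outside can reach the guest unless the guest spoke first, which is exactly the "paranoid firewall" property.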

Since VirtualBox supports snapshots, once the VM is set up it is possible

to take a snapshot and later re-boot the virtual machine from it, thereby eliminating any

changes made to the environment in the meantime, about as effectively as using

a live CD such as Knoppix to browse the Internet.
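Why reverting to a snapshot throws away whatever happened afterwards can be shown with a copy-on-write model: the disk as it was at snapshot time is left untouched, and all later writes go to a separate overlay that can simply be discarded. The sketch below is a toy illustration of that general technique using a hypothetical `SnapshotDisk` class; it makes no claim about VirtualBox's actual on-disk format.

```python
class SnapshotDisk:
    """Toy copy-on-write disk: a frozen base image plus an overlay of new writes."""

    def __init__(self, base_sectors):
        self.base = dict(base_sectors)  # sector number -> contents at snapshot time
        self.overlay = {}               # sectors written since the snapshot

    def write(self, sector, data):
        self.overlay[sector] = data     # the base image is never modified

    def read(self, sector):
        # Newer data in the overlay hides the snapshot's copy of the same sector.
        return self.overlay.get(sector, self.base.get(sector))

    def revert(self):
        """Go back to the snapshot by discarding every change made since."""
        self.overlay.clear()


if __name__ == "__main__":
    disk = SnapshotDisk({0: "boot loader", 1: "clean browser profile"})
    disk.write(1, "profile after a day of browsing")
    print(disk.read(1))  # profile after a day of browsing
    disk.revert()
    print(disk.read(1))  # clean browser profile
```

Because the snapshot image itself is never written to, anything picked up while browsing lives only in the overlay and disappears on revert, much as a live CD forgets everything at power-off.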

To further improve security, one can remove lots of software from

the host.

After all, it only functions as an intermediary and doesn't really need

a browser, for example.

However, keep in mind the reason that IPCop refuses to share a hard disk

with any other OS:

the more software on the hardware, the greater the risk of failure somewhere.

And if someone should somehow manage to break into your host with sufficient

privileges, he could easily send himself all of your VMs

to be examined off-line at his leisure!

As usual, your call.

Use in Practice

Since first installing VirtualBox early in August, with a Debian 4.0 host and,

among others, a Debian 4.0 guest,

I have done easily 80-90% of my

Internet access in this environment on a notebook.

On rare occasion there has been disk I/O that the host attributed