- Table of Contents

- Introduction

- 1. Bash and Bash scripts

- 1.1. Common shell programs

- 1.2. Advantages of the Bourne Again SHell

- 1.3. Executing commands

- 1.4. Building blocks

- 1.5. Developing good scripts

- 1.6. Summary

- 1.7. Exercises

- 2. Writing and debugging scripts

- 2.1. Creating and running a script

- 2.2. Script basics

- 2.3. Debugging Bash scripts

- 2.4. Summary

- 2.5. Exercises

- 3. The Bash environment

- 3.1. Shell initialization files

- 3.2. Variables

- 3.3. Quoting characters

- 3.4. Shell expansion

- 3.5. Aliases

- 3.6. More Bash options

- 3.7. Summary

- 3.8. Exercises

- 4. Regular expressions

- 4.1. Regular expressions

- 4.2. Examples using grep

- 4.3. Pattern matching using Bash features

- 4.4. Summary

- 4.5. Exercises

- 5. The GNU sed stream editor

- 5.1. Introduction

- 5.2. Interactive editing

- 5.3. Non-interactive editing

- 5.4. Summary

- 5.5. Exercises

- 6. The GNU awk programming language

- 6.1. Getting started with gawk

- 6.2. The print program

- 6.3. Gawk variables

- 6.4. Summary

- 6.5. Exercises

- 7. Conditional statements

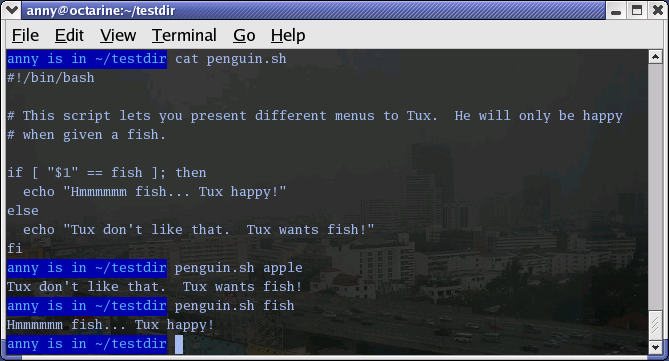

- 7.1. Introduction to if

- 7.2. More advanced if usage

- 7.3. Using case statements

- 7.4. Summary

- 7.5. Exercises

- 8. Writing interactive scripts

- 8.1. Displaying user messages

- 8.2. Catching user input

- 8.3. Summary

- 8.4. Exercises

- 9. Repetitive tasks

- 9.1. The for loop

- 9.2. The while loop

- 9.3. The until loop

- 9.4. I/O redirection and loops

- 9.5. Break and continue

- 9.6. Making menus with the select built-in

- 9.7. The shift built-in

- 9.8. Summary

- 9.9. Exercises

- 10. More on variables

- 10.1. Types of variables

- 10.2. Array variables

- 10.3. Operations on variables

- 10.4. Summary

- 10.5. Exercises

- 11. Functions

- 11.1. Introduction

- 11.2. Examples of functions in scripts

- 11.3. Summary

- 11.4. Exercises

- 12. Catching signals

- A. Shell Features

- A.1. Common features

- A.2. Differing features

- Glossary

- Index

- List of Tables

- 1. Typographic and usage conventions

- 1-1. Overview of programming terms

- 2-1. Overview of set debugging options

- 3-1. Reserved Bourne shell variables

- 3-2. Reserved Bash variables

- 3-3. Special bash variables

- 3-4. Arithmetic operators

- 4-1. Regular expression operators

- 5-1. Sed editing commands

- 5-2. Sed options

- 6-1. Formatting characters for gawk

- 7-1. Primary expressions

- 7-2. Combining expressions

- 8-1. Escape sequences used by the echo command

- 8-2. Options to the read built-in

- 10-1. Options to the declare built-in

- 12-1. Control signals in Bash

- 12-2. Common kill signals

- A-1. Common Shell Features

- A-2. Differing Shell Features

- List of Figures

- 1. Bash Guide for Beginners front cover

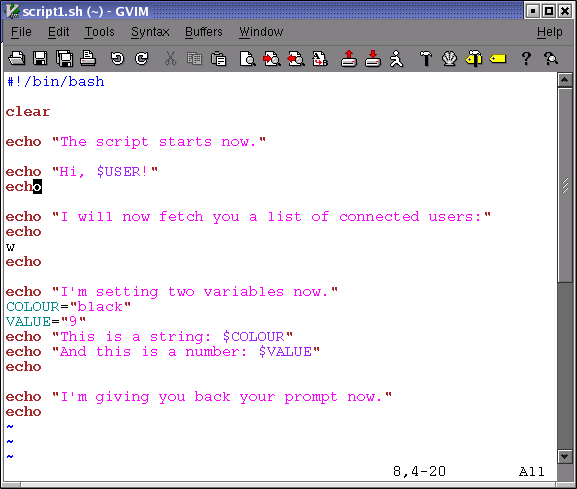

- 2-1. script1.sh

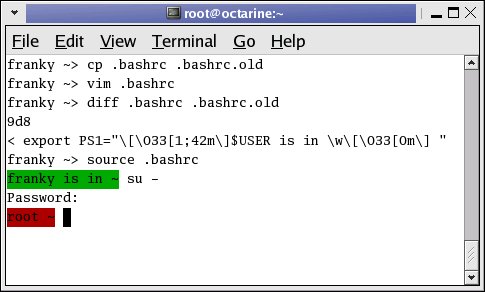

- 3-1. Different prompts for different users

- 6-1. Fields in awk

- 7-1. Testing of a command line argument with if

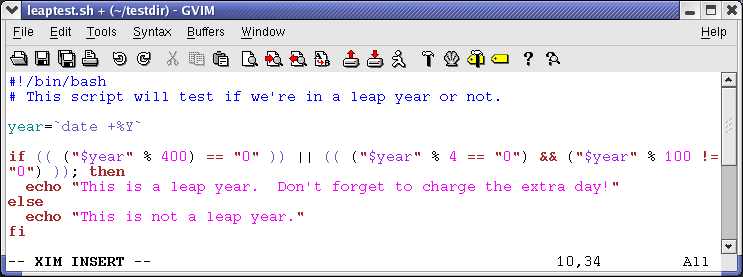

- 7-2. Example using Boolean operators

Introduction

1. Why this guide?

The primary reason for writing this document is that a lot of readers feel the existing HOWTO is too short and incomplete, while the Bash Scripting guide is too much of a reference work. There is nothing in between these two extremes. I also wrote this guide on the general principle that not enough free basic courses are available, though they should be.

This is a practical guide which, while not always being too serious, tries to give real-life instead of theoretical examples. I partly wrote it because I don't get excited about stripped-down and oversimplified examples written by people who know what they are talking about, showing some really cool Bash feature so far out of its context that you can never use it in practical circumstances. You can read that sort of stuff after finishing this book, which contains exercises and examples that will help you survive in the real world.

From my experience as a UNIX/Linux user, system administrator and trainer, I know that people can have years of daily interaction with their systems without having the slightest knowledge of task automation. Thus they often think that UNIX is not user-friendly, and even worse, they get the impression that it is slow and old-fashioned. This guide can help remedy that problem as well.

2. Who should read this book?

Everybody working on a UNIX or UNIX-like system who wants to make life easier on themselves, power users and sysadmins alike, can benefit from reading this book. Readers who already have a grasp of working the system using the command line will learn the ins and outs of shell scripting that ease execution of daily tasks. System administration relies a great deal on shell scripting; common tasks are often automated using simple scripts. This document is full of examples that will encourage you to write your own and that will inspire you to improve on existing scripts.

Prerequisites/not in this course:

You should be an experienced UNIX or Linux user, familiar with basic commands, man pages and documentation

Being able to use a text editor

Understand system boot and shutdown processes, init and initscripts

Create users and groups, set passwords

Permissions, special modes

Understand naming conventions for devices, partitioning, mounting/unmounting file systems

Adding/removing software on your system

See Introduction to Linux (or your local TLDP mirror) if you haven't mastered one or more of these topics. Additional information can be found in your system documentation (man and info pages), or at the Linux Documentation Project.

3. New versions, translations and availability

The most recent edition can be found at http://tille.garrels.be/training/bash/. You should find the same version at http://tldp.org/LDP/Bash-Beginners-Guide/html/index.html.

This guide is available in print from Fultus.com.

This guide has been translated:

Chinese translation at http://xiaowang.net/bgb-cn/, by Wang Wei.

Ukrainian translation at http://docs.linux.org.ua/index.php/LDP:Bash_beginners_guide, by Yaroslav Fedevych and his team.

A French translation is in the making and will be linked to as soon as it is finished.

4. Revision History

| Revision | Date | Revised by | Changes |
|---|---|---|---|
| 1.11 | 2008-12-27 | MG | Processed input from readers. |
| 1.10 | 2008-06-06 | MG | Address change. |
| 1.9 | 2006-10-10 | MG | Incorporated reader remarks, added index using DocBook tags. |
| 1.8 | 2006-03-15 | MG | Clarified example in chapter 4, corrected here document in chapter 9, general checks and correction of typos, added links to Chinese and Ukrainian translations, note and stuff to know about awk in chapter 6. |
| 1.7 | 2005-09-05 | MG | Corrected typos in chapters 3, 6 and 7, incorporated user remarks, added a note in chapter 7. |
| 1.6 | 2005-03-01 | MG | Minor debugging, added more keywords, info about new Bash 3.0, took out blank image. |
| 1.0 | 2004-04-27 | TM | Initial release for LDP; more exercises, more markup, fewer errors and less abuse; added glossary. |
| 1.0-beta | 2003-04-20 | MG | Pre-release. |

5. Contributions

Thanks to all the friends who helped (or tried to) and to my husband; your encouraging words made this work possible. Thanks to all the people who submitted bug reports, examples and remarks - among many, many others:

Hans Bol, one of the groupies

Mike Sim, remarks on style

Dan Richter, for array examples

Gerg Ferguson, for ideas on the title

Mendel Leo Cooper, for making room

#linux.be, for keeping my feet on the ground

Frank Wang, for his detailed remarks on all the things I did wrong ;-)

Special thanks to Tabatha Marshall, who volunteered to do a complete review and spell and grammar check. We make a great team: she works when I sleep. And vice versa ;-)

6. Feedback

Missing information, missing links, missing characters, remarks? Mail it to the maintainer of this document.

7. Copyright information

Copyright (c) 2002-2007, Machtelt Garrels
All rights reserved.

Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

- Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.
- Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.
- Neither the name of the author, Machtelt Garrels, nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission.

THIS SOFTWARE IS PROVIDED BY THE AUTHOR AND CONTRIBUTORS "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE AUTHOR AND CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

The author and publisher have made every effort in the preparation of this book to ensure the accuracy of the information. However, the information contained in this book is offered without warranty, either express or implied. Neither the author nor the publisher nor any dealer or distributor will be held liable for any damages caused or alleged to be caused either directly or indirectly by this book.

The logos, trademarks and symbols used in this book are the properties of their respective owners.

8. What do you need?

bash, available from http://www.gnu.org/directory/GNU/. The Bash shell is available on nearly every Linux system, and can these days be found on a wide variety of UNIX systems.

Bash compiles easily if you need to build your own copy; it has been tested on a wide variety of UNIX, Linux, MS Windows and other systems.

9. Conventions used in this document

The following typographic and usage conventions occur in this text:

Table 1. Typographic and usage conventions

| Text type | Meaning |
|---|---|
| "Quoted text" | Quotes from people, quoted computer output. |
| terminal view | Literal computer input and output captured from the terminal, usually rendered with a light grey background. |
| command | Name of a command that can be entered on the command line. |
| VARIABLE | Name of a variable or pointer to content of a variable, as in $VARNAME. |
| option | Option to a command, as in "the -a option to the ls command". |
| argument | Argument to a command, as in "read man ls". |
| command options arguments | Command synopsis or general usage, on a separate line. |
| filename | Name of a file or directory, for example "Change to the /usr/bin directory." |
| Key | Keys to hit on the keyboard, such as "type Q to quit". |
| Button | Graphical button to click. |
| Menu->Choice | Choice to select from a graphical menu. |
| Terminology | Important term or concept: "The Linux kernel is the heart of the system." |
| \ (backslash) | The backslash in a terminal view or command synopsis indicates an unfinished line. In other words, if you see a long command that is cut into multiple lines, \ means "Don't press Enter yet!" |
| See Chapter 1 | Link to related subject within this guide. |
| The author | Clickable link to an external web resource. |

10. Organization of this document

This guide discusses concepts useful in the daily life of the serious Bash user. While a basic knowledge of the usage of the shell is required, we start with a discussion of the basic shell components and practices in the first three chapters.

Chapters four to six are discussions of basic tools that are commonly used in shell scripts.

Chapters seven to twelve discuss the most common constructs in shell scripts.

All chapters come with exercises that will test your preparedness for the next chapter.

Chapter 1: Bash basics: why Bash is so good, building blocks, first guidelines on developing good scripts.

Chapter 2: Script basics: writing and debugging.

Chapter 3: The Bash Environment: initialization files, variables, quoting characters, shell expansion order, aliases, options.

Chapter 4: Regular expressions: an introduction.

Chapter 5: Sed: an introduction to the sed stream editor.

Chapter 6: Awk: introduction to the awk programming language.

Chapter 7: Conditional statements: constructs used in Bash to test conditions.

Chapter 8: Interactive scripts: making scripts user-friendly, catching user input.

Chapter 9: Executing commands repetitively: constructs used in Bash to automate command execution.

Chapter 10: Advanced variables: specifying variable types, introduction to arrays of variables, operations on variables.

Chapter 11: Functions: an introduction.

Chapter 12: Catching signals: introduction to process signalling, trapping user-sent signals.

Chapter 1. Bash and Bash scripts

1.1. Common shell programs

1.1.1. General shell functions

The UNIX shell program interprets user commands, which are either directly entered by the user, or which can be read from a file called the shell script or shell program. Shell scripts are interpreted, not compiled. The shell reads commands from the script line by line and searches for those commands on the system (see Section 1.2), while a compiler converts a program into machine-readable form, an executable file, which may then be used in a shell script.

Apart from passing commands to the kernel, the main task of a shell is providing a user environment, which can be configured individually using shell resource configuration files.

1.1.2. Shell types

Just like people know different languages and dialects, your UNIX system will usually offer a variety of shell types:

sh or Bourne Shell: the original shell still used on UNIX systems and in UNIX-related environments. This is the basic shell, a small program with few features. While this is not the standard shell, it is still available on every Linux system for compatibility with UNIX programs.

bash or Bourne Again shell: the standard GNU shell, intuitive and flexible. Probably most advisable for beginning users while being at the same time a powerful tool for the advanced and professional user. On Linux, bash is the standard shell for common users. This shell is a so-called superset of the Bourne shell, a set of add-ons and plug-ins. This means that the Bourne Again shell is compatible with the Bourne shell: commands that work in sh also work in bash. However, the reverse is not always the case. All examples and exercises in this book use bash.

csh or C shell: the syntax of this shell resembles that of the C programming language. Sometimes asked for by programmers.

tcsh or TENEX C shell: a superset of the common C shell, enhancing user-friendliness and speed. That is why some also call it the Turbo C shell.

ksh or the Korn shell: sometimes appreciated by people with a UNIX background. A superset of the Bourne shell; with standard configuration a nightmare for beginning users.

The file /etc/shells gives an overview of known shells on a Linux system:

mia:~> cat /etc/shells
/bin/bash
/bin/sh
/bin/tcsh
/bin/csh

Your default shell is set in the /etc/passwd file, like this line for user mia:

mia:L2NOfqdlPrHwE:504:504:Mia Maya:/home/mia:/bin/bash
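The login shell is the seventh colon-separated field of such a passwd entry. The sketch below extracts it from the sample line for mia. The function name login_shell is hypothetical. On systems where accounts also come from other sources, such as LDAP, `getent passwd` is usually a more complete source than reading /etc/passwd directly.

```bash
#!/bin/bash
# Hypothetical helper: print field 7 (the login shell) of a passwd-style line.
login_shell() {
    cut -d: -f7 <<< "$1"
}

login_shell 'mia:L2NOfqdlPrHwE:504:504:Mia Maya:/home/mia:/bin/bash'   # /bin/bash

# List the shells the system knows about, skipping comment lines, if the file is readable.
[ -r /etc/shells ] && grep -v '^#' /etc/shells
```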

To switch from one shell to another, just enter the name of the new shell in the active terminal. The system finds the directory where the name occurs using the PATH settings, and since a shell is an executable file (program), the current shell activates it and it gets executed. A new prompt is usually shown, because each shell has its typical appearance:

mia:~> tcsh
[mia@post21 ~]$

1.2. Advantages of the Bourne Again SHell

1.2.1. Bash is the GNU shell

The GNU project (GNU's Not UNIX) provides tools for UNIX-like system administration which are free software and comply with UNIX standards.

Bash is an sh-compatible shell that incorporates useful features from the Korn shell (ksh) and C shell (csh). It is intended to conform to the IEEE POSIX P1003.2/ISO 9945.2 Shell and Tools standard. It offers functional improvements over sh for both programming and interactive use; these include command line editing, unlimited size command history, job control, shell functions and aliases, indexed arrays of unlimited size, and integer arithmetic in any base from two to sixty-four. Bash can run most sh scripts without modification.

Like the other GNU projects, the bash initiative was started to preserve, protect and promote the freedom to use, study, copy, modify and redistribute software. It is generally known that such conditions stimulate creativity. This was also the case with the bash program, which has a lot of extra features that other shells can't offer.

1.2.2. Features only found in bash

1.2.2.1. Invocation

In addition to the single-character shell command line options which can generally be configured using the set shell built-in command, there are several multi-character options that you can use. We will come across a couple of the more popular options in this and the following chapters; the complete list can be found in the Bash info pages.

1.2.2.2. Bash startup files

Startup files are scripts that are read and executed by Bash when it starts. The following subsections describe different ways to start the shell, and the startup files that are read in each case.

1.2.2.2.1. Invoked as an interactive login shell, or with `--login'

Interactive means you can enter commands. The shell is not running because a script has been activated. A login shell means that you got the shell after authenticating to the system, usually by giving your user name and password.

Files read:

/etc/profile

~/.bash_profile, ~/.bash_login or ~/.profile: first existing readable file is read

~/.bash_logout upon logout.

Error messages are printed if configuration files exist but are not readable. If a file does not exist, bash searches for the next.
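The lookup order for the personal login file can be mimicked with a small function. This is only a sketch: login_file is a hypothetical name, and it merely reports the first readable file instead of reading it the way Bash does.

```bash
#!/bin/bash
# Hypothetical helper: print the first readable personal login file,
# checked in the same order a login shell uses.
login_file() {
    local f
    for f in ~/.bash_profile ~/.bash_login ~/.profile; do
        if [ -r "$f" ]; then
            echo "$f"
            return 0
        fi
    done
    return 1    # none of the three files is readable
}

login_file || echo "no personal login file found"
```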

1.2.2.2.2. Invoked as an interactive non-login shell

A non-login shell means that you did not have to authenticate to the system. For instance, when you open a terminal using an icon, or a menu item, that is a non-login shell.

Files read:

~/.bashrc

This file is usually referred to in ~/.bash_profile:

if [ -f ~/.bashrc ]; then . ~/.bashrc; fi

See Chapter 7 for more information on the if construct.

1.2.2.2.3. Invoked non-interactively

All scripts use non-interactive shells. They are programmed to do certain tasks and cannot be instructed to do other jobs than those for which they are programmed.

Files read:

defined by BASH_ENV

PATH is not used to search for this file, so if you want to use it, best refer to it by giving the full path and file name.
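The effect of BASH_ENV can be seen with bash -c, which starts a non-interactive shell. A minimal sketch, assuming a throwaway file created with mktemp. The variable GREETING is only an illustration.

```bash
#!/bin/bash
# Create a throwaway startup file; mktemp returns its full path.
envfile=$(mktemp)
echo 'GREETING="hello from BASH_ENV"' > "$envfile"

# The non-interactive shell reads the file named in BASH_ENV before
# running its command. A full path is used because PATH is not searched.
BASH_ENV="$envfile" bash -c 'echo "$GREETING"'    # hello from BASH_ENV

rm -f "$envfile"
```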

1.2.2.2.4. Invoked with the sh command

Bash tries to behave as the historical Bourne sh program while conforming to the POSIX standard as well.

Files read:

/etc/profile

~/.profile

When invoked interactively, the ENV variable can point to extra startup information.

1.2.2.2.5. POSIX mode

This option is enabled either using the set built-in:

set -o posix

or by calling the bash program with the --posix option. Bash will then try to conform as closely as possible to the POSIX standard for shells. Setting the POSIXLY_CORRECT variable does the same.

Files read:

defined by ENV variable.
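The ways of entering POSIX mode can each be checked in a fresh shell, because shopt -oq posix succeeds only when the posix option is on. A sketch, assuming POSIXLY_CORRECT is not already set in your environment. Otherwise the first line reports "on" as well.

```bash
#!/bin/bash
# Command string that reports whether the posix option is set.
check='shopt -oq posix && echo "POSIX mode on" || echo "POSIX mode off"'

bash -c "$check"                      # POSIX mode off
bash --posix -c "$check"              # POSIX mode on
POSIXLY_CORRECT=1 bash -c "$check"    # POSIX mode on
bash -c "set -o posix; $check"        # POSIX mode on
```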

1.2.2.2.6. Invoked remotely

Files read when invoked by rshd:

~/.bashrc

Avoid use of r-tools

Be aware of the dangers when using tools such as rlogin, telnet, rsh and rcp. They are intrinsically insecure because confidential data is sent over the network unencrypted. If you need tools for remote execution, file transfer and so on, use an implementation of Secure SHell, generally known as SSH, freely available from http://www.openssh.org. Different client programs are available for non-UNIX systems as well; see your local software mirror.

1.2.2.3. Interactive shells

1.2.2.3.1. What is an interactive shell?

An interactive shell generally reads from, and writes to, a user's terminal: input and output are connected to a terminal. Bash starts in interactive mode when the bash command is called without non-option arguments and without the -c option, which supplies a string to read commands from. The exception is a shell invoked with -s to read commands from standard input: there, non-option arguments are allowed and set the positional parameters (see Chapter 3).

1.2.2.3.2. Is this shell interactive?

Test by looking at the content of the special parameter -. It contains an 'i' when the shell is interactive:

eddy:~> echo $-
himBH

In non-interactive shells, the prompt, PS1, is unset.
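The same test can be wrapped in a function that scripts and startup files use to decide whether interactive-only settings apply. A sketch: is_interactive is a hypothetical name.

```bash
#!/bin/bash
# Hypothetical helper: succeeds (returns 0) only when $- contains the letter i.
is_interactive() {
    [[ $- == *i* ]]
}

if is_interactive; then
    echo "interactive shell"
else
    echo "non-interactive shell"    # what a script prints
fi
```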

1.2.2.3.3. Interactive shell behavior

Differences in interactive mode:

Bash reads startup files.

Job control enabled by default.

Prompts are set. PS2 is enabled for multi-line commands and is usually set to ">". This is also the prompt you get when the shell thinks you entered an unfinished command, for instance when you forget a closing quote or leave out a required part of a command structure.

Commands are by default read from the command line using readline.

Bash checks the ignoreeof shell option instead of exiting immediately upon receiving EOF (End Of File) when reading a command.

Command history and history expansion are enabled by default. History is saved in the file pointed to by HISTFILE when the shell exits. By default, HISTFILE points to ~/.bash_history.

Alias expansion is enabled.

In the absence of traps, the SIGTERM signal is ignored.

In the absence of traps, SIGINT is caught and handled. Thus, typing Ctrl+C, for example, will not quit your interactive shell.

Sending SIGHUP signals to all jobs on exit is configured with the huponexit option.

Commands are executed as soon as they are read.

Bash checks for mail periodically.

Bash can be configured (with set -u) to treat references to unset variables as errors and exit. In interactive mode, such an error is reported but does not cause the shell to exit.

When shell built-in commands encounter redirection errors, this will not cause the shell to exit.

Special built-ins returning errors when used in POSIX mode don't cause the shell to exit. The built-in commands are listed in Section 1.3.2.

Failure of exec will not exit the shell.

Parser syntax errors don't cause the shell to exit.

Simple spell check for the arguments to the cd built-in is enabled by default.

Automatic exit, after the number of seconds specified in the TMOUT variable has passed without input, is enabled.

More information:

See Chapter 12 for more about signals.

Section 3.4 discusses the various expansions performed upon entering a command.

1.2.2.4. Conditionals

Conditional expressions are used by the [[ compound command and by the test and [ built-in commands.

Expressions may be unary or binary. Unary expressions are often used to examine the status of a file. You only need one object, for instance a file, to do the operation on.

There are string operators and numeric comparison operators as well; these are binary operators, requiring two objects to do the operation on. If the FILE argument to one of the primaries is in the form /dev/fd/N, then file descriptor N is checked. If the FILE argument to one of the primaries is one of /dev/stdin, /dev/stdout or /dev/stderr, then file descriptor 0, 1 or 2 respectively is checked.
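The operator types can be compared side by side in a small script. A sketch: check_file and compare are hypothetical helper names, and the scratch file comes from mktemp.

```bash
#!/bin/bash
# Unary operator: one operand, here a file.
check_file() {
    if [ -f "$1" ]; then
        echo "$1 is a regular file"
    else
        echo "$1 is not a regular file"
    fi
}

# Binary operators: two operands, compared as numbers (-lt) or as strings (=).
compare() {
    if [[ $1 -lt $2 ]]; then
        echo "$1 is less than $2"
    elif test "$1" = "$2"; then
        echo "$1 equals $2"
    else
        echo "$1 is greater than $2"
    fi
}

tmp=$(mktemp)
check_file "$tmp"    # ... is a regular file
check_file /         # / is not a regular file
compare 3 7          # 3 is less than 7

# A FILE argument of the form /dev/fd/N makes the test examine descriptor N.
exec 3< "$tmp"
[ -e /dev/fd/3 ] && echo "descriptor 3 is open"
exec 3<&-
[ -e /dev/fd/3 ] || echo "descriptor 3 is closed"
rm -f "$tmp"
```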

Conditionals are discussed in detail in Chapter 7.

More information about file descriptors can be found in Section 8.2.3.

1.2.2.5. Shell arithmetic

The shell allows arithmetic expressions to be evaluated, as one of the shell expansions or by the let built-in.

Evaluation is done in fixed-width integers with no check for overflow, though division by 0 is trapped and flagged as an error. The operators and their precedence and associativity are the same as in the C language; see Chapter 3.
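The following sketch shows the main properties just described. The overflow line assumes the usual 64-bit integers, so its exact output is left out.

```bash
#!/bin/bash
# Arithmetic expansion follows C precedence: 5 * 2 is evaluated first.
sum=$(( 7 + 5 * 2 ))
echo "$sum"                  # 17

# The let built-in evaluates the same kind of expression.
let "product = 6 * 7"
echo "$product"              # 42

# Integers only: division truncates.
echo $(( 17 / 5 ))           # 3

# base#number notation accepts bases from 2 to 64.
echo $(( 2#1010 ))           # 10
echo $(( 16#ff ))            # 255

# No overflow check: the largest 64-bit value plus one silently wraps
# around instead of producing an error.
echo $(( 9223372036854775807 + 1 ))

# Division by zero is trapped; the subshell keeps the error from
# affecting the rest of the script.
if ! ( : $(( 1 / 0 )) ) 2>/dev/null; then
    echo "division by zero was flagged as an error"
fi
```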

1.2.2.6. Aliases

Aliases allow a string to be substituted for a word when it is used as the first word of a simple command. The shell maintains a list of aliases that may be set and unset with the alias and unalias commands.

Bash always reads at least one complete line of input before executing any of the commands on that line. Aliases are expanded when a command is read, not when it is executed. Therefore, an alias definition appearing on the same line as another command does not take effect until the next line of input is read. The commands following the alias definition on that line are not affected by the new alias.

Aliases are expanded when a function definition is read, not when the function is executed, because a function definition is itself a compound command. As a consequence, aliases defined in a function are not available until after that function is executed.
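The same-line rule can be observed in a script. Scripts do not expand aliases unless the expand_aliases option is set, so the sketch turns it on first. The alias name greet is only an illustration.

```bash
#!/bin/bash
# Non-interactive shells do not expand aliases unless this option is set.
shopt -s expand_aliases

# This whole line is read before the alias exists, so 'greet' here is
# still an unknown command.
alias greet='echo hello'; greet 2>/dev/null || echo "greet: not an alias yet"

# This line is read after the alias was defined, so it expands.
greet    # hello
```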

We will discuss aliases in detail in Section 3.5.

1.2.2.7. Arrays

Bash provides one-dimensional array variables. Any variable may be used as an array; the declare built-in will explicitly declare an array. There is no maximum limit on the size of an array, nor any requirement that members be indexed or assigned contiguously. Arrays are zero-based. See Chapter 10.
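A short sketch of zero-based indexing and non-contiguous assignment. The array name fruits is only an illustration.

```bash
#!/bin/bash
declare -a fruits        # explicit declaration (optional)
fruits=(apple banana)    # indices 0 and 1
fruits[5]=cherry         # indices 2 to 4 are never assigned

echo "${fruits[0]}"      # apple: arrays are zero-based
echo "${#fruits[@]}"     # 3: only assigned elements are counted
echo "${!fruits[@]}"     # 0 1 5: the indices actually in use
```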

1.2.2.8. Directory stack

The directory stack is a list of recently visited directories. The pushd built-in adds directories to the stack as it changes the current directory, and the popd built-in removes directories from the stack. Called without arguments, popd removes the top entry and changes the current directory to the directory that is then on top of the stack.

Content can be displayed by issuing the dirs command or by checking the content of the DIRSTACK variable.

More information about the workings of this mechanism can be found in the Bash info pages.
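A short walk through the stack, assuming /tmp and /usr exist and neither is your home directory, which dirs would abbreviate to ~:

```bash
#!/bin/bash
cd /                      # start from a known directory
pushd /tmp > /dev/null    # stack: /tmp /
pushd /usr > /dev/null    # stack: /usr /tmp /
dirs                      # /usr /tmp /
echo "${DIRSTACK[1]}"     # /tmp (element 0 is always the current directory)
popd > /dev/null          # removes /usr and changes to /tmp
pwd                       # /tmp
```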

1.2.2.9. The prompt

Bash makes playing with the prompt even more fun. See the section Controlling the Prompt in the Bash info pages.

1.2.2.10. The restricted shell

When invoked as rbash or with the --restricted or -r option, the following happens:

The cd built-in is disabled.

Setting or unsetting SHELL, PATH, ENV or BASH_ENV is not possible.

Command names can no longer contain slashes.

Filenames containing a slash are not allowed with the . (source) built-in command.

The hash built-in does not accept slashes with the -p option.

Import of functions at startup is disabled.

SHELLOPTS is ignored at startup.

Output redirection using >, >|, <>, >&, &> and >> is disabled.

The exec built-in is disabled.

The -f and -d options are disabled for the enable built-in.

A default PATH cannot be specified with the command built-in.

Turning off restricted mode is not possible.

When a command that is found to be a shell script is executed, rbash turns off any restrictions in the shell spawned to execute the script.
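A few of these restrictions can be seen by starting a restricted shell with bash -r for a single command. A sketch: every command fails with a "restricted" message, hidden here. The file name rbash-test.txt is arbitrary and is never created.

```bash
#!/bin/bash
# Each line starts a separate restricted shell for one command.
bash -r -c 'cd /tmp' 2>/dev/null || echo "cd is disabled"
bash -r -c 'PATH=/tmp' 2>/dev/null || echo "PATH cannot be changed"
bash -r -c 'echo hi > rbash-test.txt' 2>/dev/null || echo "output redirection is disabled"
bash -r -c '/bin/echo hi' 2>/dev/null || echo "command names with slashes are rejected"
```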

More information:


Section 8.2.3: advanced redirection

1.3. Executing commands

1.3.1. General

Bash determines the type of program that is to be executed. Normal programs are system commands that exist in compiled form on your system. When such a program is executed, a new process is created because Bash makes an exact copy of itself. This child process has the same environment as its parent, only the process ID number is different. This procedure is called forking.

After the forking process, the address space of the child process is overwritten with the new process data. This is done through an exec call to the system.

The fork-and-exec mechanism thus replaces an old command with a new one, while the environment in which the new program is executed remains the same, including configuration of input and output devices, environment variables and priority. This mechanism is used to create all UNIX processes, so it also applies to the Linux operating system. Even the first process, init, with process ID 1, is forked during the boot procedure, in the so-called bootstrapping procedure.
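Process IDs make forking visible. In Bash, $$ keeps the PID of the main shell even inside subshells, while BASHPID gives the PID of the process actually running the code. The sketch below also shows that only exported variables survive the exec step. The variable color is only an illustration.

```bash
#!/bin/bash
echo "shell:    \$\$=$$ BASHPID=$BASHPID"

color=blue    # a shell variable, not exported

# A subshell is a forked copy of the shell: new PID, but it inherits
# everything, including unexported variables.
( echo "subshell: \$\$=$$ BASHPID=$BASHPID color=$color" )

# An external program: Bash forks a child, which calls exec to replace
# itself with the new program. Only the exported environment is passed on.
bash -c 'echo "program:  PID=$$ color=${color:-<not set>}"'
export color
bash -c 'echo "program:  PID=$$ color=${color:-<not set>}"'
```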

1.3.2. Shell built-in commands

Built-in commands are contained within the shell itself. When the name of a built-in command is used as the first word of a simple command, the shell executes the command directly, without creating a new process. Built-in commands are necessary to implement functionality impossible or inconvenient to obtain with separate utilities.

Bash supports 3 types of built-in commands:

Bourne Shell built-ins:

:, ., break, cd, continue, eval, exec, exit, export, getopts, hash, pwd, readonly, return, set, shift, test, [, times, trap, umask and unset.

Bash built-in commands:

alias, bind, builtin, command, declare, echo, enable, help, let, local, logout, printf, read, shopt, type, typeset, ulimit and unalias.

Special built-in commands:

When Bash is executing in POSIX mode, the special built-ins differ from other built-in commands in three respects:

Special built-ins are found before shell functions during command lookup.

If a special built-in returns an error status, a non-interactive shell exits.

Assignment statements preceding the command stay in effect in the shell environment after the command completes.

The POSIX special built-ins are :, ., break, continue, eval, exec, exit, export, readonly, return, set, shift, trap and unset.

Most of these built-ins will be discussed in the next chapters. For those commands for which this is not the case, we refer to the Info pages.
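The type built-in tells you how Bash classifies a name, and enable -s lists the POSIX special built-ins. The last two lines sketch the third special-built-in rule: an assignment placed before the special built-in ":" persists in POSIX mode. In default mode, such an assignment normally lasts only for that command. The variable x and the choice of names are only illustrations. The ls line assumes ls is installed in PATH.

```bash
#!/bin/bash
# type -t prints builtin, file, keyword, alias or function.
for name in cd echo test ls if; do
    printf '%-5s %s\n' "$name" "$(type -t "$name")"
done

# List the POSIX special built-ins known to this Bash.
enable -s

# POSIX mode: the assignment before ':' stays in effect afterwards.
bash --posix -c 'x=5 :; echo "${x:-unset}"'
# Default mode: the assignment applies only to the ':' command.
bash -c 'x=5 :; echo "${x:-unset}"'
```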

1.3.3. Executing programs from a script

When the program being executed is a shell script, bash will create a new bash process using a fork. This subshell reads the lines from the shell script one line at a time. Commands on each line are read, interpreted and executed as if they had come directly from the keyboard.

While the subshell processes each line of the script, the parent shell waits for its child process to finish. When there are no more lines in the shell script to read, the subshell terminates. The parent shell then wakes up and displays a new prompt.
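Because the script runs in a child process, variables it sets disappear when it exits. Reading the same file with the . (source) built-in runs it in the current shell instead. A sketch, assuming a throwaway script created with mktemp.

```bash
#!/bin/bash
script=$(mktemp)                 # throwaway script, name chosen by mktemp
echo 'color=blue' > "$script"

color=red
bash "$script"                   # runs in a separate bash process
echo "after running:  $color"    # red: the child's assignment was lost

. "$script"                      # the . built-in reads it in the current shell
echo "after sourcing: $color"    # blue
rm -f "$script"
```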

1.4. Building blocks

1.4.1. Shell building blocks

1.4.1.1. Shell syntax

If input is not commented, the shell reads it and divides it into words and operators, employing quoting rules to define the meaning of each character of input. Then these words and operators are translated into commands and other constructs, which return an exit status available for inspection or processing. The above fork-and-exec scheme is only applied after the shell has analyzed input in the following way:

The shell reads its input from a file, from a string or from the user's terminal.

Input is broken up into words and operators, obeying the quoting rules, see Chapter 3. These tokens are separated by metacharacters. Alias expansion is performed.

The shell parses (analyzes and substitutes) the tokens into simple and compound commands.

Bash performs various shell expansions, breaking the expanded tokens into lists of filenames and commands and arguments.

Redirection is performed if necessary; redirection operators and their operands are removed from the argument list.

Commands are executed.

Optionally the shell waits for the command to complete and collects its exit status.

1.4.1.2. Shell commands

A simple shell command such as touch file1 file2 file3 consists of the command itself followed by arguments, separated by spaces.

More complex shell commands are composed of simple commands arranged together in a variety of ways: in a pipeline in which the output of one command becomes the input of a second, in a loop or conditional construct, or in some other grouping. A couple of examples:

ls | more

gunzip -c file.tar.gz | tar xvf -
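The other groupings mentioned above look like this in practice. A sketch: the file names and words are placeholders, and the wc output may carry leading spaces on non-GNU systems.

```bash
#!/bin/bash
# Pipeline: the output of printf becomes the input of sort.
printf '%s\n' banana apple cherry | sort    # apple, banana, cherry

# Loop: the same simple command, run once per argument.
for f in file1 file2 file3; do
    echo "processing $f"
done

# Conditional: grep's exit status decides whether echo runs.
if grep -q apple <<< "apple pie"; then
    echo "found apple"
fi

# Grouping: both commands share one output stream, counted by wc.
{ echo one; echo two; } | wc -l             # 2
```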

1.4.1.3. Shell functions

Shell functions are a way to group commands for later execution using a single name for the group. They are executed just like a "regular" command. When the name of a shell function is used as a simple command name, the list of commands associated with that function name is executed.

Shell functions are executed in the current shell context; no new process is created to interpret them.

Functions are explained in Chapter 11.
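No new process is created, so a function can change variables in the shell that calls it. A command run in a subshell cannot do that. set_greeting is a hypothetical example function:

```bash
#!/bin/bash
# set_greeting is a hypothetical function used only for illustration.
set_greeting() {
    GREETING="Hello, $1"        # no "local": this changes the calling shell's variable
}

set_greeting world
echo "$GREETING"                # prints: Hello, world

# Contrast: an assignment made in a subshell ( ... ) is lost when it exits
( GREETING="changed in a subshell" )
echo "$GREETING"                # still prints: Hello, world
```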

1.4.1.4. Shell parameters

A parameter is an entity that stores values. It can be a name, a number or a special value. For the shell's purpose, a variable is a parameter denoted by a name. A variable has a value and zero or more attributes. Variables can be created by simple assignment (name=value) or with the declare shell built-in command, which is also used to assign attributes.

If no value is given, a variable is assigned the null string. Variables can only be removed with the unset built-in.

Assigning variables is discussed in Section 3.2, advanced use of variables in Chapter 10.
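A few assignments illustrate values, attributes and removal. The variable names are arbitrary examples:

```bash
#!/bin/bash
EDITOR_NAME=vim                 # plain assignment, no attributes
EMPTY=                          # no value given: EMPTY holds the null string
declare -i COUNT=5              # -i: integer attribute
COUNT=COUNT+1                   # evaluated arithmetically because of -i: COUNT is now 6
declare -r LIMIT=10             # -r: read-only, later assignments fail

echo "$EDITOR_NAME ${#EMPTY} $COUNT $LIMIT"    # prints: vim 0 6 10

unset EDITOR_NAME               # remove the variable
echo "${EDITOR_NAME-unset}"     # prints: unset
```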

1.4.1.5. Shell expansions

Shell expansion is performed after each command line has been split into tokens. These are the expansions performed:

Brace expansion

Tilde expansion

Parameter and variable expansion

Command substitution

Arithmetic expansion

Word splitting

Filename expansion

We'll discuss these expansion types in detail in Section 3.4.
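Here is one command per expansion type, in the order listed above. The output of the tilde, variable, command substitution and filename examples depends on your system:

```bash
#!/bin/bash
echo file{1,2,3}.txt            # brace expansion: file1.txt file2.txt file3.txt
echo ~                          # tilde expansion: your home directory
echo "$USER"                    # parameter and variable expansion
echo "$(uname -s)"              # command substitution
echo $(( 6 * 7 ))               # arithmetic expansion: 42
words="one two"
printf '[%s]\n' $words          # word splitting: [one] and [two] on separate lines
echo /etc/*.conf                # filename expansion: matching files in /etc
```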

1.4.1.6. Redirections

Before a command is executed, its input and output may be redirected using a special notation interpreted by the shell. Redirection may also be used to open and close files for the current shell execution environment.
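The sketch below shows both uses: redirecting the input and output of single commands, and using exec to open and close a file descriptor in the current shell. The temporary file from mktemp exists only for the demonstration:

```bash
#!/bin/bash
logfile=$(mktemp)                       # scratch file for this demo

echo "first line" > "$logfile"          # stdout of echo goes to the file (truncating it)
echo "second line" >> "$logfile"        # >> appends instead
ls /nonexistent 2>> "$logfile"          # file descriptor 2 (stderr) appended too; ls fails on purpose

exec 3< "$logfile"                      # open descriptor 3 for reading in the current shell
read -r first <&3                       # read one line from descriptor 3
exec 3<&-                               # close descriptor 3 again

echo "$first"                           # prints: first line
wc -l < "$logfile"                      # stdin of wc comes from the file
rm -f "$logfile"
```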

1.4.1.7. Executing commands

When executing a simple command, the words that the parser has marked as variable assignments (preceding the command name) and redirections are saved for later reference. Words that are not variable assignments or redirections are expanded; the first remaining word after expansion is taken to be the name of the command and the rest are arguments to that command. Then redirections are performed, then the strings assigned to variables are expanded. If no command name results, the variable assignments affect the current shell environment; otherwise, they are added to the environment of the executed command and do not affect the current shell.
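Both cases are shown in three lines. COLOUR is just an example name:

```bash
#!/bin/bash
# An assignment in front of a command name only reaches that command's
# environment; an assignment without a command changes the current shell.
COLOUR=black                                        # no command name: current shell
COLOUR=white bash -c 'echo "child sees: $COLOUR"'   # prints: child sees: white
echo "current shell still has: $COLOUR"             # prints: current shell still has: black
```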

An important part of the tasks of the shell is to search for commands. Bash does this as follows:

Check whether the command contains slashes. If not, first check with the function list to see if it contains a command by the name we are looking for.

If command is not a function, check for it in the built-in list.

If the command is neither a function nor a built-in, search the directories listed in PATH. Bash uses a hash table (data storage area in memory) to remember the full path names of executables so extensive PATH searches can be avoided.

If the search is unsuccessful, bash prints an error message and returns an exit status of 127.

If the search was successful or if the command contains slashes, the shell executes the command in a separate execution environment.

If execution fails because the file is not in executable format and is not a directory, it is assumed to be a shell script.

If the command was not started asynchronously (in the background), the shell waits for the command to complete and collects its exit status.
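You can watch the search order in action with the type built-in. Its -t option prints function, builtin, file, alias or keyword. The ls function and the name no_such_command_here are made up for the demonstration:

```bash
#!/bin/bash
type -t cd                      # builtin
type -t ls                      # file (an interactive shell may report an alias instead)

ls() { command ls -F "$@"; }    # a function now takes precedence over the ls program
type -t ls                      # function
unset -f ls                     # remove the function again

no_such_command_here 2>/dev/null    # the search fails
echo "exit status: $?"              # prints: exit status: 127
```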

1.4.1.8. Shell scripts

When a file containing shell commands is used as the first non-option argument when invoking Bash (without -c or -s), this will create a non-interactive shell. This shell first searches for the script file in the current directory, then looks in PATH if the file cannot be found there.

1.5. Developing good scripts

1.5.1. Properties of good scripts

This guide is mainly about the last shell building block, scripts. Some general considerations before we continue:

A script should run without errors.

It should perform the task for which it is intended.

Program logic is clearly defined and apparent.

A script does not do unnecessary work.

Scripts should be reusable.

1.5.2. Structure

The structure of a shell script is very flexible. Even though in Bash a lot of freedom is granted, you must ensure correct logic, flow control and efficiency so that users executing the script can do so easily and correctly.

When starting on a new script, ask yourself the following questions:

Will I need any information from the user or from the user's environment?

How will I store that information?

Are there any files that need to be created? Where and with which permissions and ownerships?

What commands will I use? When using the script on different systems, do all these systems have these commands in the required versions?

Does the user need any notifications? When and why?

1.5.3. Terminology

The table below gives an overview of programming terms that you need to be familiar with:

Table 1-1. Overview of programming terms

| Term | What is it? |

|---|---|

| Command control | Testing exit status of a command in order to determine whether a portion of the program should be executed. |

| Conditional branch | Logical point in the program when a condition determines what happens next. |

| Logic flow | The overall design of the program. Determines logical sequence of tasks so that the result is successful and controlled. |

| Loop | Part of the program that is performed zero or more times. |

| User input | Information provided by an external source while the program is running, can be stored and recalled when needed. |
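The terms in the table map directly onto Bash constructs. A minimal sketch, where check_files is a hypothetical function:

```bash
#!/bin/bash
check_files() {
    local f status=0
    # Loop: the body runs once per argument, or zero times if there are none
    for f in "$@"; do                  # user input: the arguments given at run time
        # Command control: the exit status of [ (test) selects the branch
        if [ -r "$f" ]; then           # conditional branch
            echo "$f: readable"
        else
            echo "$f: not readable"
            status=1
        fi
    done
    return "$status"
}
```

For instance, check_files /etc/passwd /etc/shadow reports on both files and returns a non-zero status if either cannot be read.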

1.5.4. A word on order and logic

In order to speed up the development process, the logical order of a program should be thought over in advance. This is your first step when developing a script.

A number of methods can be used; one of the most common is working with lists. Itemizing the list of tasks involved in a program allows you to describe each process. Individual tasks can be referenced by their item number.

Using your own spoken language to pin down the tasks to be executed by your program will help you to create an understandable form of your program. Later, you can replace the everyday language statements with shell language words and constructs.

The example below shows such a logic flow design. It describes the rotation of log files. This example shows a possible repetitive loop, controlled by the number of base log files you want to rotate:

1. Do you want to rotate logs?
   - If yes:
     - Enter directory name containing the logs to be rotated.
     - Enter base name of the log file.
     - Enter number of days logs should be kept.
     - Make settings permanent in user's crontab file.
   - If no, go to step 3.
2. Do you want to rotate another set of logs?
   - If yes: repeat step 1.
   - If no: go to step 3.
3. Exit

The user should provide information for the program to do something. Input from the user must be obtained and stored. The user should be notified that his crontab will change.
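The next step is to replace the everyday language with shell constructs. Below is one possible translation of the design above, as a hypothetical sketch. rotate_dialog is a made-up name, the answers are read from standard input, and instead of editing the crontab it only prints the line it would add. The find command with -mtime and -delete in that line is an assumption about how the rotation could be done; -delete exists in GNU and BSD find but is not required by POSIX.

```bash
#!/bin/bash
rotate_dialog() {
    local answer logdir base days
    # Steps 1 and 2: keep asking as long as the user wants to rotate a set of logs
    while read -r -p "Rotate a set of logs? (y/n) " answer && [ "$answer" = y ]; do
        read -r -p "Directory containing the logs: " logdir
        read -r -p "Base name of the log file: " base
        read -r -p "Number of days logs should be kept: " days
        # Notify the user instead of silently changing the crontab (assumed command)
        echo "Would add to crontab: 0 3 * * * find $logdir -name '$base*' -mtime +$days -delete"
    done
    # Step 3
    echo "Exit"
}
```

Run rotate_dialog from a terminal to answer the questions interactively. The read prompts are only shown when input comes from a terminal.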

1.5.5. An example Bash script: mysystem.sh

The mysystem.sh script below executes some well-known commands (date, w, uname, uptime) to display information about you and your machine.

tom:~> cat -n mysystem.sh

1 #!/bin/bash

2 clear

3 echo "This is information provided by mysystem.sh. Program starts now."

4

5 echo "Hello, $USER"

6 echo

7

8 echo "Today's date is `date`, this is week `date +"%V"`."

9 echo

10

11 echo "These users are currently connected:"

12 w | cut -d " " -f 1 - | grep -v USER | sort -u

13 echo

14

15 echo "This is `uname -s` running on a `uname -m` processor."

16 echo

17

18 echo "This is the uptime information:"

19 uptime

20 echo

21

22 echo "That's all folks!"


A script should start with the same two characters, "#!", followed by the path to the shell that will execute the commands on the following lines. This script starts with clearing the screen on line 2. Line 3 makes it print a message, informing the user about what is going to happen. Line 5 greets the user. Lines 6, 9, 13, 16 and 20 are only there for orderly output display purposes. Line 8 prints the current date and the number of the week. Line 11 is again an informative message, like lines 3, 18 and 22. Line 12 formats the output of the w command; line 15 shows operating system and CPU information. Line 19 gives the uptime and load information.

Both echo and printf are Bash built-in commands. The first exits with a 0 status unless a write error occurs, and simply prints its arguments followed by an end-of-line character on the standard output. The latter allows for the definition of a formatting string and gives a non-zero exit status code upon failure.

This is the same script using the printf built-in:

tom:~> cat mysystem.sh
#!/bin/bash
clear
printf "This is information provided by mysystem.sh. Program starts now.\n"

printf "Hello, %s.\n\n" "$USER"

printf "Today's date is %s, this is week %s.\n\n" "$(date)" "$(date +"%V")"

printf "These users are currently connected:\n"
w | cut -d " " -f 1 - | grep -v USER | sort -u
printf "\n"

printf "This is %s running on a %s processor.\n\n" "$(uname -s)" "$(uname -m)"

printf "This is the uptime information:\n"
uptime
printf "\n"

printf "That's all folks!\n"
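The difference between the two built-ins shows up in a few lines. This sketch uses only standard echo and printf behavior; the field widths and the sample names file1 and file2 are arbitrary:

```bash
#!/bin/bash
# echo joins its arguments with spaces and adds a newline.
echo "Hello," "$USER"

# printf applies the format string to the arguments, reusing it while
# arguments remain: one aligned line per name/size pair.
printf '%-8s|%5s\n' name size file1 42 file2 7

# A conversion that fails makes printf report an error (discarded here)
# and return a non-zero exit status.
printf '%d\n' notanumber 2>/dev/null
echo "printf exit status: $?"
```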

Creating user friendly scripts by means of inserting messages is treated in Chapter 8.

| Standard location of the Bourne Again shell |

|---|

| The first line of the script, #!/bin/bash, implies that the bash program is installed in /bin. |

| If stdout is not available |

|---|

| If you execute a script from cron, supply full path names and redirect output and errors. The shell runs in non-interactive mode without a terminal, so there is no screen to display output or error messages, and cron usually runs jobs with a minimal PATH. If you don't think about this, the script may fail without you noticing. |

The following chapters will discuss the details of the above scripts.

1.5.6. Example init script

An init script starts system services on UNIX and Linux machines. The system log daemon, the power management daemon, and the name and mail daemons are common examples. These scripts, also known as startup scripts, are stored in a specific location on your system, such as /etc/rc.d/init.d or /etc/init.d. Init, the initial process, reads its configuration files and decides which services to start or stop in each run level. A run level is a configuration of processes. Each system has, for instance, a single-user run level for administrative tasks that require the system to be as idle as possible, such as recovering a critical file system from a backup. Reboot and shutdown run levels are usually also configured.

The tasks to be executed upon starting a service or stopping it are listed in the startup scripts. It is one of the system administrator's tasks to configure init, so that services are started and stopped at the correct moment. When confronted with this task, you need a good understanding of the startup and shutdown procedures on your system. We therefore advise that you read the man pages for init and inittab before starting on your own initialization scripts.

Here is a very simple example that plays a sound when your machine starts and stops:

#!/bin/bash

# This script is for /etc/rc.d/init.d
# Link in rc3.d/S99audio-greeting and rc0.d/K01audio-greeting

case "$1" in
'start')
  cat /usr/share/audio/at_your_service.au > /dev/audio
  ;;
'stop')
  cat /usr/share/audio/oh_no_not_again.au > /dev/audio
  ;;
esac

exit 0

The case statement often used in this kind of script is described in Section 7.3.
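Many init scripts extend this case pattern with extra actions and a usage message for unknown arguments. Here is a minimal sketch of that structure. service_ctl and the echo commands are placeholders for the real start and stop work:

```bash
#!/bin/bash
service_ctl() {
    case "$1" in
        start)
            echo "starting" ;;
        stop)
            echo "stopping" ;;
        restart)
            service_ctl stop && service_ctl start ;;
        *)
            echo "Usage: service_ctl {start|stop|restart}" >&2
            return 1 ;;
    esac
}

service_ctl restart     # prints "stopping", then "starting"
```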

1.6. Summary

Bash is the GNU shell, compatible with the Bourne shell and incorporating many useful features from other shells. When the shell is started, it reads its configuration files. The most important are:

/etc/profile

~/.bash_profile

~/.bashrc

Bash behaves differently in interactive mode, and it also has a POSIX-compliant mode and a restricted mode.

Shell commands can be split up into three groups: shell functions, shell built-ins and executable programs stored in a directory on your system. Bash supports additional built-ins not found in the plain Bourne shell.

Shell scripts consist of these commands arranged as shell syntax dictates. Scripts are read and executed line by line and should have a logical structure.

1.7. Exercises

These are some exercises to warm you up for the next chapter:

Where is the bash program located on your system?

Use the --version option to find out which version you are running.

Which shell configuration files are read when you log in to your system using the graphical user interface and then open a terminal window?

Are the following shells interactive shells? Are they login shells?

A shell opened by clicking on the background of your graphical desktop, selecting "Terminal" or such from a menu.

A shell that you get after issuing the command ssh localhost.

A shell that you get when logging in to the console in text mode.

A shell obtained by the command xterm &.

A shell opened by the mysystem.sh script.

A shell that you get on a remote host, for which you didn't have to give the login and/or password because you use SSH and maybe SSH keys.

Can you explain why bash does not exit when you type Ctrl+C on the command line?

Display directory stack content.

If it is not yet the case, set your prompt so that it displays your location in the file system hierarchy, for instance add this line to ~/.bashrc:

export PS1="\u@\h \w> "

Display hashed commands for your current shell session.

How many processes are currently running on your system? Use ps and wc, the first line of output of ps is not a process!

How to display the system hostname? Only the name, nothing more!

Chapter 2. Writing and debugging scripts

2.1. Creating and running a script

2.1.1. Writing and naming

A shell script is a sequence of commands for which you have a repeated use. This sequence is typically executed by entering the name of the script on the command line. Alternatively, you can use scripts to automate tasks using the cron facility. Another use for scripts is in the UNIX boot and shutdown procedure, where the operation of daemons and services is defined in init scripts.

To create a shell script, open a new empty file in your editor. Any text editor will do: vim, emacs, gedit, dtpad et cetera are all valid. You might want to choose a more advanced editor like vim or emacs, however, because these can be configured to recognize shell and Bash syntax and can be a great help in preventing errors that beginners frequently make, such as forgetting brackets and semicolons.

| Syntax highlighting in vim |

|---|

| In order to activate syntax highlighting in vim, use the command :syntax enable (or the abbreviations :sy enable or :syn enable). You can add this setting to your .vimrc file to make it permanent. |

Put UNIX commands in the new empty file, like you would enter them on the command line. As discussed in the previous chapter (see Section 1.3), commands can be shell functions, shell built-ins, UNIX commands and other scripts.

Give your script a sensible name that gives a hint about what the script does. Make sure that your script name does not conflict with existing commands. In order to ensure that no confusion can arise, script names often end in .sh; even so, there might be other scripts on your system with the same name as the one you chose. Check using which, whereis and other commands for finding information about programs and files:

which -a script_name

whereis script_name

locate script_name

2.1.2. script1.sh

In this example we use the echo Bash built-in to inform the user about what is going to happen, before the task that will create the output is executed. It is strongly advised to inform users about what a script is doing, in order to prevent them from becoming nervous because the script is not doing anything. We will return to the subject of notifying users in Chapter 8.

Write this script for yourself as well. It might be a good idea to create a directory ~/scripts to hold your scripts. Add the directory to the contents of the PATH variable:

export PATH="$PATH:$HOME/scripts"
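A tilde inside double quotes is not expanded, so writing ~/scripts between double quotes stores those characters literally. $HOME is expanded inside double quotes, which avoids the problem. A short sketch:

```bash
#!/bin/bash
quoted="~/scripts"              # tilde is not expanded inside double quotes
expanded="$HOME/scripts"        # $HOME is expanded inside double quotes
echo "$quoted"                  # prints: ~/scripts
echo "$expanded"                # prints your home directory followed by /scripts
export PATH="$PATH:$HOME/scripts"
```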

If you are just getting started with Bash, use a text editor that uses different colours for different shell constructs. Syntax highlighting is supported by vim, gvim, (x)emacs, kwrite and many other editors; check the documentation of your favorite editor.

| Different prompts |

|---|

| The prompts throughout this course vary depending on the author's mood. This resembles real-life situations much more than the standard educational $ prompt. The only convention we stick to is that the root prompt ends in a hash mark (#). |

2.1.3. Executing the script

The script should have execute permissions for the correct owners in order to be runnable. When setting permissions, check that you really obtained the permissions that you want. When this is done, the script can run like any other command:

willy:~/scripts> chmod u+x script1.sh
willy:~/scripts> ls -l script1.sh
-rwxrw-r--    1 willy  willy   456 Dec 24 17:11 script1.sh
willy:~> script1.sh
The script starts now.
Hi, willy!

I will now fetch you a list of connected users:

  3:38pm  up 18 days,  5:37,  4 users,  load average: 0.12, 0.22, 0.15
USER     TTY      FROM              LOGIN@   IDLE   JCPU   PCPU  WHAT
root     tty2     -                Sat  2pm  4:25m  0.24s  0.05s  -bash
willy    :0       -                Sat  2pm   ?     0.00s   ?     -
willy    pts/3    -                Sat  2pm  3:33m 36.39s 36.39s  BitchX willy ir
willy    pts/2    -                Sat  2pm  3:33m  0.13s  0.06s  /usr/bin/screen

I'm setting two variables now.
This is a string: black
And this is a number: 9

I'm giving you back your prompt now.

willy:~/scripts> echo $COLOUR

willy:~/scripts> echo $VALUE

willy:~/scripts>

This is the most common way to execute a script, and running it in a subshell like this is preferred. The variables, functions and aliases created in this subshell are only known to the particular bash session of that subshell. When that shell exits and the parent regains control, everything is cleaned up and all changes the script made to the state of the shell are forgotten.

If you did not put the scripts directory in your PATH, and . (the current directory) is not in the PATH either, you can activate the script like this:

./script_name.sh

A script can also explicitly be executed by a given shell, but generally we only do this if we want to obtain special behavior, such as checking if the script works with another shell or printing traces for debugging:

rbash script_name.sh

sh script_name.sh

bash -x script_name.sh

The specified shell will start as a subshell of your current shell and execute the script. This is done when you want the script to start up with specific options or under specific conditions which are not specified in the script.

If you don't want to start a new shell but execute the script in the current shell, you source it:

source script_name.sh

| source = . |

|---|

| The Bash source built-in is a synonym for the Bourne shell . (dot) command. |

The script does not need execute permission in this case. Commands are executed in the current shell context, so any changes made to your environment will be visible when the script finishes execution:

willy:~/scripts> source script1.sh
--output omitted--
willy:~/scripts> echo $VALUE
9
willy:~/scripts>
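A throwaway script that only sets VALUE reproduces the difference between the two ways of running a script. The temporary file is created with mktemp for the demonstration. Neither method needs execute permission on it:

```bash
#!/bin/bash
script=$(mktemp)                     # throwaway script for this demo
echo 'VALUE="9"' > "$script"

unset VALUE
bash "$script"                       # runs in a child shell
echo "after bash: '${VALUE}'"        # prints: after bash: ''
source "$script"                     # runs in the current shell
echo "after source: '${VALUE}'"      # prints: after source: '9'
rm -f "$script"
```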

2.2. Script basics

2.2.1. Which shell will run the script?

When running a script in a subshell, you should define which shell should run the script. The shell type in which you wrote the script might not be the default on your system, so commands you entered might result in errors when executed by the wrong shell.

The first line of the script determines the shell to start. The first two characters of the first line should be #!, then follows the path to the shell that should interpret the commands that follow. Blank lines are also considered to be lines, so don't start your script with an empty line.

For the purpose of this course, all scripts will start with the line

#!/bin/bash

As noted before, this implies that the Bash executable can be found in /bin.

2.2.2. Adding comments

You should be aware of the fact that you might not be the only person reading your code. A lot of users and system administrators run scripts that were written by other people. If they want to see how you did it, comments are useful to enlighten the reader.

Comments also make your own life easier. Say that you had to read a lot of man pages in order to achieve a particular result with some command that you used in your script. You won't remember how it worked if you need to change your script after a few weeks or months, unless you have commented what you did, how you did it and/or why you did it.

Take the script1.sh example and copy it to commented-script1.sh, which we edit so that the comments reflect what the script does. Everything after an unquoted hash mark that starts a word is ignored by the shell, so comments are only visible when you open the shell script file:

#!/bin/bash
# This script clears the terminal, displays a greeting and gives information
# about currently connected users.  The two example variables are set and displayed.

clear                              # clear terminal window

echo "The script starts now."

echo "Hi, $USER!"                  # dollar sign is used to get content of variable
echo

echo "I will now fetch you a list of connected users:"
echo
w                                  # show who is logged on and
echo                               # what they are doing

echo "I'm setting two variables now."
COLOUR="black"                     # set a local shell variable
VALUE="9"                          # set a local shell variable
echo "This is a string: $COLOUR"   # display content of variable
echo "And this is a number: $VALUE"  # display content of variable
echo

echo "I'm giving you back your prompt now."
echo

In a decent script, the first lines are usually comments about what to expect. Then each big chunk of commands will be commented as needed for clarity's sake. Linux init scripts, as an example, in your system's init.d directory, are usually well commented since they have to be readable and editable by everyone running Linux.

2.3. Debugging Bash scripts

2.3.1. Debugging on the entire script

When things don't go according to plan, you need to determine what exactly causes the script to fail. Bash provides extensive debugging features. The most common is to start up the subshell with the -x option, which will run the entire script in debug mode. Traces of each command plus its arguments are printed to standard error after the commands have been expanded but before they are executed.

This is the commented-script1.sh script run in debug mode. Note again that the added comments are not visible in the output of the script.

willy:~/scripts> bash -x script1.sh
+ clear

+ echo 'The script starts now.'
The script starts now.
+ echo 'Hi, willy!'
Hi, willy!
+ echo

+ echo 'I will now fetch you a list of connected users:'
I will now fetch you a list of connected users:
+ echo

+ w
  4:50pm  up 18 days,  6:49,  4 users,  load average: 0.58, 0.62, 0.40
USER     TTY      FROM              LOGIN@   IDLE   JCPU   PCPU  WHAT
root     tty2     -                Sat  2pm  5:36m  0.24s  0.05s  -bash
willy    :0       -                Sat  2pm   ?     0.00s   ?     -
willy    pts/3    -                Sat  2pm 43:13  36.82s 36.82s  BitchX willy ir
willy    pts/2    -                Sat  2pm 43:13   0.13s  0.06s  /usr/bin/screen
+ echo

+ echo 'I'\''m setting two variables now.'
I'm setting two variables now.
+ COLOUR=black
+ VALUE=9
+ echo 'This is a string: '
This is a string:
+ echo 'And this is a number: '
And this is a number:
+ echo

+ echo 'I'\''m giving you back your prompt now.'
I'm giving you back your prompt now.
+ echo
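The traces go to standard error rather than standard output, so they can be redirected separately. In this minimal sketch, an inline bash -c command stands in for script1.sh so that it runs anywhere:

```bash
#!/bin/bash
# Trace discarded, normal output kept
output=$(bash -x -c 'echo "Hi there"' 2>/dev/null)
# stderr goes into the capture first, then stdout is sent to /dev/null:
# only the trace is kept
trace=$(bash -x -c 'echo "Hi there"' 2>&1 >/dev/null)

echo "output: $output"       # prints: output: Hi there
echo "trace:  $trace"        # prints: trace:  + echo 'Hi there'
```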

There is now a full-fledged debugger for Bash, available at SourceForge. These debugging features are available in most modern versions of Bash, starting from 3.x.

2.3.2. Debugging on part(s) of the script

Using the set Bash built-in, you can run in normal mode the portions of the script you are sure are without fault, and display debugging information only for troublesome zones. Say we are not sure what the w command will do in the example commented-script1.sh; then we could enclose it in the script like this:

set -x                  # activate debugging from here
w
set +x                  # stop debugging from here

Output then looks like this:

willy: ~/scripts> script1.sh
The script starts now.
Hi, willy!

I will now fetch you a list of connected users:

+ w
  5:00pm  up 18 days,  7:00,  4 users,  load average: 0.79, 0.39, 0.33
USER     TTY      FROM              LOGIN@   IDLE   JCPU   PCPU  WHAT
root     tty2     -                Sat  2pm  5:47m  0.24s  0.05s  -bash
willy    :0       -                Sat  2pm   ?     0.00s   ?     -
willy    pts/3    -                Sat  2pm 54:02  36.88s 36.88s  BitchX willyke
willy    pts/2    -                Sat  2pm 54:02   0.13s  0.06s  /usr/bin/screen
+ set +x

I'm setting two variables now.
This is a string:
And this is a number:

I'm giving you back your prompt now.

willy: ~/scripts>

You can switch debugging mode on and off as many times as you want within the same script.

The table below gives an overview of other useful Bash options:

Table 2-1. Overview of set debugging options

| Short notation | Long notation | Result |

|---|---|---|

| set -f | set -o noglob | Disable file name generation using metacharacters (globbing). |

| set -v | set -o verbose | Prints shell input lines as they are read. |

| set -x | set -o xtrace | Print command traces before executing command. |

The dash is used to activate a shell option and a plus to deactivate it. Don't let this confuse you!

In the example below, we demonstrate these options on the command line:

willy:~/scripts> set -v
willy:~/scripts> ls
ls
commented-scripts.sh  script1.sh
willy:~/scripts> set +v
set +v
willy:~/scripts> ls *
commented-scripts.sh  script1.sh
willy:~/scripts> set -f
willy:~/scripts> ls *
ls: *: No such file or directory
willy:~/scripts> touch *
willy:~/scripts> ls
*  commented-scripts.sh  script1.sh
willy:~/scripts> rm *
willy:~/scripts> ls
commented-scripts.sh  script1.sh
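Inside a script, you can check which single-letter options are active through the special parameter $-. In this sketch glob_demo is a hypothetical function. Its body runs in a subshell ( ... ), so the option changes do not leak into the calling shell:

```bash
#!/bin/bash
glob_demo() (
    set -f                      # same as: set -o noglob
    echo *                      # no filename expansion: prints a literal *
    case $- in                  # $- holds the single-letter options currently set
        *f*) echo "noglob is on" ;;
    esac
    set +f                      # same as: set +o noglob
    case $- in
        *f*) echo "noglob is still on" ;;
        *)   echo "noglob is off" ;;
    esac
)

glob_demo
```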

Alternatively, these modes can be specified in the script itself, by adding the desired options to the first line shell declaration. Options can be combined, as is usually the case with UNIX commands:

#!/bin/bash -xv

Once you have found the buggy part of your script, you can add echo statements before each command of which you are unsure, so that you will see exactly where and why things don't work. In the example commented-script1.sh script, it could be done like this, still assuming that the displaying of users gives us problems:

echo "debug message: now attempting to start w command"; w

In more advanced scripts, the echo can be inserted to display the content of variables at different stages in the script, so that flaws can be detected:

echo "Variable VARNAME is now set to $VARNAME."
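Instead of adding and removing echo statements by hand, a script can route them through a small function that only prints when a variable is set. The function name debug and the variable DEBUG are hypothetical choices, not Bash features:

```bash
#!/bin/bash
# Print a debug message on standard error, but only when DEBUG is non-empty.
debug() {
    if [ -n "$DEBUG" ]; then
        echo "debug: $*" >&2
    fi
}

VARNAME="some value"
debug "Variable VARNAME is now set to $VARNAME."   # silent unless DEBUG is set
DEBUG=1 debug "now attempting to start w command"  # printed on standard error
```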

2.4. Summary

A shell script is a reusable series of commands put in an executable text file. Any text editor can be used to write scripts.

Scripts start with #! followed by the path to the shell executing the commands from the script. Comments are added to a script for your own future reference, and also to make it understandable for other users. It is better to have too many explanations than not enough.

Debugging a script can be done using shell options. Shell options can be used for partial debugging or for analyzing the entire script. Inserting echo commands at strategic locations is also a common troubleshooting technique.

2.5. Exercises

This exercise will help you to create your first script.

Write a script using your favorite editor. The script should display the path to your home directory and the terminal type that you are using. Additionally, it should show all the services started up in runlevel 3 on your system. (Hint: use HOME, TERM and ls /etc/rc3.d/S*.)

Add comments in your script.

Add information for the users of your script.

Change permissions on your script so that you can run it.

Run the script in normal mode and in debug mode. It should run without errors.

Make errors in your script: see what happens if you misspell commands, if you leave out the first line or put something unintelligible there, or if you misspell shell variable names or write them in lower case characters after they have been declared in capitals. Check what the debug comments say about this.

Chapter 3. The Bash environment

3.1. Shell initialization files

3.1.1. System-wide configuration files

3.1.1.1. /etc/profile

When Bash is invoked as an interactive login shell, or as a non-interactive shell with the --login option, it reads the /etc/profile instructions; this also holds when it is invoked under the name sh. These instructions usually set the shell variables PATH, USER, MAIL, HOSTNAME and HISTSIZE.
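You can check whether the current shell is a login shell, and therefore normally reads /etc/profile at startup, with the read-only login_shell option of the shopt built-in:

```bash
#!/bin/bash
# shopt -q sets only the exit status: 0 if the option is on.
if shopt -q login_shell; then
    echo "login shell"
else
    echo "non-login shell"      # e.g. a script or a terminal window opened in X
fi
```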

On some systems, the umask value is configured in /etc/profile; on other systems this file holds pointers to other configuration files such as:

/etc/inputrc, the system-wide Readline initialization file where you can configure the command line bell-style.

the /etc/profile.d directory, which contains files configuring system-wide behavior of specific programs.

All settings that you want to apply to all your users' environments should be in this file. It might look like this:

# /etc/profile

# System wide environment and startup programs, for login setup

PATH=$PATH:/usr/X11R6/bin

# No core files by default

ulimit -S -c 0 > /dev/null 2>&1

USER="`id -un`"

LOGNAME=$USER

MAIL="/var/spool/mail/$USER"

HOSTNAME=`/bin/hostname`

HISTSIZE=1000

# Keyboard, bell, display style: the readline config file:

if [ -z "$INPUTRC" -a ! -f "$HOME/.inputrc" ]; then

INPUTRC=/etc/inputrc

fi

PS1="\u@\h \W"

export PATH USER LOGNAME MAIL HOSTNAME HISTSIZE INPUTRC PS1

# Source initialization files for specific programs (ls, vim, less, ...)

for i in /etc/profile.d/*.sh ; do

if [ -r "$i" ]; then

. "$i"

fi

done

# Settings for program initialization

source /etc/java.conf

export NPX_PLUGIN_PATH="$JRE_HOME/plugin/ns4plugin/:/usr/lib/netscape/plugins"

PAGER="/usr/bin/less"

unset i


This configuration file sets some basic shell environment variables as well as some variables required by users running Java and/or Java applications in their web browser. See Section 3.2.

See Chapter 7 for more on the conditional if used in this file; Chapter 9 discusses loops such as the for construct.

The Bash source contains sample profile files for general or individual use. These and the one in the example above need changes in order for them to work in your environment!

3.1.1.2. /etc/bashrc

On systems offering multiple types of shells, it might be better to put Bash-specific configurations in this file, since /etc/profile is also read by other shells, such as the Bourne shell. Errors generated by shells that don't understand the Bash syntax are prevented by splitting the configuration files for the different types of shells. In such cases, the user's ~/.bashrc might point to /etc/bashrc in order to include it in the shell initialization process upon login.

You might also find that /etc/profile on your system only holds shell environment and program startup settings, while /etc/bashrc contains system-wide definitions for shell functions and aliases. The /etc/bashrc file might be referred to in /etc/profile or in individual user shell initialization files.

The source contains sample bashrc files, or you might find a copy in /usr/share/doc/bash-2.05b/startup-files. This is part of the bashrc that comes with the Bash documentation:

alias ll='ls -l'

alias dir='ls -ba'

alias c='clear'

alias ls='ls --color'

alias mroe='more'

alias pdw='pwd'

alias sl='ls --color'

pskill()

{

local pid

pid=$(ps ax | grep "$1" | grep -v grep | gawk '{ print $1 }')

echo -n "killing $1 (process $pid)..."

kill -9 $pid

echo "slaughtered."

}


Apart from general aliases, it contains useful aliases which make commands work even if you misspell them. We will discuss aliases in Section 3.5.2. This file contains a function, pskill; functions will be studied in detail in Chapter 11.

3.1.2. Individual user configuration files

| I don't have these files?! |

|---|

| These files might not be in your home directory by default; create them if needed. |

3.1.2.1. ~/.bash_profile

This is the preferred configuration file for configuring user environments individually. In this file, users can add extra configuration options or change default settings:

franky~> cat .bash_profile

#################################################################

# #

# .bash_profile file #

# #

# Executed from the bash shell when you log in. #

# #

#################################################################

source ~/.bashrc

source ~/.bash_login

case "$OS" in

IRIX)

stty sane dec

stty erase

;;

# SunOS)

# stty erase

# ;;

*)

stty sane

;;

esac


This user configures the backspace character for login on different operating systems. Apart from that, the user's .bashrc and .bash_login are read.

3.1.2.2. ~/.bash_login

This file contains specific settings that are normally only executed when you log in to the system. In the example, we use it to configure the umask value and to show a list of connected users upon login. This user also gets the calendar for the current month:

#######################################################################
#
#   Bash_login file
#
#   commands to perform from the bash shell at login time
#   (sourced from .bash_profile)
#
#######################################################################
# file protection
umask 002    # new files rw-rw-r--, new directories rwxrwxr-x
# miscellaneous
w
cal $(date +"%m") $(date +"%Y")

In the absence of ~/.bash_profile, this file will be read.
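To see what umask 002 means in practice, the sketch below creates a scratch file and directory under that mask and reads back their permission bits. It assumes GNU coreutils, whose stat accepts -c '%a' to print the mode in octal; BSD stat uses different options.

```shell
#!/bin/bash
# Sketch: show the permissions that "umask 002" gives to new files and
# directories. Assumes GNU stat ("stat -c %a" prints the octal mode).
umask 002

scratch=$(mktemp -d)               # temporary directory for the test
touch "$scratch/newfile"           # files start from 666, minus the mask
mkdir "$scratch/newdir"            # directories start from 777, minus the mask

file_mode=$(stat -c '%a' "$scratch/newfile")
dir_mode=$(stat -c '%a' "$scratch/newdir")
echo "new file:      $file_mode"   # expected 664 (rw-rw-r--)
echo "new directory: $dir_mode"    # expected 775 (rwxrwxr-x)

rm -rf "$scratch"
```

So the group gets the same access as the owner, while others can only read (and, for directories, enter).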

3.1.2.3. ~/.profile

In the absence of ~/.bash_profile and ~/.bash_login, ~/.profile is read. It can hold the same configurations, which are then also accessible by other shells. Mind that other shells might not understand the Bash syntax.

3.1.2.4. ~/.bashrc

Today, it is more common to use a non-login shell, for instance when logged in graphically using X terminal windows. Upon opening such a window, the user does not have to provide a user name or password; no authentication is done. Bash reads ~/.bashrc when such an interactive non-login shell starts. A login shell does not read this file on its own, so it is usually sourced from the files read upon login as well; that way, you don't have to enter the same settings in multiple files.
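A common way to do this is a short test in ~/.bash_profile that reads ~/.bashrc only when it exists. The fragment below is one such layout, not the only possible one; the .bash_profile example above uses a plain source ~/.bashrc instead, which prints an error if the file is missing.

```shell
# Fragment for ~/.bash_profile: make login shells read the same
# settings that interactive non-login shells get from ~/.bashrc.
if [ -f ~/.bashrc ]; then
    . ~/.bashrc
fi
```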

In this user's .bashrc a couple of aliases are defined and variables for specific programs are set after the system-wide /etc/bashrc is read:

franky ~> cat .bashrc

# /home/franky/.bashrc

# Source global definitions

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

# shell options

set -o noclobber

# my shell variables

export PS1="\[\033[1;44m\]\u \w\[\033[0m\] "

export PATH="$PATH:$HOME/bin:$HOME/scripts"

# my aliases

alias cdrecord='cdrecord -dev 0,0,0 -speed=8'

alias ss='ssh octarine'

alias ll='ls -la'

# mozilla fix

MOZILLA_FIVE_HOME=/usr/lib/mozilla

LD_LIBRARY_PATH=/usr/lib/mozilla:/usr/lib/mozilla/plugins

MOZ_DIST_BIN=/usr/lib/mozilla

MOZ_PROGRAM=/usr/lib/mozilla/mozilla-bin

export MOZILLA_FIVE_HOME LD_LIBRARY_PATH MOZ_DIST_BIN MOZ_PROGRAM

# font fix

alias xt='xterm -bg black -fg white &'

# BitchX settings

export IRCNAME="frnk"

# THE END

franky ~>


More examples can be found in the Bash package. Remember that sample files might need changes in order to work in your environment.

Aliases are discussed in Section 3.5.
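When appending directories to PATH, keep in mind that a tilde inside double quotes is not expanded: a quoted ~/bin ends up in PATH as the literal characters ~/bin, which programs that search PATH through the C library do not treat as your home directory. The sketch below compares that with $HOME, which is expanded inside double quotes; the bin directory is only an illustration.

```shell
#!/bin/bash
# Sketch: a tilde inside double quotes stays literal, $HOME does not.
quoted_path="$PATH:~/bin"       # last element is the literal text ~/bin
home_path="$PATH:$HOME/bin"     # last element is your real home directory + /bin

# ${var##*:} strips everything up to the last colon, leaving the last element.
echo "with ~:     ${quoted_path##*:}"
echo "with \$HOME: ${home_path##*:}"
```

Using $HOME in such assignments avoids the question of where tilde expansion does or does not take place.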

3.1.2.5. ~/.bash_logout

This file contains specific instructions for the logout procedure. In the example, the terminal window is cleared upon logout. This is useful for remote connections: when you close them, a clean window is left behind.

franky ~> cat .bash_logout
#######################################################################
#
#   Bash_logout file
#
#   commands to perform from the bash shell at logout time
#
#######################################################################
clear
franky ~>

3.1.3. Changing shell configuration files

When making changes to any of the above files, users have to either reconnect to the system or source the altered file for the changes to take effect. Sourcing interprets the file in the current shell, so the changes are applied to the current shell session.

Most shell scripts execute in a private environment: variables are not inherited by child processes unless they are exported by the parent shell. Sourcing a file containing shell commands is a way of applying changes to your own environment and setting variables in the current shell.

This example also demonstrates the use of different prompt settings by different users. In this case, red means danger. When you have a green prompt, don't worry too much.

Note that source resourcefile is the same as . resourcefile.
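The difference between running a file and sourcing it can be sketched with a throwaway file that sets one variable. The file and the GREETING variable are hypothetical; only the two ways of reading the file matter.

```shell
#!/bin/bash
# Sketch: compare running a file in a child shell with sourcing it.
# The temporary file and the GREETING variable are hypothetical examples.
unset GREETING
demo_rc=$(mktemp)
echo 'GREETING="hello from the sourced file"' > "$demo_rc"

# Run as a separate bash process: the assignment is lost when it exits.
bash "$demo_rc"
after_run="$GREETING"
echo "after running it:  '$after_run'"

# Source it with "." (same as "source"): the assignment stays in this shell.
. "$demo_rc"
echo "after sourcing it: '$GREETING'"

rm -f "$demo_rc"
```

The first echo shows an empty value, because the child bash that made the assignment has already exited; the second shows the greeting.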

Should you get lost in all these configuration files, and find yourself confronted with settings of which the origin is not clear, use echo statements, just like for debugging scripts; see Section 2.3.2. You might add lines like this:

echo "Now executing .bash_profile.."

or like this:

echo "Now setting PS1 in .bashrc:"
export PS1="[some value]"
echo "PS1 is now set to $PS1"
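If you add such lines to several files, Bash can fill in the file name for you: inside a sourced file, ${BASH_SOURCE[0]} holds the name of the file being read. The sketch below writes such a line to a temporary file, standing in for a real startup file such as ~/.bashrc, and sources it.

```shell
#!/bin/bash
# Sketch: a tracing line that names its own file via BASH_SOURCE.
# The temporary file stands in for a real startup file such as ~/.bashrc.
rc_file=$(mktemp)
echo 'echo "Now executing ${BASH_SOURCE[0]}.."' > "$rc_file"

trace=$(. "$rc_file")    # source it and capture what it prints
echo "$trace"

rm -f "$rc_file"
```

The same line can then be pasted unchanged into every file you want to trace.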

3.2. Variables

3.2.1. Types of variables

As seen in the examples above, shell variables are in uppercase characters by convention. Bash keeps a list of two types of variables:

3.2.1.1. Global variables

Global variables or environment variables are available in the current shell and are passed on to every process started from it. The env or printenv commands can be used to display environment variables. These programs come with the sh-utils package, which is now part of GNU coreutils.

Below is a typical output:

franky ~> printenv
CC=gcc
CDPATH=.:~:/usr/local:/usr:/
CFLAGS=-O2 -fomit-frame-pointer
COLORTERM=gnome-terminal
CXXFLAGS=-O2 -fomit-frame-pointer
DISPLAY=:0
DOMAIN=hq.garrels.be
EDITOR=vi
FCEDIT=vi
FIGNORE=.o:~
G_BROKEN_FILENAMES=1
GDK_USE_XFT=1
GDMSESSION=Default
GNOME_DESKTOP_SESSION_ID=Default
GTK_RC_FILES=/etc/gtk/gtkrc:/nethome/franky/.gtkrc-1.2-gnome2
GWMCOLOR=darkgreen
GWMTERM=xterm
HISTFILESIZE=5000
history_control=ignoredups
HISTSIZE=2000
HOME=/nethome/franky
HOSTNAME=octarine.hq.garrels.be
INPUTRC=/etc/inputrc
IRCNAME=franky
JAVA_HOME=/usr/java/j2sdk1.4.0
LANG=en_US
LDFLAGS=-s
LD_LIBRARY_PATH=/usr/lib/mozilla:/usr/lib/mozilla/plugins
LESSCHARSET=latin1
LESS=-edfMQ
LESSOPEN=|/usr/bin/lesspipe.sh %s
LEX=flex
LOCAL_MACHINE=octarine
LOGNAME=franky
LS_COLORS=no=00:fi=00:di=01;34:ln=01;36:pi=40;33:so=01;35:bd=40;33;01:cd=40;33;01:or=01;05;37;41:mi=01;05;37;41:ex=01;32:*.cmd=01;32:*.exe=01;32:*.com=01;32:*.btm=01;32:*.bat=01;32:*.sh=01;32:*.csh=01;32:*.tar=01;31:*.tgz=01;31:*.arj=01;31:*.taz=01;31:*.lzh=01;31:*.zip=01;31:*.z=01;31:*.Z=01;31:*.gz=01;31:*.bz2=01;31:*.bz=01;31:*.tz=01;31:*.rpm=01;31:*.cpio=01;31:*.jpg=01;35:*.gif=01;35:*.bmp=01;35:*.xbm=01;35:*.xpm=01;35:*.png=01;35:*.tif=01;35:
MACHINES=octarine
MAILCHECK=60
MAIL=/var/mail/franky
MANPATH=/usr/man:/usr/share/man/:/usr/local/man:/usr/X11R6/man
MEAN_MACHINES=octarine
MOZ_DIST_BIN=/usr/lib/mozilla
MOZILLA_FIVE_HOME=/usr/lib/mozilla
MOZ_PROGRAM=/usr/lib/mozilla/mozilla-bin
MTOOLS_FAT_COMPATIBILITY=1
MYMALLOC=0
NNTPPORT=119
NNTPSERVER=news
NPX_PLUGIN_PATH=/plugin/ns4plugin/:/usr/lib/netscape/plugins
OLDPWD=/nethome/franky
OS=Linux
PAGER=less
PATH=/nethome/franky/bin.Linux:/nethome/franky/bin:/usr/local/bin:/usr/local/sbin:/usr/X11R6/bin:/usr/bin:/usr/sbin:/bin:/sbin:.
PS1=\[\033[1;44m\]franky is in \w\[\033[0m\]
PS2=More input>
PWD=/nethome/franky
SESSION_MANAGER=local/octarine.hq.garrels.be:/tmp/.ICE-unix/22106
SHELL=/bin/bash
SHELL_LOGIN=--login
SHLVL=2
SSH_AGENT_PID=22161
SSH_ASKPASS=/usr/libexec/openssh/gnome-ssh-askpass
SSH_AUTH_SOCK=/tmp/ssh-XXmhQ4fC/agent.22106
START_WM=twm
TERM=xterm
TYPE=type
USERNAME=franky
USER=franky
_=/usr/bin/printenv
VISUAL=vi
WINDOWID=20971661
XAPPLRESDIR=/nethome/franky/app-defaults
XAUTHORITY=/nethome/franky/.Xauthority
XENVIRONMENT=/nethome/franky/.Xdefaults
XFILESEARCHPATH=/usr/X11R6/lib/X11/%L/%T/%N%C%S:/usr/X11R6/lib/X11/%l/%T/%N%C%S:/usr/X11R6/lib/X11/%T/%N%C%S:/usr/X11R6/lib/X11/%L/%T/%N%S:/usr/X11R6/lib/X11/%l/%T/%N%S:/usr/X11R6/lib/X11/%T/%N%S
XKEYSYMDB=/usr/X11R6/lib/X11/XKeysymDB
XMODIFIERS=@im=none
XTERMID=
XWINHOME=/usr/X11R6
X=X11R6
YACC=bison -y

3.2.1.2. Local variables

Local variables are only available in the current shell. Using the set built-in command without any options will display a list of all variables (including environment variables) and functions. The output will be sorted according to the current locale and displayed in a reusable format.

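Below is the output of a diff comparing the sorted output of set and printenv, after leaving out the functions that set also displays. Because awk prints only the second whitespace-separated field of each line, values that contain spaces appear truncated (see for instance IFS and PS4):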

franky ~> diff set.sorted printenv.sorted | grep "<" | awk '{ print $2 }'

BASE=/nethome/franky/.Shell/hq.garrels.be/octarine.aliases

BASH=/bin/bash

BASH_VERSINFO=([0]="2"

BASH_VERSION='2.05b.0(1)-release'

COLUMNS=80

DIRSTACK=()

DO_FORTUNE=

EUID=504

GROUPS=()

HERE=/home/franky

HISTFILE=/nethome/franky/.bash_history

HOSTTYPE=i686

IFS=$'

LINES=24

MACHTYPE=i686-pc-linux-gnu

OPTERR=1

OPTIND=1

OSTYPE=linux-gnu

PIPESTATUS=([0]="0")

PPID=10099

PS4='+

PWD_REAL='pwd

SHELLOPTS=braceexpand:emacs:hashall:histexpand:history:interactive-comments:monitor

THERE=/home/franky

UID=504


Awk

The GNU Awk programming language is explained in Chapter 6.
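A shorter route to a similar list, without preparing sorted files by hand, uses two options of the compgen built-in: compgen -v prints the names of all shell variables and compgen -e only those of exported ones. The sketch below keeps the names that appear in the first list only. It compares names, not values, and assumes Bash was built with programmable completion, which is the usual default; the two demo variables are hypothetical.

```shell
#!/bin/bash
# Two hypothetical variables for the demonstration.
my_local_var="only in this shell"
export my_exported_var="passed on to children"

# compgen -v lists all shell variable names, compgen -e only the exported ones.
# comm -23 keeps the names that appear in the first list but not in the second.
local_only=$(comm -23 <(compgen -v | sort) <(compgen -e | sort))
echo "$local_only"
```

The output includes my_local_var and shell variables such as BASH_VERSION, but not my_exported_var.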

3.2.1.3. Variables by content

Apart from dividing variables into local and global variables, we can also divide them into categories according to the kind of content they hold. In this respect, variables come in four types:

String variables

Integer variables

Constant variables

Array variables

We'll discuss these types in Chapter 10. For now, we will work with integer and string values for our variables.

3.2.2. Creating variables

Variable names are case sensitive and, by convention, written in uppercase. Giving local variables a lowercase name is a convention which is sometimes applied. However, you are free to use the names you want or to mix cases. Names can also contain digits and underscores, but a name starting with a digit is not allowed:

prompt> export 1number=1
bash: export: `1number=1': not a valid identifier
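The naming rule can be written as a pattern: letters, digits and underscores, not starting with a digit. The hypothetical function below tests a candidate name against that pattern with Bash's [[ =~ ]] operator (regular expressions are covered in Chapter 4); it assumes plain ASCII names.

```shell
#!/bin/bash
# Hypothetical helper: succeed if $1 is a valid Bash variable name,
# i.e. letters, digits and underscores, not starting with a digit.
is_valid_name() {
    [[ $1 =~ ^[A-Za-z_][A-Za-z0-9_]*$ ]]
}

for name in MYVAR1 first_name 1number MYVAR-2; do
    if is_valid_name "$name"; then
        echo "$name: valid"
    else
        echo "$name: not valid"
    fi
done
```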

To set a variable in the shell, use

VARNAME="value"

Putting spaces around the equal sign will cause errors. It is a good habit to quote content strings when assigning values to variables: this will reduce the chance that you make errors.

Some examples using upper and lower cases, numbers and spaces:

franky ~> MYVAR1="2"
franky ~> echo $MYVAR1
2
franky ~> first_name="Franky"
franky ~> echo $first_name
Franky
franky ~> full_name="Franky M. Singh"
franky ~> echo $full_name
Franky M. Singh
franky ~> MYVAR-2="2"
bash: MYVAR-2=2: command not found
franky ~> MYVAR1 ="2"
bash: MYVAR1: command not found
franky ~> MYVAR1= "2"
bash: 2: command not found
franky ~> unset MYVAR1 first_name full_name
franky ~> echo $MYVAR1 $first_name $full_name
<--no output-->
franky ~>
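The last two errors make sense once you know that an assignment written directly in front of a command only applies to that command's environment. With MYVAR1 ="2", Bash sees a command named MYVAR1; with MYVAR1= "2", it sets MYVAR1 to an empty value for a command named 2. The sketch below shows such a temporary assignment with printenv, a command that does exist.

```shell
#!/bin/bash
# Sketch: an assignment written in front of a command is a temporary
# environment setting for that command only.
MYVAR1="original"

# printenv runs with MYVAR1=temporary in its environment...
in_child=$(MYVAR1="temporary" printenv MYVAR1)
# ...while the current shell keeps its own value.
in_shell=$MYVAR1

echo "printenv saw:  $in_child"
echo "current shell: $in_shell"

# With a space after "=", the word "2" becomes the command name and
# MYVAR1 is set to the empty string for it; no such command exists.
MYVAR1= "2" 2>/dev/null || echo "running \"2\" failed with exit status $?"
```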

3.2.3. Exporting variables

A variable created like the ones in the example above is only available to the current shell. It is a local variable: child processes of the current shell will not be aware of this variable. In order to pass variables to a subshell, we need to export them using the export built-in command. Variables that are exported are referred to as environment variables. Setting and exporting is usually done in one step:

export VARNAME="value"

A subshell can change variables it inherited from the parent, but the changes made by the child don't affect the parent. This is demonstrated in the example:

franky ~> full_name="Franky M. Singh"
franky ~> bash
franky ~> echo $full_name

franky ~> exit
franky ~> export full_name
franky ~> bash
franky ~> echo $full_name
Franky M. Singh
franky ~> export full_name="Charles the Great"
franky ~> echo $full_name
Charles the Great
franky ~> exit
franky ~> echo $full_name
Franky M. Singh
franky ~>

When first trying to read the value of full_name in a subshell, it is not there (echo shows a null string). The subshell quits, and full_name is exported in the parent; a variable can be exported after it has been assigned a value. Then a new subshell is started, in which the variable exported from the parent is visible. The variable is changed to hold another name, but the value for this variable in the parent stays the same.
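The same session can be replayed in a script, using bash -c to start a child shell that runs a single command string instead of typing into interactive subshells. This is a sketch of the steps above, not a replacement for trying them by hand.

```shell
#!/bin/bash
# Sketch of the session above without interactive subshells:
# bash -c starts a child shell that runs one command string.
unset full_name                      # start from a clean state
full_name="Franky M. Singh"          # a local (unexported) variable

# Single quotes: $full_name is expanded by the child, not by this shell.
before=$(bash -c 'echo "${full_name:-<unset>}"')

export full_name                     # from now on, children inherit a copy

seen=$(bash -c 'echo "$full_name"')
changed=$(bash -c 'full_name="Charles the Great"; echo "$full_name"')

echo "child, before export: $before"
echo "child, after export:  $seen"
echo "child, after change:  $changed"
echo "parent:               $full_name"
```

The child only sees the variable after the export, and its change to the copy it received does not travel back to the parent.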

3.2.4. Reserved variables

3.2.4.1. Bourne shell reserved variables

Bash uses certain shell variables in the same way as the Bourne shell. In some cases, Bash assigns a default value to the variable. The table below gives an overview of these plain shell variables:

Table 3-1. Reserved Bourne shell variables

| Variable name | Definition |

|---|---|

| CDPATH | A colon-separated list of directories used as a search path for the cd built-in command. |

| HOME | The current user's home directory; the default for the cd built-in. The value of this variable is also used by tilde expansion. |